Ever since Alexander Graham Bell invented the telephone in 1876, the world has moved at a frenzied pace. While Bell himself reportedly regarded the telephone as a distraction on his work desk, the rest of humanity, awed by its potential to bring a virtual presence into everyday life, embraced it gladly.

Nearly seventy years after the invention of the telephone came another significant technological breakthrough. The invention of the computer gave us the power to replicate our thoughts and logical reasoning on a machine. Computers and telephony services initially evolved entirely separately (computers were fundamentally designed to work as standalone systems), but scientists and innovators soon realized the potential of a distributed computer network. In the decades that followed, computer networks and telephony services converged, giving rise to the term ICT, the acronym for Information and Communication Technology.

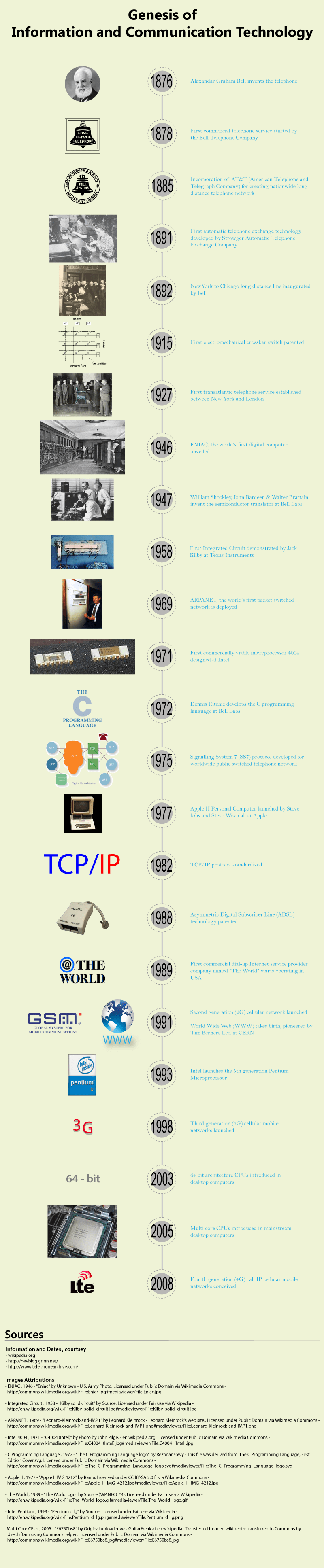

Evolution of ICT

Here is a depiction, in chronological order, of the most significant events which influenced the emergence of ICT since the invention of the telephone.

The Virtual Experience

With the advent of ICT, we now live in an era when we can talk to our friends and family instantly, no matter how far away they are. We can access all forms of information and share it with anybody instantly, without meeting them in person. Such a virtual world was unthinkable earlier in history. Now it surrounds us, and we are becoming ever more immersed in its pervasiveness.

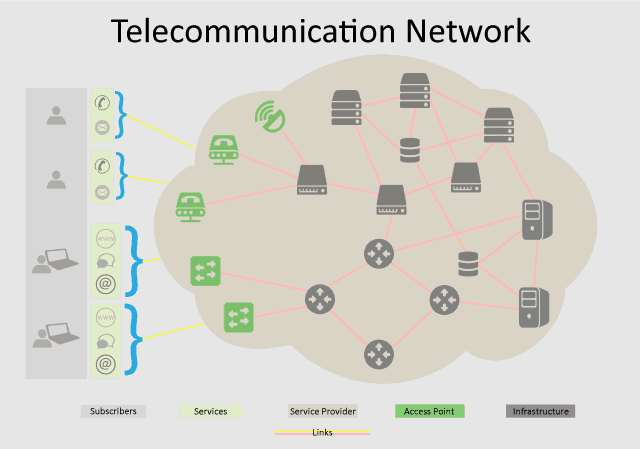

But think for a moment: is this all magic? How does this virtual communication actually happen? As the user placing a phone call, you only care about hearing the voice at the other end. Behind the scenes, however, something is being orchestrated: devices and equipment such as switches, routers, and servers (known as the infrastructure) are interconnected by a set of connections (known as the links). A company (known as the telecom operator or service provider) owns and operates this infrastructure and offers us (the subscribers) services such as making a call (known as services or applications) through a set of access points. This whole arrangement constitutes what is called a telecommunication network infrastructure.

Icons courtesy of http://icons8.com/

The Real Enabler Behind Virtualism

This telecom infrastructure is real: bricks and mortar. The companies that build and maintain it face the same problems that humanity faced before the advent of ICT. It takes time and effort to build physical spaces to house the infrastructure and to interconnect them. As the infrastructure and services expand, both space and cost become constraints. This expansion is a never-ending challenge for the telecom service provider, which is why IaaS (Infrastructure as a Service) is leading another wave of virtualization over the cloud.

But what drives this expansion? One obvious reason is the ever-increasing subscriber base. Beyond that, there are a few more specific reasons, driven mainly by technological evolution and changing usage patterns.

Some hard facts behind the expansion

Let’s look at the five chief factors.

- Ever-increasing applications/services: Forty years ago, telecommunications was largely about voice telephony and telegraphy. Today we have multimedia-enabled computers and smartphones that consume voice, video, and data, giving users a richer experience in many different ways. We have the ubiquitous web and a plethora of web and mobile apps for messaging, presence, location-based services, device communication, and more. The list keeps growing every few years.

- Different quality of service requirements: A good-quality voice call requires a guaranteed bit rate, that is, an assured bandwidth that the network provides to both parties in the call for an intelligible conversation. Other classes of applications do not need this guarantee. A file transfer from an FTP server, for example, does not need a guaranteed bit rate; it wants the maximum available bit rate so that the transfer completes as soon as possible. However, file transfer has lower priority than a real-time voice call. So apart from bandwidth commitments, the network also needs to prioritize applications. Together these requirements are known as quality of service (QoS), and they vary from one class of application to another. Today's many applications each expect a different quality of service from the network.

- Dynamic traffic behavior: The telephone networks of yesteryear were static in nature. Every call made by a subscriber caused the network to allocate and reserve the interconnected links between the caller and the called subscriber for the entire duration of the call, whether or not anyone was speaking. This is known as circuit switching. As the number of subscribers and applications grew, this approach did not scale well for telecom operators, and with different applications needing different levels of quality of service, static allocation becomes severely inefficient at scale.

- Wireless in the last mile: We are moving towards a wireless world because of the evident conveniences of mobility. But this comes at the cost of increased network complexity. Not only does the network have to manage and track subscribers’ mobility, it also has to ensure minimum service guarantees in extreme cases, such as when a subscriber is moving very fast or is at the edge of the coverage area, where the wireless signal is weak.

- Insatiable desire to consume media: All progress in society is driven by human needs. With the Internet and its associated applications and possibilities, the need is growing in only one direction: more bandwidth and more content. In the early days of the Internet, dial-up connections served static HTML pages with text, graphics, and applets, plus the option to download audio and video files. Today, HTML pages embed audio, video, animations, interactive presentations, graphics, and immersive 3D experiences, all working in sync to offer rich content never seen before. Supporting this requires the network to deliver more than a hundred-fold increase in bandwidth.
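The guaranteed-bit-rate versus maximum-available-bit-rate distinction in the quality of service factor above can be illustrated with a toy allocator for a single shared link. This is a minimal sketch, not how any particular network implements QoS: the `Flow` type, the `allocate` function, the priority numbers, and the rule of splitting leftover capacity equally among best-effort flows are all hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass
class Flow:
    """A hypothetical traffic flow on one shared link."""
    name: str
    priority: int          # lower number = admitted first among guaranteed flows
    gbr_kbps: float = 0.0  # guaranteed bit rate; 0 means best effort


def allocate(link_kbps: float, flows: list[Flow]) -> dict[str, float]:
    """Toy QoS allocator (an illustrative assumption, not a standard algorithm).

    1. Guaranteed-bit-rate flows (e.g. voice) are admitted in priority order,
       each receiving exactly its guarantee while capacity remains. A flow
       whose guarantee cannot be met is rejected (gets 0) rather than degraded.
    2. Whatever capacity is left is split equally among best-effort flows
       (e.g. file transfer), which simply take the maximum available bit rate.
    """
    allocation: dict[str, float] = {}
    remaining = link_kbps

    guaranteed = sorted((f for f in flows if f.gbr_kbps > 0), key=lambda f: f.priority)
    for flow in guaranteed:
        if flow.gbr_kbps <= remaining:
            allocation[flow.name] = flow.gbr_kbps
            remaining -= flow.gbr_kbps
        else:
            allocation[flow.name] = 0.0  # admission control: guarantee not possible

    best_effort = [f for f in flows if f.gbr_kbps == 0]
    share = remaining / len(best_effort) if best_effort else 0.0
    for flow in best_effort:
        allocation[flow.name] = share
    return allocation


if __name__ == "__main__":
    flows = [
        Flow("voice-call-1", priority=1, gbr_kbps=64.0),
        Flow("voice-call-2", priority=1, gbr_kbps=64.0),
        Flow("ftp-download", priority=9),
        Flow("web-browsing", priority=9),
    ]
    print(allocate(1000.0, flows))
```

On a 1000 kbit/s link, the two voice calls each receive their 64 kbit/s guarantee, and the two best-effort flows split the remaining 872 kbit/s. Real networks use far more elaborate schedulers, but the ordering (guarantees first, leftovers to everyone else) is the point being illustrated.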

So what is the impact of all this on the service provider?

There is only one answer: “Grow, Expand & Upgrade.” Else, perish!

More applications and more bandwidth mean that service providers have to continuously grow their service offerings. They also need to expand their telecom infrastructure to increase their coverage area. If the core infrastructure is co-located in one physical space, chances are they will quickly run out of room. Moreover, managing different quality of service levels and dynamic traffic behavior across both wired and wireless infrastructure is no longer feasible with hardware-only devices. Handling this complexity requires software-based, programmable logic. For this, providers need to upgrade to software-defined, programmable systems that can be deployed on demand, altered dynamically, and run on general-purpose hardware.

So what does this lead to?

Since physical space always limits their expansion plans, service providers will find it more convenient to adopt network virtualization. They can converge their physical telecom network infrastructure with an on-demand, cloud-based network function virtualization (NFV) system, which can run on general-purpose hardware with standard, off-the-shelf software and operating systems.

But wait, we still have a problem

What about the connectivity and orchestration?

With physically co-located devices, connectivity across the infrastructure can be managed locally over a private network. With virtualization, however, devices need to connect via the Internet or some form of VPN. And if these devices keep shifting as the network expands, their IP addresses and the underlying network topology keep changing, adding configuration and management hassles.

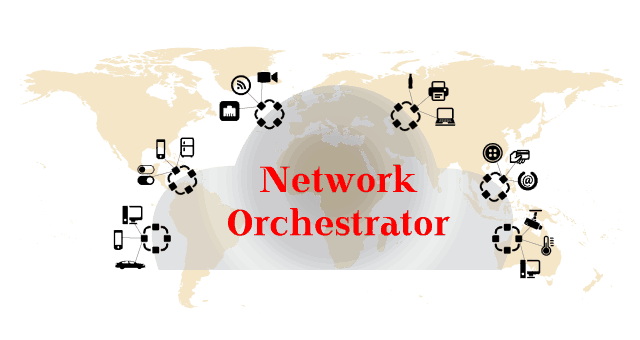

So for network virtualization to work over the Internet, we need a single, global way of orchestrating network resources and functions, irrespective of whether they are deployed physically or virtually.

This is where the orchestrator becomes critical. It has to manage this mess of frequently changing network topologies and shifting network functions using standards-driven, rule-based, predictable business logic, while ensuring that there is no impact on network management and other auxiliary functions.
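To make one of the orchestrator's duties more concrete, here is a deliberately small sketch: each network function keeps a stable logical name while its physical or virtual location changes underneath it, and consumers resolve the name through a simple, predictable rule. Everything here (the `Orchestrator` and `Placement` classes, their method names, the example function name, and the "prefer on-site physical instances, fall back to virtual ones" rule) is hypothetical; real orchestration frameworks are far richer and standards-driven.

```python
from dataclasses import dataclass, field


@dataclass
class Placement:
    """Where one instance of a network function currently runs (hypothetical model)."""
    address: str   # current IP address or endpoint
    virtual: bool  # True if the instance runs virtually in the cloud


@dataclass
class Orchestrator:
    """Toy registry mapping stable logical names to changing placements."""
    placements: dict[str, list[Placement]] = field(default_factory=dict)

    def deploy(self, function: str, address: str, virtual: bool) -> None:
        """Record a new physical or virtual instance of `function`."""
        self.placements.setdefault(function, []).append(Placement(address, virtual))

    def move(self, function: str, old_address: str, new_address: str) -> None:
        """Update an instance's address after it has been migrated."""
        for placement in self.placements.get(function, []):
            if placement.address == old_address:
                placement.address = new_address
                return
        raise KeyError(f"{function} has no instance at {old_address}")

    def resolve(self, function: str) -> str:
        """Return an endpoint for `function` using one predictable rule:
        prefer on-site physical instances, otherwise use a virtual one."""
        instances = self.placements.get(function)
        if not instances:
            raise LookupError(f"no instance of {function} is deployed")
        physical = [p for p in instances if not p.virtual]
        return (physical or instances)[0].address


if __name__ == "__main__":
    orch = Orchestrator()
    orch.deploy("mobility-manager", "10.0.0.5", virtual=False)
    orch.deploy("mobility-manager", "203.0.113.20", virtual=True)
    print(orch.resolve("mobility-manager"))  # 10.0.0.5 (physical preferred)
    orch.move("mobility-manager", "10.0.0.5", "10.0.1.7")
    print(orch.resolve("mobility-manager"))  # 10.0.1.7 (same name, new address)
```

The design choice being illustrated is indirection: callers never hard-code an IP address, so a function can be migrated or re-deployed without reconfiguring everything that depends on it.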

The orchestrator is reminiscent of the now-discredited 19th-century ether theory, when scientists believed that an invisible substance called ‘ether’ pervaded all space and served as the medium through which electromagnetic waves travel.

The orchestrator acts as that omnipresent “ether.” Through it, network functions are deployed across the service provider’s infrastructure, either physically or virtually, completing the chain of links that delivers a service from one end of the world to the other. Indeed, we as subscribers also rely on this “ether” to connect virtually with other users across the world.

Icons courtesy of http://icons8.com/

Need for Network Virtualization?

So why would a service provider need network virtualization?

- Load Balancing: The load on network functions and resources fluctuates considerably with seasonal demand, usage patterns, and outages. It is more efficient to balance this load autonomously than to rely on manual intervention. Network virtualization helps automate this without any physical space constraints.

- Physical Constraints and Limitations: Managing physical network functions becomes prohibitive beyond a certain point. Acquiring additional physical real estate takes many times longer than spawning virtual entities.

- Operational Flexibility: We have evolved from circuit switching to packet switching. So why restrict ourselves to a static network configuration that resists change and expansion? Network virtualization gives us the flexibility to allocate and use network functions dynamically, just as packet switching does for link capacity.

- Cost vs Quality: Dynamic allocation of network functions avoids the extra cost of running underutilized network infrastructure. Running such infrastructure is like a restaurant preparing dishes in anticipation of guests who never turn up. Network virtualization helps service providers avoid this costly expense: they can plan their infrastructure so that only a critical minimum is deployed on-site, and the rest is acquired on demand. This also guards against running overutilized network functions, which are difficult to expand on-site and can eventually lead to poor quality of service.
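The load balancing and “critical minimal on-site deployment, rest on demand” points above boil down to a simple sizing rule. The sketch below assumes, purely for illustration, that capacity is measured in one abstract unit (say, calls per second), that each virtual instance adds a fixed amount of capacity, and that the provider keeps some headroom (`target_utilization`) below 100 % so functions are not overutilized. The function name and all parameters are hypothetical, not taken from any NFV product.

```python
import math


def virtual_instances_needed(
    demand: float,
    onsite_capacity: float,
    instance_capacity: float,
    target_utilization: float = 0.8,
) -> int:
    """Hypothetical autoscaling rule: how many cloud instances to run right now.

    The on-site deployment is kept permanently. Virtual instances cover only
    the demand that on-site capacity cannot carry while staying at or below
    the target utilization; the headroom protects quality of service.
    """
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    if instance_capacity <= 0:
        raise ValueError("instance_capacity must be positive")
    # Total capacity required so that utilization does not exceed the target.
    required = demand / target_utilization
    shortfall = required - onsite_capacity
    if shortfall <= 0:
        return 0  # on-site capacity suffices; no idle cloud instances to pay for
    # Round up: a partial instance cannot be spawned.
    return math.ceil(shortfall / instance_capacity)
```

For example, with 800 units on-site, 100 units per virtual instance, and a 75 % utilization target, a demand of 900 units calls for four virtual instances, and the count falls back to zero once demand drops to 600 units or less. Re-evaluating such a rule periodically is one simple way to scale up during peaks and release capacity afterwards.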

Conclusion

No service provider in the world is immune to the ever-changing scalability requirements of its network. These are largely governed by economic and seasonal behavior, coupled with complex patterns of technological evolution, beyond anybody’s control. Deploying virtual infrastructure over the cloud gives providers the flexibility to scale up or down according to the prevailing situation. The need of the hour is a standards-driven framework in which connectivity and management across the entire network infrastructure are seamless, whether that infrastructure is purely virtual, purely physical, or a mix of both.