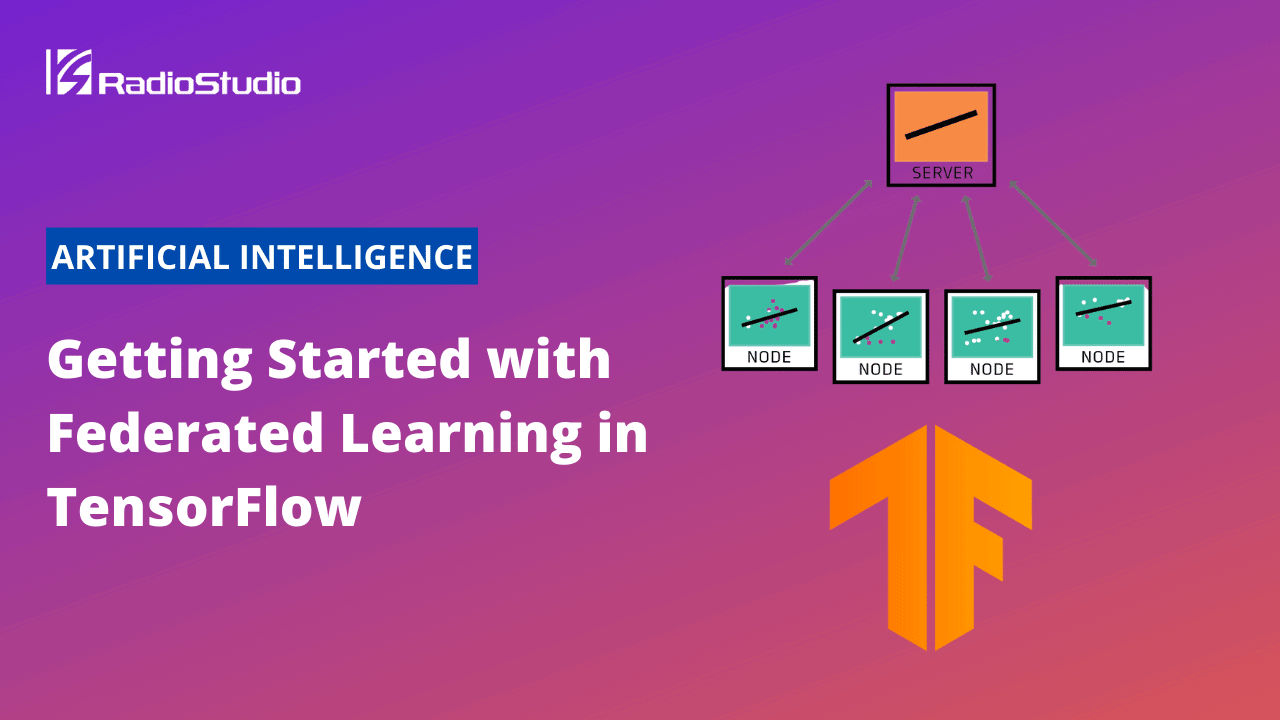

Federated learning (also known as collaborative learning) is a machine learning technique that trains an algorithm across multiple decentralized edge devices or servers holding local data samples, without exchanging them. This approach stands in contrast to traditional centralized machine learning techniques where all the local datasets are uploaded to one server, as well as to more classical decentralized approaches which often assume that local data samples are identically distributed. This article demonstrates how to get started with TensorFlow Federated, which is an open-source framework by Google used to implement Federated Learning.

This article was originally published by Tensorflow.

TensorFlow Federated

The TensorFlow Federated (TFF) platform consists of two layers:

- Federated Learning (FL), high-level interfaces to plug existing Keras or non-Keras machine learning models into the TFF framework. You can perform basic tasks, such as federated training or evaluation, without having to study the details of federated learning algorithms.

- Federated Core (FC), lower-level interfaces to concisely express custom federated algorithms by combining TensorFlow with distributed communication operators within a strongly-typed functional programming environment.

Start with the TFF tutorials that walk you through the main TFF concepts and APIs using practical examples. Make sure to follow the installation instructions to configure your environment for use with TFF.

The more detailed guides (see the left sidebar of this page) then provide reference information on important topics.

Explore Our Emerging Technology Use Case Index

Install TensorFlow Federated

There are a few ways to set up your environment to use TensorFlow Federated (TFF):

- The easiest way to learn and use TFF requires no installation; run the TensorFlow Federated tutorials directly in your browser using Google Colaboratory.

- To use TensorFlow Federated on a local machine, install the TFF package with Python’s

pippackage manager. - If you have a unique machine configuration, build the TFF package from source .

Install TensorFlow Federated using pip

1. Install the Python development environment.

sudo apt update

sudo apt install python3-dev python3-pip # Python 32. Create a virtual environment.

python3 -m venv "venv"

source "venv/bin/activate"

pip install --upgrade pipNote: To exit the virtual environment, run deactivate.

3. Install the released TensorFlow Federated Python package.

pip install --upgrade tensorflow-federated4. Test Tensorflow Federated.

python -c "import tensorflow_federated as tff; print(tff.federated_computation(lambda: 'Hello World')())"Success: The latest TensorFlow Federated Python package is now installed.

Build the TensorFlow Federated Python package from source

Building a TensorFlow Federated Python package from source is helpful when you want to:

- Make changes to TensorFlow Federated and test those changes in a component that uses TensorFlow Federated before those changes are submitted or released.

- Use changes that have been submitted to TensorFlow Federated but have not been released.

1. Install the Python development environment.

sudo apt update

sudo apt install python3-dev python3-pip # Python 32. Install Bazel.

Install Bazel, the build tool used to compile Tensorflow Federated.

3. Clone the Tensorflow Federated repository.

git clone https://github.com/tensorflow/federated.git

cd "federated"4. Build the TensorFlow Federated Python package.

mkdir "/tmp/tensorflow_federated"

bazel run //tensorflow_federated/tools/python_package:build_python_package -- \

--output_dir="/tmp/tensorflow_federated"5. Create a new project.

mkdir "/tmp/project"

cd "/tmp/project"6. Create a virtual environment.

python3 -m venv "venv"

source "venv/bin/activate"

pip install --upgrade pipNote: To exit the virtual environment run deactivate.

7. Install the TensorFlow Federated Python package.

pip install --upgrade "/tmp/tensorflow_federated/"*".whl"8. Test Tensorflow Federated.

python -c "import tensorflow_federated as tff; print(tff.federated_computation(lambda: 'Hello World')())"Success: A TensorFlow Federated Python package is now built from source and installed.

TFF simulations on GCP

This part of the tutorial will describe how to run TFF simulations on GCP.

Run a simulation on a single runtime container

1. Install and initialize the Cloud SDK..

2. Clone the TensorFlow Federated repository.

$ git clone https://github.com/tensorflow/federated.git$ cd "federated"3. Run a single runtime container.

Build a runtime container.

$ docker build \ --network=host \ --tag "/tff-runtime" \ --file "tensorflow_federated/tools/runtime/container/latest.Dockerfile" \ .Publish the runtime container.

$ docker push /tff-runtimeCreate a Compute Engine instance

- In the Cloud Console, go to the VM Instances page.

- Click Create instance.

- In the Firewall section, select Allow HTTP traffic and Allow HTTPS traffic.

- Click Create to create the instance.

ssh into the instance.

$ gcloud compute ssh Run the runtime container in the background.

$ docker run \ --detach \ --name=tff-runtime \ --publish=8000:8000 \ /tff-runtimeExit the instance.

$ exitGet the internal IP address of the instance.

This is used later as a parameter to our test script.

$ gcloud compute instances describe <instance> \

--format='get(networkInterfaces[0].networkIP)'

4. Run a simulation on a client container.

Build a client container.

$ docker build \ --network=host \ --tag "/tff-client" \ --file "tensorflow_federated/tools/client/latest.Dockerfile" \ .Publish the client container.

$ docker push /tff-clientCreate a Compute Engine instance

- In the Cloud Console, go to the VM Instances page.

- Click Create instance.

- In the Firewall section, select Allow HTTP traffic and Allow HTTPS traffic.

- Click Create to create the instance.

Copy your experiement to the Compute Engine instance.

$ gcloud compute scp \ "tensorflow_federated/tools/client/test.py" \ :~ssh into the instance.

$ gcloud compute ssh Run the client container interactively.

The string “Hello World” should print to the terminal.

$ docker run \ --interactive \ --tty \ --name=tff-client \ --volume ~/:/simulation \ --workdir /simulation \ /tff-client \ bashRun the Python script.

Using the internal IP address of the instance running the runtime container.

$ python3 test.py --host ''Exit the container.

$ exitExit the instance.

$ exitFederated Learning

Overview

This part of the tutorial introduces interfaces that facilitate federated learning tasks, such as federated training or evaluation with existing machine learning models implemented in TensorFlow. In designing these interfaces, our primary goal was to make it possible to experiment with federated learning without requiring the knowledge of how it works under the hood, and to evaluate the implemented federated learning algorithms on a variety of existing models and data. We encourage you to contribute back to the platform. TFF has been designed with extensibility and composability in mind, and we welcome contributions; we are excited to see what you come up with!

The interfaces offered by this layer consist of the following three key parts:

- Models. Classes and helper functions that allow you to wrap your existing models for use with TFF. Wrapping a model can be as simple as calling a single wrapping function (e.g.,

tff.learning.from_keras_model), or defining a subclass of thetff.learning.Modelinterface for full customizability. - Federated Computation Builders. Helper functions that construct federated computations for training or evaluation, using your existing models.

- Datasets. Canned collections of data that you can download and access in Python for use in simulating federated learning scenarios. Although federated learning is designed for use with decentralized data that cannot be simply downloaded at a centralized location, at the research and development stages it is often convenient to conduct initial experiments using data that can be downloaded and manipulated locally, especially for developers who might be new to the approach.

These interfaces are defined primarily in the tff.learning namespace, except for research data sets and other simulation-related capabilities that have been grouped in tff.simulation. This layer is implemented using lower-level interfaces offered by the Federated Core (FC), which also provides a runtime environment.

Before proceeding, we recommend that you first review the tutorials on image classification and text generation, as they introduce most of the concepts described here using concrete examples. If you’re interested in learning more about how TFF works, you may want to skim over the custom algorithms tutorial as an introduction to the lower-level interfaces we use to express the logic of federated computations, and to study the existing implementation of the tff.learning interfaces.

Models

Architectural assumptions

Serialization

TFF aims at supporting a variety of distributed learning scenarios in which the machine learning model code you write might be executing on a large number of heterogeneous clients with diverse capabilities. While at one end of the spectrum, in some applications those clients might be powerful database servers, many important uses our platform intends to support involve mobile and embedded devices with limited resources. We cannot assume that these devices are capable of hosting Python runtimes; the only thing we can assume at this point is that they are capable of hosting a local TensorFlow runtime. Thus, a fundamental architectural assumption we make in TFF is that your model code must be serializable as a TensorFlow graph.

You can (and should) still develop your TF code following the latest best practices like using eager mode. However, the final code must be serializable (e.g., can be wrapped as a tf.function for eager-mode code). This ensures that any Python state or control flow necessary at execution time can be serialized (possibly with the help of Autograph).

Currently, TensorFlow does not fully support serializing and deserializing eager-mode TensorFlow. Thus, serialization in TFF currently follows the TF 1.0 pattern, where all code must be constructed inside a tf.Graph that TFF controls. This means currently TFF cannot consume an already-constructed model; instead, the model definition logic is packaged in a no-arg function that returns a tff.learning.Model. This function is then called by TFF to ensure all components of the model are serialized. In addition, being a strongly-typed environment, TFF will require a little bit of additional metadata, such as a specification of your model’s input type.

Aggregation

We strongly recommend most users construct models using Keras, see the Converters for Keras section below. These wrappers handle the aggregation of model updates as well as any metrics defined for the model automatically. However, it may still be useful to understand how aggregation is handled for a general tff.learning.Model.

There are always at least two layers of aggregation in federated learning: local on-device aggregation, and cross-device (or federated) aggregation:

- Local aggregation. This level of aggregation refers to aggregation across multiple batches of examples owned by an individual client. It applies to both the model parameters (variables), which continue to sequentially evolve as the model is locally trained, as well as the statistics you compute (such as average loss, accuracy, and other metrics), which your model will again update locally as it iterates over each individual client’s local data stream. Performing aggregation at this level is the responsibility of your model code, and is accomplished using standard TensorFlow constructs. The general structure of processing is as follows:

- The model first constructs

tf.Variables to hold aggregates, such as the number of batches or the number of examples processed, the sum of per-batch or per-example losses, etc. - TFF invokes the

forward_passmethod on yourModelmultiple times, sequentially over subsequent batches of client data, which allows you to update the variables holding various aggregates as a side effect. - Finally, TFF invokes the

report_local_unfinalized_metricsmethod on your Model to allow your model to compile all the summary statistics it collected into a compact set of metrics to be exported by the client. This is where your model code may, for example, divide the sum of losses by the number of examples processed to export the average loss, etc.

- The model first constructs

- Federated aggregation. This level of aggregation refers to aggregation across multiple clients (devices) in the system. Again, it applies to both the model parameters (variables), which are being averaged across clients, as well as the metrics your model exported as a result of local aggregation. Performing aggregation at this level is the responsibility of TFF. As a model creator, however, you can control this process (more on this below). The general structure of processing is as follows:

- The initial model, and any parameters required for training, are distributed by a server to a subset of clients that will participate in a round of training or evaluation.

- On each client, independently and in parallel, your model code is invoked repeatedly on a stream of local data batches to produce a new set of model parameters (when training), and a new set of local metrics, as described above (this is local aggregation).

- TFF runs a distributed aggregation protocol to accumulate and aggregate the model parameters and locally exported metrics across the system. This logic is expressed in a declarative manner using TFF’s own federated computation language (not in TensorFlow). See the custom algorithms tutorial for more on the aggregation API.

Abstract interfaces

This basic constructor + metadata interface is represented by the interface tff.learning.Model, as follows:

- The constructor,

forward_pass, andreport_local_unfinalized_metricsmethods should construct model variables, forward pass, and statistics you wish to report, correspondingly. The TensorFlow constructed by those methods must be serializable, as discussed above. - The

input_specproperty, as well as the 3 properties that return subsets of your trainable, non-trainable, and local variables represent the metadata. TFF uses this information to determine how to connect parts of your model to the federated optimization algorithms, and to define internal type signatures to assist in verifying the correctness of the constructed system (so that your model cannot be instantiated over data that does not match what the model is designed to consume).

In addition, the abstract interface tff.learning.Model exposes a property metric_finalizers that takes in a metric’s unfinalized values (returned by report_local_unfinalized_metrics()) and returns the finalized metric values. The metric_finalizers and report_local_unfinalized_metrics() method will be used together to build a cross-client metrics aggregator when defining the federated training processes or evaluation computations. For example, a simple tff.learning.metrics.sum_then_finalize aggregator will first sum the unfinalized metric values from clients, and then call the finalizer functions at the server.

You can find examples of how to define your own custom tff.learning.Model in the second part of our image classification tutorial, as well as in the example models we use for testing in model_examples.py.

Converters for Keras

Nearly all the information that’s required by TFF can be derived by calling tf.keras interfaces, so if you have a Keras model, you can rely on tff.learning.from_keras_model to construct a tff.learning.Model.

Note that TFF still wants you to provide a constructor – a no-argument model function such as the following:

def model_fn(): keras_model = ... return tff.learning.from_keras_model(keras_model, sample_batch, loss=...)

In addition to the model itself, you supply a sample batch of data which TFF uses to determine the type and shape of your model’s input. This ensures that TFF can properly instantiate the model for the data that will actually be present on client devices (since we assume this data is not generally available at the time you are constructing the TensorFlow to be serialized).

The use of Keras wrappers is illustrated in our image classification and text generation tutorials.

Federated Computation Builders

The tff.learning package provides several builders for tff.Computations that perform learning-related tasks; we expect the set of such computations to expand in the future.

Architectural assumptions

Execution

There are two distinct phases in running a federated computation.

- Compile: TFF first compiles federated learning algorithms into an abstract serialized representation of the entire distributed computation. This is when TensorFlow serialization happens, but other transformations can occur to support more efficient execution. We refer to the serialized representation emitted by the compiler as a federated computation.

- Execute TFF provides ways to execute these computations. For now, execution is only supported via a local simulation (e.g., in a notebook using simulated decentralized data).

A federated computation generated by TFF’s Federated Learning API, such as a training algorithm that uses federated model averaging, or a federated evaluation, includes a number of elements, most notably:

- A serialized form of your model code as well as additional TensorFlow code constructed by the Federated Learning framework to drive your model’s training/evaluation loop (such as constructing optimizers, applying model updates, iterating over

tf.data.Datasets, and computing metrics, and applying the aggregated update on the server, to name a few). - A declarative specification of the communication between the clients and a server (typically various forms of aggregation across the client devices, and broadcasting from the server to all clients), and how this distributed communication is interleaved with the client-local or server-local execution of TensorFlow code.

The federated computations represented in this serialized form are expressed in a platform-independent internal language distinct from Python, but to use the Federated Learning API, you won’t need to concern yourself with the details of this representation. The computations are represented in your Python code as objects of type tff.Computation, which for the most part you can treat as opaque Python callables.

In the tutorials, you will invoke those federated computations as if they were regular Python functions, to be executed locally. However, TFF is designed to express federated computations in a manner agnostic to most aspects of the execution environment, so that they can potentially be deployable to, e.g., groups of devices running Android, or to clusters in a datacenter. Again, the main consequence of this are strong assumptions about serialization. In particular, when you invoke one of the build_... methods described below the computation is fully serialized.

Modeling state

TFF is a functional programming environment, yet many processes of interest in federated learning are stateful. For example, a training loop that involves multiple rounds of federated model averaging is an example of what we could classify as a stateful process. In this process, the state that evolves from round to round includes the set of model parameters that are being trained, and possibly additional state associated with the optimizer (e.g., a momentum vector).

Since TFF is functional, stateful processes are modeled in TFF as computations that accept the current state as an input and then provide the updated state as an output. In order to fully define a stateful process, one also needs to specify where the initial state comes from (otherwise we cannot bootstrap the process). This is captured in the definition of the helper class tff.templates.IterativeProcess, with the 2 properties initialize and next corresponding to the initialization and iteration, respectively.

Available builders

At the moment, TFF provides two builder functions that generate the federated computations for federated training and evaluation:

tff.learning.build_federated_averaging_processtakes a model function and a client optimizer, and returns a statefultff.templates.IterativeProcess.tff.learning.build_federated_evaluationtakes a model function and returns a single federated computation for federated evaluation of models, since evaluation is not stateful.

Datasets

Architectural assumptions

Client selection

In the typical federated learning scenario, we have a large population of potentially hundreds of millions of client devices, of which only a small portion may be active and available for training at any given moment (for example, this may be limited to clients that are plugged in to a power source, not on a metered network, and otherwise idle). Generally, the set of clients available to participate in training or evaluation is outside of the developer’s control. Furthermore, as it’s impractical to coordinate millions of clients, a typical round of training or evaluation will include only a fraction of the available clients, which may be sampled at random.

The key consequence of this is that federated computations, by design, are expressed in a manner that is oblivious to the exact set of participants; all processing is expressed as aggregate operations on an abstract group of anonymous clients, and that group might vary from one round of training to another. The actual binding of the computation to the concrete participants, and thus to the concrete data they feed into the computation, is thus modeled outside of the computation itself.

In order to simulate a realistic deployment of your federated learning code, you will generally write a training loop that looks like this:

trainer = tff.learning.build_federated_averaging_process(...)state = trainer.initialize()federated_training_data = ...def sample(federate_data): return ...while True: data_for_this_round = sample(federated_training_data) state, metrics = trainer.next(state, data_for_this_round)

In order to facilitate this, when using TFF in simulations, federated data is accepted as Python lists, with one element per participating client device to represent that device’s local tf.data.Dataset.

Abstract interfaces

In order to standardize dealing with simulated federated data sets, TFF provides an abstract interface tff.simulation.datasets.ClientData, which allows one to enumerate the set of clients, and to construct a tf.data.Dataset that contains the data of a particular client. Those tf.data.Datasets can be fed directly as input to the generated federated computations in eager mode.

It should be noted that the ability to access client identities is a feature that’s only provided by the datasets for use in simulations, where the ability to train on data from specific subsets of clients may be needed (e.g., to simulate the diurnal avaiablity of different types of clients). The compiled computations and the underlying runtime do not involve any notion of client identity. Once data from a specific subset of clients has been selected as an input, e.g., in a call to tff.templates.IterativeProcess.next, client identities no longer appear in it.

Available data sets

We have dedicated the namespace tff.simulation.datasets for datasets that implement the tff.simulation.datasets.ClientData interface for use in simulations, and seeded it with datasets to support the image classification and text generation tutorials. We’d like to encourage you to contribute your own datasets to the platform.

Federated Core

This part of the tutorial introduces the core layer of TFF that serves as a foundation for Federated Learning, and possible future non-learning federated algorithms.

For a gentle introduction to Federated Core, please read the following tutorials, as they introduce some of the fundamental concepts by example and demonstrate step-by-step the construction of a simple federated averaging algorithm.

- Custom Federated Algorithms, Part 1: Introduction to the Federated Core.

- Custom Federated Algorithms, Part 2: Implementing Federated Averaging.

We would also encourage you to familiarize yourself with Federated Learning and the associated tutorials on image classification and text generation, as the uses of the Federated Core API (FC API) for federated learning provide important context for some of the choices we’ve made in designing this layer.

Overview

Goals, Intended Uses, and Scope

Federated Core (FC) is best understood as a programming environment for implementing distributed computations, i.e., computations that involve multiple computers (mobile phones, tablets, embedded devices, desktop computers, sensors, database servers, etc.) that may each perform non-trivial processing locally, and communicate across the network to coordinate their work.

The term distributed is very generic, and TFF does not target all possible types of distributed algorithms out there, so we prefer to use the less generic term federated computation to describe the types of algorithms that can be expressed in this framework.

While defining the term federated computation in a fully formal manner is outside the scope of this document, think of the types of algorithms you might see expressed in pseudocode in a research publication that describes a new distributed learning algorithm.

The goal of FC, in a nusthell, is to enable similarly compact representation, at a similar pseudocode-like level of abstraction, of program logic that is not pseudocode, but rather, that’s executable in a variety of target environments.

The key defining characteristic of the kinds of algorithms that FC is designed to express is that actions of system participants are described in a collective manner. Thus, we tend to talk about each device locally transforming data, and the devices coordinating work by a centralized coordinator broadcasting, collecting, or aggregating their results.

While TFF has been designed to be able to go beyond simple client-server architectures, the notion of collective processing is fundamental. This is due to the origins of TFF in federated learning, a technology originally designed to support computations on potentially sensitive data that remains under control of client devices, and that may not be simply downloaded to a centralized location for privacy reasons. While each client in such systems contributes data and processing power towards computing a result by the system (a result that we would generally expect to be of value to all the participants), we also strive at preserving each client’s privacy and anonymity.

Thus, while most frameworks for distributed computing are designed to express processing from the perspective of individual participants – that is, at the level of individual point-to-point message exchanges, and the interdependence of the participant’s local state transitions with incoming and outgoing messages, TFF’s Federated Core is designed to describe the behavior of the system from the global system-wide perspective (similarly to, e.g., MapReduce).

Consequently, while distributed frameworks for general purposes may offer operations such as send and receive as building blocks, FC provides building blocks such as tff.federated_sum, tff.federated_reduce, or tff.federated_broadcast that encapsulate simple distributed protocols.

Language

Python Interface

TFF uses an internal language to represent federated computations, the syntax of which is defined by the serializable representation in computation.proto. Users of FC API generally won’t need to interact with this language directly, though. Rather, we provide a Python API (the tff namespace) that wraps arounds it as a way to define computations.

Specifically, TFF provides Python function decorators such as tff.federated_computation that trace the bodies of the decorated functions, and produce serialized representations of the federated computation logic in TFF’s language. A function decorated with tff.federated_computation acts as a carrier of such serialized representation, and can embed it as a building block in the body of another computation, or execute it on demand when invoked.

Here’s just one example; more examples can be found in the custom algorithms tutorials.

@tff.federated_computation(tff.type_at_clients(tf.float32))def get_average_temperature(sensor_readings): return tff.federated_mean(sensor_readings)

Readers familiar with non-eager TensorFlow will find this approach analogous to writing Python code that uses functions such as tf.add or tf.reduce_sum in a section of Python code that defines a TensorFlow graph. Albeit the code is technically expressed in Python, its purpose is to construct a serializable representation of a tf.Graph underneath, and it is the graph, not the Python code, that is internally executed by the TensorFlow runtime. Likewise, one can think of tff.federated_mean as inserting a federated op into a federated computation represented by get_average_temperature.

A part of the reason for FC defining a language has to do with the fact that, as noted above, federated computations specify distributed collective behaviors, and as such, their logic is non-local. For example, TFF provides operators, inputs and outputs of which may exist in different places in the network.

This calls for a language and a type system that capture the notion of distributedness.

Type System

Federated Core offers the following categories of types. In describing these types, we point to the type constructors as well as introduce a compact notation, as it’s a handy way or describing types of computations and operators.

First, here are the categories of types that are conceptually similar to those found in existing mainstream languages:

- Tensor types (

tff.TensorType). Just as in TensorFlow, these havedtypeandshape. The only difference is that objects of this type are not limited totf.Tensorinstances in Python that represent outputs of TensorFlow ops in a TensorFlow graph, but may also include units of data that can be produced, e.g., as an output of a distributed aggregation protocol. Thus, the TFF tensor type is simply an abstract version of a concrete physical representation of such type in Python or TensorFlow. TFF’sTensorTypescan be stricter in their (static) treatment of shapes than TensorFlow. For example, TFF’s typesystem treats a tensor with unknown rank as assignable from any other tensor of the samedtype, but not assignable to any tensor with fixed rank. This treatment prevents certain runtime failures (e.g., attempting to reshape a tensor of unknown rank into a shape with incorrect number of elements), at the cost of greater strictness in what computations TFF accepts as valid. The compact notation for tensor types isdtypeordtype[shape]. For example,int32andint32[10]are the types of integers and int vectors, respectively. - Sequence types (

tff.SequenceType). These are TFF’s abstract equivalent of TensorFlow’s concrete concept oftf.data.Datasets. Elements of sequences can be consumed in a sequential manner, and can include complex types. The compact representation of sequence types isT*, whereTis the type of elements. For exampleint32*represents an integer sequence. - Named tuple types (

tff.StructType). These are TFF’s way of constructing tuples and dictionary-like structures that have a predefined number of elements with specific types, named or unnamed. Importantly, TFF’s named tuple concept encompasses the abstract equivalent of Python’s argument tuples, i.e., collections of elements of which some, but not all are named, and some are positional. The compact notation for named tuples is<n_1=T_1, ..., n_k=T_k>, wheren_kare optional element names, andT_kare element types. For example,<int32,int32>is a compact notation for a pair of unnamed integers, and<X=float32,Y=float32>is a compact notation for a pair of floats namedXandYthat may represent a point on a plane. Tuples can be nested as well as mixed with other types, e.g.,<X=float32,Y=float32>*would be a compact notation for a sequence of points. - Function types (

tff.FunctionType). TFF is a functional programming framework, with functions treated as first-class values. Functions have at most one argument, and exactly one result. The compact notation for functions is(T -> U), whereTis the type of an argument, andUis the type of the result, or( -> U)if there’s no argument (although no-argument functions are a degenerate concept that exists mostly just at the Python level). For example(int32* -> int32)is a notation for a type of functions that reduce an integer sequence to a single integer value.

The following types address the distributed systems aspect of TFF computations. As these concepts are somewhat unique to TFF, we encourage you to refer to the custom algorithms tutorial for additional commentary and examples.

- Placement type. This type is not yet exposed in the public API other than in the form of 2 literals

tff.SERVERandtff.CLIENTSthat you can think of as constants of this type. It is used internally, however, and will be introduced in the public API in future releases. The compact representation of this type isplacement. A placement represents a collective of system participants that play a particular role. The initial release is targeting client-server computations, in which there are 2 groups of participants: clients and a server (you can think of the latter as a singleton group). However, in more elaborate architectures, there could be other roles, such as intermediate aggregators in a multi-tiered system, who might be performing different types of aggregation, or use different types of data compression/decompression than those used by either the server or the clients. The primary purpose of defining the notion of placements is as a basis for defining federated types. - Federated types (

tff.FederatedType). A value of a federated type is one that is hosted by a group of system participants defined by a specific placement (such astff.SERVERortff.CLIENTS). A federated type is defined by the placement value (thus, it is a dependent type), the type of member constituents (what kind of content each of the participants is locally hosting), and the additional bitall_equalthat specifies whether all participants are locally hosting the same item. The compact notation for federated type of values that include items (member constituents) of typeT, each hosted by group (placement)GisT@Gor{T}@Gwith theall_equalbit set or not set, respectively. For example:{int32}@CLIENTSrepresents a federated value that consists of a set of potentially distinct integers, one per client device. Note that we are talking about a single federated value as encompassing multiple items of data that appear in multiple locations across the network. One way to think about it is as a kind of tensor with a “network” dimension, although this analogy is not perfect because TFF does not permit random access to member constituents of a federated value.{<X=float32,Y=float32>*}@CLIENTSrepresents a federated data set, a value that consists of multiple sequences ofXYcoordinates, one sequence per client device.<weights=float32[10,5],bias=float32[5]>@SERVERrepresents a named tuple of weight and bias tensors at the server. Since we’ve dropped the curly braces, this indicates theall_equalbit is set, i.e., there’s only a single tuple (regardless of how many server replicas there might be in a cluster hosting this value).

Building Blocks

The language of Federated Core is a form of lambda-calculus, with a few additional elements.

It provides the following programing abstractions currently exposed in the public API:

- TensorFlow computations (

tff.tf_computation). These are sections of TensorFlow code wrapped as reusable components in TFF using thetff.tf_computationdecorator. They always have functional types, and unlike functions in TensorFlow, they can take structured parameters or return structured results of a sequence type. Here’s one example, a TF computation of type(int32* -> int)that uses thetf.data.Dataset.reduceoperator to compute a sum of integers:

@tff.tf_computation(tff.SequenceType(tf.int32))def add_up_integers(x): return x.reduce(np.int32(0), lambda x, y: x + y)

Intrinsics or federated operators (tff.federated_...). This is a library of functions such as tff.federated_sum or tff.federated_broadcast that constitute the bulk of FC API, most of which represent distributed communication operators for use with TFF.

We refer to these as intrinsics because, somewhat like intrinsic functions, they are an open-ended, extensible set of operators that are understood by TFF, and compiled down into lower-level code.

Most of these operators have parameters and results of federated types, and most are templates that can be applied to various kinds of data.

For example, tff.federated_broadcast can be thought of as a template operator of a functional type T@SERVER -> T@CLIENTS.

Lambda expressions (tff.federated_computation). A lambda expression in TFF is the equivalent of a lambda or def in Python; it consists of the parameter name, and a body (expression) that contains references to this parameter.

In Python code, these can be created by decorating Python functions with tff.federated_computation and defining an argument.

Here’s an example of a lambda expression we’ve already mentioned earlier:

@tff.federated_computation(tff.type_at_clients(tf.float32))def get_average_temperature(sensor_readings): return tff.federated_mean(sensor_readings)

Placement literals. For now, only tff.SERVER and tff.CLIENTS to allow for defining simple client-server computations.

Function invocations (__call__). Anything that has a functional type can be invoked using the standard Python __call__ syntax. The invocation is an expression, the type of which is the same as the type of the result of the function being invoked.

For example:

add_up_integers(x)represents an invocation of the TensorFlow computation defined earlier on an argumentx. The type of this expression isint32.tff.federated_mean(sensor_readings)represents an invocation of the federated averaging operator onsensor_readings. The type of this expression isfloat32@SERVER(assuming context from the example above).

Forming tuples and selecting their elements. Python expressions of the form [x, y], x[y], or x.y that appear in the bodies of functions decorated with tff.federated_computation.

Using TFF for Federated Learning Research

Overview

TFF is an extensible, powerful framework for conducting federated learning (FL) research by simulating federated computations on realistic proxy datasets. This page describes the main concepts and components that are relevant for research simulations, as well as detailed guidance for conducting different kinds of research in TFF.

The Typical Structure of Research Code in TFF

A research FL simulation implemented in TFF typically consists of three main types of logic.

- Individual pieces of TensorFlow code, typically

tf.functions, that encapsulate logic that runs in a single location (e.g., on clients or on a server). This code is typically written and tested without anytff.*references, and can be re-used outside of TFF. For example, the client training loop in Federated Averaging is implemented at this level. - TensorFlow Federated orchestration logic, which binds together the individual

tf.functions from 1. by wrapping them astff.tf_computations and then orchestrating them using abstractions liketff.federated_broadcastandtff.federated_meaninside atff.federated_computation. See, for example, this orchestration for Federated Averaging. - An outer driver script that simulates the control logic of a production FL system, selecting simulated clients from a dataset and then executing federated computations defined in 2. on those clients. For example, a Federated EMNIST experiment driver.

Federated learning datasets

TensorFlow federated hosts multiple datasets that are representative of the characteristics of real-world problems that could be solved with federated learning.Note: These datasets can also be consumed by any Python-based ML framework as Numpy arrays, as documented in the ClientData API.

Datasets include:

- StackOverflow. A realistic text dataset for language modeling or supervised learning tasks, with 342,477 unique users with 135,818,730 examples (sentences) in the training set.

- Federated EMNIST. A federated pre-processing of the EMNIST character and digit dataset, where each client corresponds to a different writer. The full train set contains 3400 users with 671,585 examples from 62 labels.

- Shakespeare. A smaller char-level text dataset based on the complete works of William Shakespeare. The data set consists of 715 users (characters of Shakespeare plays), where each example corresponds to a contiguous set of lines spoken by the character in a given play.

- CIFAR-100. A federated partitioning of the CIFAR-100 dataset across 500 training clients and 100 test clients. Each client has 100 unique examples. The partitioning is done in a way to create more realistic heterogeneity between clients. For more details, see the API.

- Google Landmark v2 dataset The dataset consists of photos of various world landmarks, with images grouped by photographer to achieve a federated partitioning of the data. Two flavors of dataset are available: a smaller dataset with 233 clients and 23080 images, and a larger dataset with 1262 clients and 164172 images.

- CelebA A dataset of examples (image and facial attributes) of celebrity faces. The federated dataset has each celebrity’s examples grouped together to form a client. There are 9343 clients, each with at least 5 examples. The dataset can be split into train and test groups either by clients or by examples.

- iNaturalist A dataset consists of photos of various species. The dataset contains 120,300 images for 1,203 species. Seven flavors of the dataset are available. One of them is grouped by the photographer and it consists of 9257 clients. The rest of the datasets are grouped by the geo location where the photo was taken. These six flavors of the dataset consists of 11 – 3,606 clients.

High performance simulations

While the wall-clock time of an FL simulation is not a relevant metric for evaluating algorithms (as simulation hardware isn’t representative of real FL deployment environments), being able to run FL simulations quickly is critical for research productivity. Hence, TFF has invested heavily in providing high-performance single and multi-machine runtimes. Documentation is under development, but for now see the High-performance simulations with TFF tutorial, instructions on TFF simulations with accelerators, and instructions on setting up simulations with TFF on GCP. The high-performance TFF runtime is enabled by default.

TFF for different research areas

Federated optimization algorithms

Research on federated optimization algorithms can be done in different ways in TFF, depending on the desired level of customization.

A minimal stand-alone implementation of the Federated Averaging algorithm is provided here. The code includes TF functions for local computation, TFF computations for orchestration, and a driver script on the EMNIST dataset as an example. These files can easily be adapted for customized applciations and algorithmic changes following detailed instructions in the README.

A more general implementation of Federated Averaging can be found here. This implementation allows for more sophisticated optimization techniques, including the use of different optimizers on both the server and client. Other federated learning algorithms, including federated k-means clustering, can be found here.

Model and update compression

TFF uses the tensor_encoding API to enable lossy compression algorithms to reduce communicatation costs between the server and clients. For an example of training with server-to-client and client-to-server compression using Federated Averaging algorithm, see this experiment.

To implement a custom compression algorithm and apply it to the training loop, you can:

- Implement a new compression algorithm as a subclass of

EncodingStageInterfaceor its more general variant,AdaptiveEncodingStageInterfacefollowing this example. - Construct your new

Encoderand specialize it for model broadcast or model update averaging. - Use those objects to build the entire training computation.

Differential privacy

TFF is interoperable with the TensorFlow Privacy library to enable research in new algorithms for federated training of models with differential privacy. For an example of training with DP using the basic DP-FedAvg algorithm and extensions, see this experiment driver.

If you want to implement a custom DP algorithm and apply it to the aggregate updates of federated averaging, you can implement a new DP mean algorithm as a subclass of tensorflow_privacy.DPQuery and create a tff.aggregators.DifferentiallyPrivateFactory with an instance of your query. An example of implementing the DP-FTRL algorithm can be found here

Federated GANs (described below) are another example of a TFF project implementing user-level differential privacy (e.g., here in code).

Robustness and attacks

TFF can also be used to simulate the targeted attacks on federated learning systems and differential privacy based defenses considered in Can You Really Back door Federated Learning?. This is done by building an iterative process with potentially malicious clients (see build_federated_averaging_process_attacked). The targeted_attack directory contains more details.

- New attacking algorithms can be implemented by writing a client update function which is a Tensorflow function, see

ClientProjectBoostfor an example. - New defenses can be implemented by customizing ‘tff.utils.StatefulAggregateFn’ which aggregates client outputs to get a global update.

For an example script for simulation, see emnist_with_targeted_attack.py.

Generative Adversarial Networks

GANs make for an interesting federated orchestration pattern that looks a little different than standard Federated Averaging. They involve two distinct networks (the generator and the discriminator) each trained with their own optimization step.

TFF can be used for research on federated training of GANs. For example, the DP-FedAvg-GAN algorithm presented in recent work is implemented in TFF. This work demonstrates the effectiveness of combining federated learning, generative models, and differential privacy.

Personalization

Personalization in the setting of federated learning is an active research area. The goal of personalization is to provide different inference models to different users. There are potentially different approaches to this problem.

One approach is to let each client fine-tune a single global model (trained using federated learning) with their local data. This approach has connections to meta-learning, see, e.g., this paper. An example of this approach is given in emnist_p13n_main.py. To explore and compare different personalization strategies, you can:

- Define a personalization strategy by implementing a

tf.functionthat starts from an initial model, trains and evaluates a personalized model using each client’s local datasets. An example is given bybuild_personalize_fn. - Define an

OrderedDictthat maps strategy names to the corresponding personalization strategies, and use it as thepersonalize_fn_dictargument intff.learning.build_personalization_eval.

Another approach is to avoid training a fully global model by training part of a model entirely locally. An instantiation of this approach is described in this blog post. This approach is also connected to meta learning, see this paper. To explore partially local federated learning, you can:

- Check out the tutorial for a complete code example applying Federated Reconstruction and follow-up exercises.

- Create a partially local training process using

tff.learning.reconstruction.build_training_process, modifyingdataset_split_fnto customize process behavior.