There are many occasions where visual content delivers more engaging and enriching experiences than the typical data-driven approach. Using the IBM Watson Visual Recognition service, you can analyze images and extract the objects in them to gather quantitative data.

In this tutorial, you’ll use Watson Visual Recognition to build a worldwide campaign and experience the power of visually driven data stories. Along the way, you’ll see how the service’s capabilities support an interactive, image-driven storyline.

Note: This blog post was originally published in IBM Bluemix.

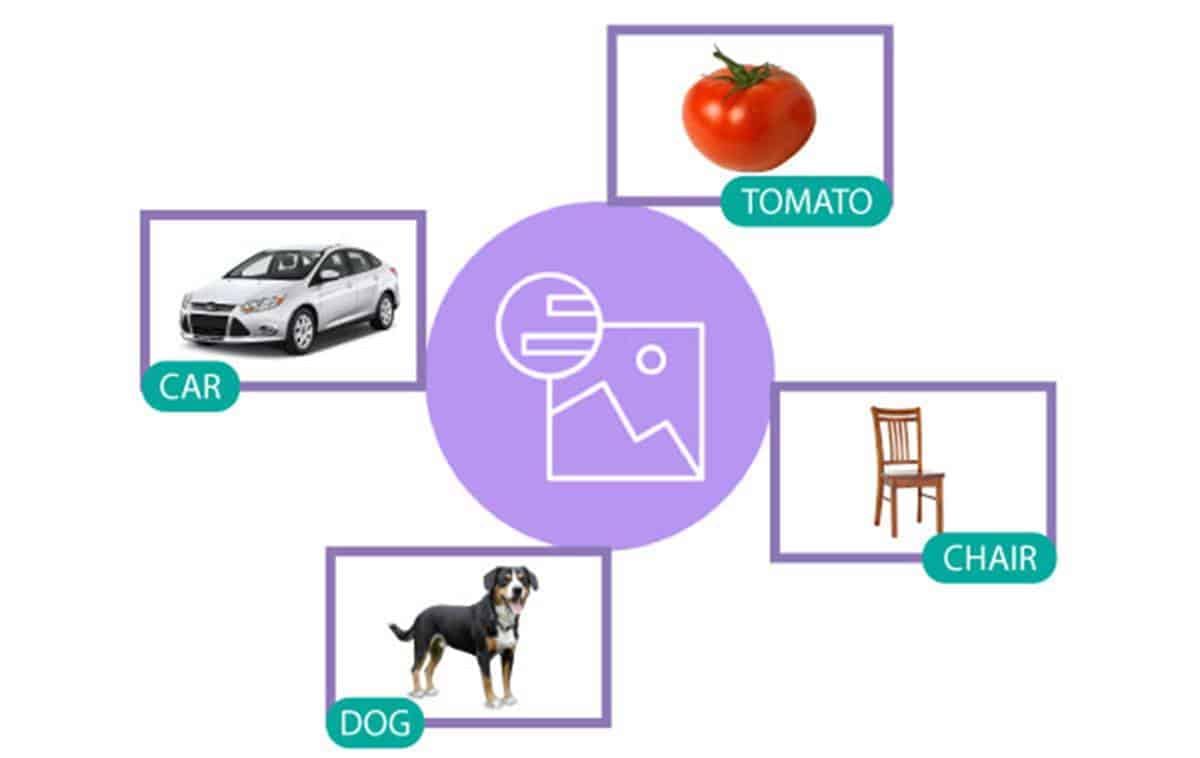

Overview of Watson Visual Recognition

The Watson Visual Recognition API is an intelligent system that uses image recognition to identify and tag objects in images. The service has built-in default classifiers that can detect common objects, along with other things in an image that people recognize from daily life.

Additionally, you can build custom classifiers by uploading training images, so that your application can detect images and tag each one with one of your custom classes.
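To make the idea of tagging concrete, here is a minimal sketch of how an app might pull tags out of a classification result. The response shape (an `images` array holding `classifiers`, each with a `classes` list of `class` and `score` pairs) is an assumption modeled on the service's JSON output, and `extractTags` is a hypothetical helper, so check both against the live API before relying on them.

```javascript
// Assumed response shape; verify it against the actual API output.
const sampleResponse = {
  images: [
    {
      classifiers: [
        {
          classifier_id: "default",
          name: "default",
          classes: [
            { class: "golden retriever", score: 0.92 },
            { class: "dog", score: 0.98 },
            { class: "animal", score: 0.99 },
            { class: "tan color", score: 0.61 }
          ]
        }
      ]
    }
  ]
};

// Hypothetical helper: return every tag at or above minScore, highest score first.
function extractTags(response, minScore = 0.5) {
  const tags = [];
  for (const image of response.images || []) {
    for (const classifier of image.classifiers || []) {
      for (const entry of classifier.classes || []) {
        if (entry.score >= minScore) {
          tags.push({
            label: entry.class,
            score: entry.score,
            classifier: classifier.name
          });
        }
      }
    }
  }
  return tags.sort((a, b) => b.score - a.score);
}

console.log(extractTags(sampleResponse, 0.9).map((t) => t.label));
// prints [ 'animal', 'dog', 'golden retriever' ]
```

Raising or lowering `minScore` trades precision for coverage, which matters when the tags feed a public map.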

You can try out the Visual Recognition service demo to witness the capabilities of this system.

Converting Image to Data: Storytelling with a Twist

In the field of exploratory data analysis, extracting the relevant tags from an image can give you a lot of valuable data. This data can be the fuel for building visually driven stories that offer a unique perspective to enhance your understanding of the world.

Let’s take a hypothetical scenario. Imagine that you are living in a world where the dog population has declined for some unfortunate reason. Animal extinction is not a new phenomenon. But how do you deal with such a tragic event, especially when it comes to dogs, widely considered to be man’s best friend? Something needs to be done about this.

Dog Tracker: An interactive, crowd collaborated, visual repository of dog breeds

To raise awareness of this issue, you can leverage the power of the Internet to launch a worldwide virtual preservation campaign for dogs.

First, you want to spread awareness about this alarming situation and encourage people to snap and share a picture of a dog wherever they discover one. In this way, you build a worldwide map of all the remaining dog breeds, and everybody can contribute to this campaign.

Sounds a bit dramatic? Well, this may seem far-fetched, but set the theatrics aside for now and focus on building this campaign with IBM Bluemix services.

Approach to Building the Dog Tracker Online Campaign

If you are a developer, you might already be thinking of building an app to host this campaign. At a bare minimum, you need an app to upload dog images and display the map. Instead of relying on users to label the breed of the dogs that they discover, you can use Watson Visual Recognition. Its built-in classifiers will play a key role in identifying the dog breeds from all the submitted images.

Since this will be a global collaborative effort hosted on the Internet, you need:

Image storage service: To store all the submitted images and make them accessible for visual identification of the dog breed.

Server component: To host the app and orchestrate the submission, tagging and display of images.

IBM Cloud Object Storage is a Bluemix service that can be leveraged to house data in one common location. Application developers can upload files and can run Bluemix services that access those files for further processing and analysis. For Dog Tracker, you can use this to store and access the dog images submitted by users.

Instead of hosting a full-blown server, you can take advantage of microservices. PubNub BLOCKS offers a lightweight microservice that can be spun up within seconds and programmed to run JavaScript-based business logic while interacting with third-party REST services. PubNub BLOCKS is part of the PubNub global data stream network, which powers many data-driven applications around the Web.

Note: PubNub BLOCKS has since been rebranded as PubNub Functions.

Getting Started with Dog Tracker

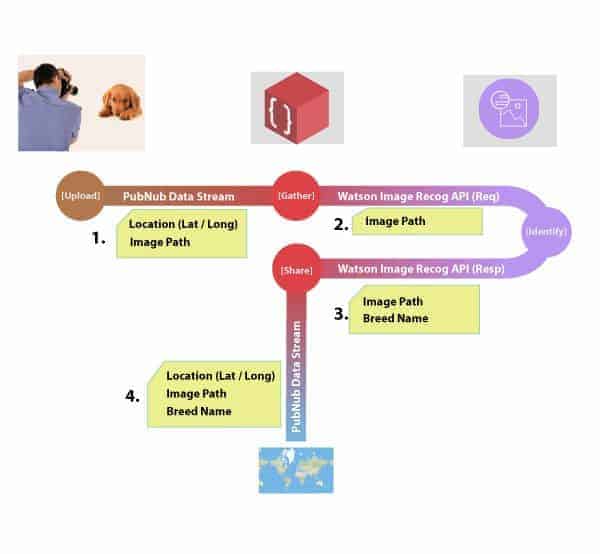

Given the decision to use IBM Cloud Object Storage and PubNub BLOCKS, the app works roughly as follows.


Users discover a dog, take a snapshot and upload it to IBM Cloud Object Storage via the app. Along with uploading the image, the app triggers the PubNub BLOCK, passing it the location of the image in object storage. The BLOCK then orchestrates the identification of the dog breed using Watson Visual Recognition. Finally, it retrieves the dog breed name and broadcasts it to all the user apps.

PubNub BLOCKS can also keep track of the dog images uploaded over time by maintaining an internal data store of the breeds seen so far.
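As a rough illustration of that bookkeeping, the sketch below keeps a running count per breed. It uses an in-memory `Map` as a stand-in for the BLOCK's data store, and `createBreedTally` is a hypothetical helper rather than the project's actual code. In a real BLOCK, the counts would need to live in the platform's persistent store to survive between invocations.

```javascript
// Hypothetical in-memory stand-in for the BLOCK's internal data store.
function createBreedTally() {
  const counts = new Map();
  return {
    // Record one sighting and return the updated count for that breed.
    record(breed) {
      // Normalize so "Beagle" and "beagle " count as the same breed.
      const key = breed.trim().toLowerCase();
      counts.set(key, (counts.get(key) || 0) + 1);
      return counts.get(key);
    },
    // Return a plain-object copy of the current counts.
    snapshot() {
      return Object.fromEntries(counts);
    }
  };
}

const tally = createBreedTally();
tally.record("Beagle");
tally.record("beagle ");
tally.record("Pug");
console.log(tally.snapshot()); // prints { beagle: 2, pug: 1 }
```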

Project Source

Take a look at the project source at these GitHub links. Based on the architecture diagram, the software for this campaign is built around a Web UI. The source code is divided into two parts:

AppUI – The user-facing application for uploading images and watching the campaign progress.

Block – The business logic code that runs in the PubNub BLOCK. It coordinates with the app and the Watson Visual Recognition API to tag every image with the name of the dog breed and update the campaign map.

To run this campaign, you will need a Node.js server to host the AppUI. You will also need to configure IBM Cloud Object Storage and Watson Visual Recognition (both available under Bluemix services) and PubNub BLOCKS. Refer to the README file for the configuration steps to set up your Bluemix workspace and PubNub BLOCK.

Visit the Bluemix registration page and PubNub service page to begin. Both services offer a free-tier account for trying out their offerings.

Launch the Campaign

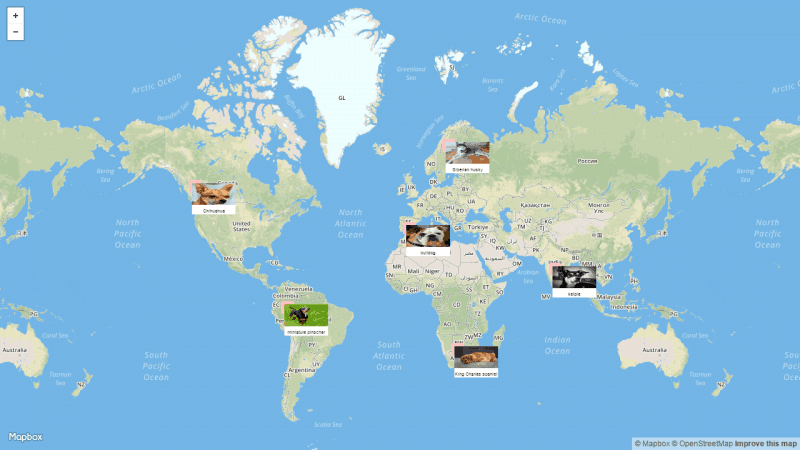

To launch the campaign, make sure that all the Bluemix services and the PubNub BLOCK are running, then fire up your Node.js server to serve the campaign map to your browser. You can click anywhere on the world map and upload a dog image. This emulates a user taking a snapshot of a dog and uploading it along with the GPS location.

The entire workflow of this campaign is driven by the PubNub Data Stream Network. When the user uploads a dog image, the app initiates a data stream over a PubNub channel. Initially, this data stream contains only the location of the image and the user’s GPS location. As it passes through the BLOCK, the dog breed name is appended to it, and the result is broadcast to all the other app instances.

In other words, the BLOCK intercepts the data stream, calls the Watson Visual Recognition API to get the dog breed, appends that data to the stream and broadcasts it to all the app instances.
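The enrichment step can be sketched as a pure function that takes the incoming message plus the classes returned by the recognition call and produces the message to broadcast. Everything named here is an assumption for illustration: the message fields (`imageUrl`, `lat`, `lng`), the `pickBreed` heuristic of skipping generic labels, and the list of generic labels itself. The actual BLOCK code in the project may choose the breed differently, and the network call and PubNub wiring are left out on purpose.

```javascript
// Assumed generic labels that describe a dog without naming a breed.
const GENERIC_LABELS = new Set(["dog", "animal", "mammal", "domestic animal"]);

// Heuristic (an assumption): the highest-scoring non-generic label is the breed.
function pickBreed(classes) {
  const candidates = classes
    .filter((c) => !GENERIC_LABELS.has(c.class.toLowerCase()))
    .sort((a, b) => b.score - a.score);
  return candidates.length > 0 ? candidates[0].class : "unknown";
}

// Return a copy of the message with the breed appended; the input is not mutated.
function appendBreed(message, classes) {
  return { ...message, breed: pickBreed(classes) };
}

// Hypothetical incoming message: image location plus the user's GPS position.
const incoming = {
  imageUrl: "https://example.com/dogs/1.jpg",
  lat: 48.85,
  lng: 2.35
};

const outgoing = appendBreed(incoming, [
  { class: "dog", score: 0.98 },
  { class: "beagle", score: 0.87 },
  { class: "basset", score: 0.55 }
]);

console.log(outgoing.breed); // prints beagle
```

Keeping this step free of I/O makes it easy to test separately from the recognition call and the broadcast.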

Long Live the Dogs

You now have a worldwide campaign running. You can clearly see which dog breeds are still thriving.

One of the ways in which this campaign could help, apart from building a visual story, would be to derive some statistical data from images. For example, based on the user’s activity and the identified dog breed, you can estimate how many dogs of a particular breed could still be alive and predict the population density around different regions. That way, you can perhaps use this campaign to build a more enriching statistical analysis tool overlaid on the visual story.
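As a starting point for that kind of analysis, the sketch below groups sightings into square latitude/longitude grid cells and counts breeds per cell. The sighting fields and the `countByRegion` helper are hypothetical, and the counts are sightings rather than dogs: the same dog may be photographed more than once, so treat the output as a rough signal and not a population estimate.

```javascript
// Hypothetical helper: count sightings per breed in grid cells of cellDegrees per side.
function countByRegion(sightings, cellDegrees = 10) {
  const regions = {};
  for (const { lat, lng, breed } of sightings) {
    // Snap each coordinate down to the south-west corner of its grid cell.
    const cellLat = Math.floor(lat / cellDegrees) * cellDegrees;
    const cellLng = Math.floor(lng / cellDegrees) * cellDegrees;
    const key = `${cellLat},${cellLng}`;
    regions[key] = regions[key] || {};
    regions[key][breed] = (regions[key][breed] || 0) + 1;
  }
  return regions;
}

// Made-up sample data for illustration only.
const sightings = [
  { lat: 48.85, lng: 2.35, breed: "beagle" },
  { lat: 45.76, lng: 4.84, breed: "beagle" },
  { lat: 40.71, lng: -74.0, breed: "pug" }
];

console.log(countByRegion(sightings));
// prints { '40,0': { beagle: 2 }, '40,-80': { pug: 1 } }
```

Equal-degree cells cover less ground near the poles than near the equator, so a real density estimate would need to normalize by cell area.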

Conclusion

Adding some zip to images is only one way to use the Watson Visual Recognition service. There are more interesting use cases where it can be applied to solve real-world problems.

Whether you’re classifying different forms of an object or separating a good object from a bad one based on visual appearance, these are all serious use cases where an automated recognition process based on Watson Visual Recognition can help you optimize your processes in faster, better and more efficient ways.

Check out the Watson Visual Recognition Web site to explore more about its capabilities.