What is Big Data?

Big data refers to the huge and ever-growing accumulation of data generated by diverse sources within and outside an organization. Traditionally, organizations have treated data in a siloed way, strictly partitioning it by business function. For example, ERP data was handled by the ERP system, and customer feedback data was handled by the customer relationship teams. Big data management systems span many data sources across multiple enterprise applications, business units, corporate functions, and external feeds to build a larger canvas of information and derive better knowledge and wisdom for the business as a whole.

Building such a large data canvas is a necessity for a 21st-century enterprise, where data is generated not only internally but also by external traffic. This includes web applications, mobile apps, social media feeds, and the newer breed of API-driven applications. Such heterogeneous data traffic carries valuable inputs with immense potential to be harnessed. The term “Big Data” has therefore evolved as a shorthand for this complex amalgamation of data, which is characterized by the four Vs: Volume, Velocity, Variety, and Veracity.

Early Approaches to Big Data Management: Handling Volume

One of the earliest signs of the transition from ordinary data to Big Data is the data-handling capacity of a typical computer. When the influx of data exceeds what a computer’s CPU and memory can process at a reasonable speed, it is safe to assume that one is dealing with a high volume of data.

Therefore, in many organizations, the initial approach to handling volume is to increase hardware capacity through vertical scaling (scaling up), that is, adding more CPUs and memory to augment the processing capacity of the servers. The alternative is horizontal scaling (scaling out), in which data processing workloads are distributed across multiple nodes in a network.

The scale-up option is easy, but it soon hits a ceiling, since a single server can only hold so much CPU and memory. The scale-out option scales much further, because more nodes can keep being added. However, this approach requires additional protocols to orchestrate the parallel processing of data workloads across multiple computers.
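A back-of-envelope calculation makes the difference concrete. The sketch below assumes a hypothetical per-node scan throughput of 200 MB/s and a perfectly even split of work with no coordination overhead; real clusters lose part of this ideal speedup to network and orchestration costs, so treat the numbers as an upper bound.

```python
def scan_time_seconds(data_bytes: float, node_throughput_bps: float, nodes: int = 1) -> float:
    """Ideal time to scan a dataset when the work is split evenly across nodes.

    Assumes perfect parallelism: no coordination, skew, or network overhead.
    """
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    return data_bytes / (node_throughput_bps * nodes)


TB = 10**12             # decimal terabyte, in bytes
MB = 10**6              # decimal megabyte, in bytes
THROUGHPUT = 200 * MB   # hypothetical per-node scan rate, bytes per second

single = scan_time_seconds(1 * TB, THROUGHPUT)             # one server
cluster = scan_time_seconds(1 * TB, THROUGHPUT, nodes=50)  # 50 nodes, scaled out

print(f"1 node:   {single / 60:.1f} minutes")
print(f"50 nodes: {cluster:.0f} seconds")
```

Scaling up corresponds to raising `THROUGHPUT` on one machine, which is bounded by what a single server can hold; scaling out raises `nodes` instead, which is why it depends on the orchestration protocols described above.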

Historically, two approaches evolved to address the growing volume of data: Massively Parallel Processing (MPP) and MapReduce.

Massively Parallel Processing (MPP) Architecture

MPP is a hardware-centric approach in which specialized hardware nodes are deployed and tuned for CPU, storage, and network performance. MPP nodes follow a shared-nothing philosophy: each node works independently with its own computing resources.

In an MPP architecture, high-volume data is broken down into chunks that are distributed across nodes in one of three primary ways: evenly, randomly, or based on a hash key.
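The three distribution strategies can be sketched in a few lines of Python. This is a single-process illustration that assumes rows are dictionaries and "nodes" are simply list buckets; the function name `distribute` and the sample orders are hypothetical, and an actual MPP database applies the same placement logic across separate machines.

```python
import random
import zlib
from collections import defaultdict


def distribute(rows, num_nodes, strategy, key=None, seed=0):
    """Assign each row to one of num_nodes partitions.

    strategy: "even" (round-robin), "random", or "hash" (on row[key]).
    """
    nodes = defaultdict(list)
    rng = random.Random(seed)  # seeded so the random layout is reproducible
    for i, row in enumerate(rows):
        if strategy == "even":
            node = i % num_nodes             # round-robin: equal-sized chunks
        elif strategy == "random":
            node = rng.randrange(num_nodes)  # uniform random placement
        elif strategy == "hash":
            # crc32 is stable across runs, unlike Python's built-in hash() on str,
            # so the same key always lands on the same node.
            node = zlib.crc32(str(row[key]).encode()) % num_nodes
        else:
            raise ValueError(f"unknown strategy: {strategy}")
        nodes[node].append(row)
    return dict(nodes)


orders = [{"order_id": i, "customer": f"c{i % 4}"} for i in range(8)]
for strategy in ("even", "random", "hash"):
    layout = distribute(orders, 3, strategy, key="customer")
    print(strategy, {node: len(rows) for node, rows in sorted(layout.items())})
```

Even distribution keeps chunk sizes balanced, while hash distribution places all rows sharing a key on the same node, which lets joins and aggregations on that key run locally at the cost of possible skew.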

MapReduce Programming Model

MapReduce is more of a software-based approach to processing high-volume data.

It is a programming model that speeds up processing by distributing tasks and running them in parallel. It has two phases:

- Map function: It processes input key-value pairs and generates a set of intermediate key-value pairs.

- Reduce function: It merges all intermediate values associated with the same intermediate key.
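Word counting is the customary illustration of this model. The sketch below runs both phases, plus the grouping ("shuffle") step that sits between them, in a single Python process; the function names are hypothetical, and a framework such as Hadoop would run equivalent map and reduce functions across many machines.

```python
from collections import defaultdict
from itertools import chain


def map_fn(doc_id, text):
    """Map: one input pair (doc_id, text) -> intermediate (word, 1) pairs."""
    for word in text.lower().split():
        yield word, 1


def reduce_fn(word, counts):
    """Reduce: merge all intermediate values that share the same key."""
    return word, sum(counts)


def map_reduce(inputs, mapper, reducer):
    # Map phase: in a cluster, each input split would be mapped on a different node.
    intermediate = chain.from_iterable(mapper(k, v) for k, v in inputs)
    # Shuffle: group intermediate values by key (done by the framework between phases).
    groups = defaultdict(list)
    for key, value in intermediate:
        groups[key].append(value)
    # Reduce phase: each key group is independent, so groups can be reduced in parallel.
    return dict(reducer(k, vs) for k, vs in groups.items())


docs = [("d1", "big data is big"), ("d2", "data at rest")]
print(map_reduce(docs, map_fn, reduce_fn))
# {'big': 2, 'data': 2, 'is': 1, 'at': 1, 'rest': 1}
```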

Both MPP and MapReduce can be used for Big Data processing, and the choice between the two depends on the scaling requirements of the data processing task and the needs of the organization. It is also possible to use both approaches together: since any form of data processing benefits from both hardware and software optimizations, MPP and MapReduce can work in tandem to address these requirements.

MPP and MapReduce are both based on batch processing. This was fine in the initial era of Big Data, when processing was done on voluminous data at rest. The next complexity in Big Data arose in the form of data velocity.

Handling Data Velocity with Stream Processing

High-velocity data is a continuous flow of events emitted from multiple sources, forming a real-time data stream. A surge of tweets or social media posts, or user activity on an e-commerce site, are examples of real-time data streams. Handling streaming data requires a separate stream processing architecture.

Stream processing is used in mission-critical applications to analyze real-time and near-real-time data from never-ending streams of events at massive volume and velocity. To achieve such scale, a stream processing architecture has a few specialized components:

- Message Broker: Stream processing is event-driven. Events are emitted from data sources, and message brokers act as intermediaries that decouple producers from consumers and forward the data to distributed processing endpoints.

- In-memory Data: Stream processing is a low-latency operation that relies on in-memory storage and computing for faster processing.

- Data Pre-Processing Pipelines: Stream processing is used for generating real-time insights through machine learning and business analytics. Therefore it must have highly optimized data pipelines that perform ETL (Extract, Transform, and Load) operations for data pre-processing.
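To make the roles of the broker and in-memory state concrete, here is a single-process sketch. A standard-library `queue.Queue` stands in for the message broker (a production system would use a dedicated broker service), and the consumer keeps per-window event counts in memory. The event fields `ts` and `type` and the 60-second tumbling window are illustrative assumptions.

```python
import queue
from collections import Counter, defaultdict

WINDOW_SECONDS = 60  # hypothetical tumbling-window size


def produce(broker: queue.Queue, events):
    """Producer: publish events to the broker as they occur."""
    for event in events:
        broker.put(event)


def consume(broker: queue.Queue):
    """Consumer: count events per type in fixed, non-overlapping time windows,
    keeping all state in memory."""
    windows = defaultdict(Counter)
    while True:
        try:
            event = broker.get_nowait()
        except queue.Empty:
            break  # a real consumer would block and keep running indefinitely
        window_start = event["ts"] - event["ts"] % WINDOW_SECONDS
        windows[window_start][event["type"]] += 1
    return dict(windows)


events = [
    {"ts": 5, "type": "click"},
    {"ts": 42, "type": "purchase"},
    {"ts": 61, "type": "click"},
    {"ts": 70, "type": "click"},
]
broker = queue.Queue()
produce(broker, events)
windows = consume(broker)
print(windows)
```

Because the producer only talks to the broker, more consumers can be added to share the load without changing the data sources.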

Handling Variety in Data with Different Database Engines

Apart from volume and velocity, variety is another problem. Big Data systems do not evolve organically around a single data schema tailored to a specific application. Instead, they are designed to ingest data from many different systems, which introduces a wide variety of data. For example, an enterprise Big Data system may integrate data from social media feeds, emails, usage reports, customer feedback, employee attendance records, software logs, and so on. These data sources have radically different structures, and some data, such as social media posts, has no structure at all. Accordingly, data can be categorized into three types:

- Structured: Structured data includes data from traditional enterprise applications such as ERP, CRM, and standard APIs. Data in this format has a well-defined tabular schema and is mostly stored in relational databases.

- Semi-Structured: Semi-structured data doesn’t follow a conventional tabular structure, because it has no fixed schema. But it has tags or markers to specify semantic elements. Examples of semi-structured data formats are CSV, JSON, Email, HTML, and XML.

- Unstructured: Unstructured data does not conform to any data schema. Most of the data from the Web is unstructured. Some examples are multimedia files, social media streams, chats, maps, or any user-generated data.
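The practical difference between semi-structured and structured data shows up as soon as records are loaded. In the sketch below, the JSON records (hypothetical sample data) share some fields but not others, so fitting them into a table means deriving a schema after the fact. Taking the union of keys and filling gaps with `None` is just one simple strategy among several.

```python
import json

raw = """
{"id": 1, "user": "ana", "text": "Loving the new app"}
{"id": 2, "user": "raj", "rating": 4}
{"id": 3, "user": "li", "text": "Slow checkout", "tags": ["ux", "payments"]}
"""


def to_rows(json_lines: str):
    """Parse JSON Lines records whose fields vary, and project them onto one
    tabular schema: the union of all keys, with None for missing fields."""
    records = [json.loads(line) for line in json_lines.strip().splitlines()]
    columns = sorted({key for rec in records for key in rec})
    return columns, [[rec.get(col) for col in columns] for rec in records]


columns, rows = to_rows(raw)
print(columns)
for row in rows:
    print(row)
```

Unstructured data, such as free text or images, offers no keys at all, so it needs parsing or feature extraction before even this kind of projection is possible.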

For handling this plethora of variety, a Big Data system must integrate with multiple database engines, such as:

- Relational Database Management Systems (RDBMS): These databases use the relational model to organize data into tables with rows and columns, and are optimized for structured data, such as financial transactions and customer data. Examples include MySQL, Oracle, and Microsoft SQL Server.

- NoSQL Databases: These databases are designed to handle large amounts of unstructured or semi-structured data, such as social media posts, log files, and sensor data. Examples include Cassandra and Amazon DynamoDB.

- Key-Value Stores: These databases store data as key-value pairs, making them highly scalable and optimized for fast read and write access. Redis is one of the most popular key-value based stores.

- Document-Oriented Databases: These databases store data as documents, allowing for flexible and dynamic data modeling. Examples include MongoDB and Couchbase.

Apart from these, there are also more specialized databases optimized for particular data shapes and access patterns, such as columnar databases for large analytical scans and graph databases for highly connected data.

How Much Data is Big Data?

There is no absolute threshold beyond which a quantity of data becomes Big Data. Still, it is natural to think of it in terms of bytes and their multiples, which refers to the volume of data.

However, this volume is a moving target. As each technological innovation makes CPUs faster and storage cheaper, the threshold keeps growing.

It also depends on an organization’s requirements. For a small company, 1 TB of data might be considered Big Data; for a large enterprise, the threshold might be several petabytes.

Irrespective of the exact quantity, any data that exhibits the four Vs and takes substantial resources to process and turn into insights can reasonably be considered Big Data.

Essential Components & Reference Stack for a Big Data Management System

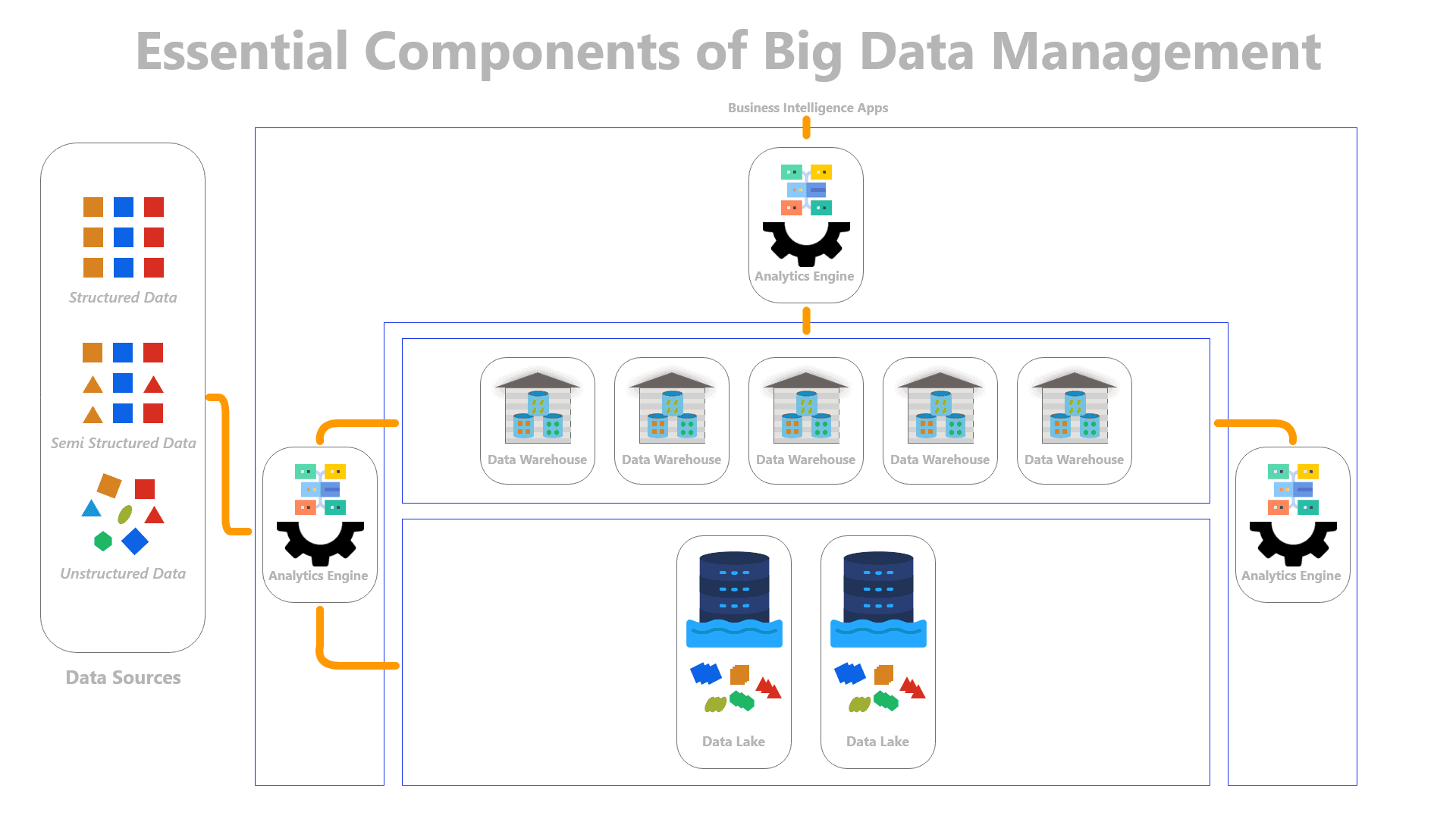

Any Big Data system has a few essential components that form the bedrock of managing the four Vs at scale:

Data Sources: Data sources are the various databases and data stores where the data resides, in structured, semi-structured, or unstructured form. They may not be treated as internal components of a Big Data management system, since they are typically under the control of separate enterprise applications. However, integration with the data sources is vital to making any Big Data system work.

Data Lake: A data lake is a centralized repository where data is stored in its native, raw format. All types of data, ranging from relational and non-relational data to IoT, application, and social media data, can be stored in a data lake. It can hold data of any structural form and is optimized for data ingestion and processing, so that data scientists and analysts can easily access and process data without prior data modeling or transformation.

Data Warehouse: A data warehouse is a large storage system that consolidates data collected from multiple disparate sources into a form that can be queried and analyzed efficiently. Data warehouses are typically organized around specific business areas or subject domains, such as sales or customer information, and the data is usually transformed and aggregated to support specific use cases, such as reporting and analysis.

Analytics Engine: In the context of Big Data, an analytics engine is a software system or platform that is designed to process and analyze large volumes of data in real-time or near-real-time. It provides a way to extract insights and knowledge from massive and complex data sets. The analytics engine interfaces with the Data Lake and Data Warehouse to pre-process the data and run analytical workloads, including machine learning models to translate raw data into actionable insights.

Arranging these components in layers yields a reference Big Data management stack.

Reference Architecture for Big Data Management

Different Approaches to Big Data Management

Early Big Data architectures were created to make data available across an enterprise through centralized repositories. However, as the business climate changed and architectures evolved, the data landscape split into two primary planes.

Operational Data Plane: The data that is responsible for running the business exists in the operational data plane. This data has a transactional nature and it serves the needs of the applications running the enterprise.

Analytical Data Plane: This data is analytical in nature and provides an aggregated view of the facts captured from the operational data plane. It is modeled to provide business insights and perspectives for the business development of the enterprise.

This division of data creates several issues. First, transferring data between transactional and analytical systems introduces process inefficiencies in the form of time lags and outdated data. Second, these systems have historically been designed with technical users in mind, which makes it difficult for business users to work independently. This has created a gap between business users and engineers and is a potential source of mistrust within the organization.

Big Data management systems have progressively evolved to bridge this gap in a few ways.

First Iteration: Application-Specific Data Warehouse

In the first iteration architecture, the two planes of data were bridged via a data warehouse.

This was the pre-Internet era of the 1980s and early 1990s, when organizations started to recognize the need for a centralized repository to store and manage their growing heaps of data. At the time, most organizations used traditional database systems to store transactional data, but these systems were not designed for growing data volumes or for complex analytics across the variety of data being generated. This led to the development of data warehousing as a way to manage the vast amounts of data generated by organizations and to provide analytical capabilities for deriving business intelligence.

The early data warehousing platforms were configured with loosely defined ETL pipelines across data sources. However, these systems could not keep up with the growing volume of data. Moreover, they could not support machine learning workloads, which require huge datasets for model training and validation. As a result, they were not well suited to Big Data handling. Nevertheless, this was just the beginning of the Big Data revolution.

Second Iteration: Big Data Lake

The second iteration of Big Data architecture was built around the data lake concept. Here, data from different applications was loaded into and stored in a large central repository, the data lake. A data lake also offered the flexibility to store data in any structural format, optionally pre-processing it before storage. With the ability to store and manage vast amounts of data, data lakes provided a rich source of training data for predictive models that could help organizations make better decisions, and this was later extended to more complex machine learning workloads.

This iteration established a broader Big Data ecosystem. However, it still relied on long-running batch processing jobs to move data between data lakes and data warehouses, so it remained inefficient for high-velocity data.

Third Iteration: Data Lakehouse

The third iteration combined a data lake and a data warehouse into a single architecture, the data lakehouse.

A data lakehouse provides a unified platform for data storage, processing, and analysis, making it easier for organizations to manage their data at scale. It generally offers better query performance and lower costs than the architectures of previous iterations.

Combined with stream processing capabilities, this architecture is also well suited to handling real-time data.

Fourth Iteration: Flexible Cloud Deployment

The previous iterations of Big Data systems evolved toward cloud-based Big Data platforms that provide a fully managed and scalable solution for Big Data management and analysis. These systems provide the scalability, performance, and ease of use required to handle Big Data at scale, and are optimized for batch as well as real-time data processing.

A further trend in this iteration is support for hybrid cloud and edge computing deployments. In a hybrid cloud, different components of the Big Data management system are distributed across the public cloud and an on-premises private cloud to form a single, integrated Big Data management system. By adding edge computing, the system can be architected with custom workflows in which data ingestion, processing, and analysis happen on-premises for sensitive or real-time data.

These iterations of Big Data systems have paved the way for organizations to effectively manage and analyze large amounts of data, and have enabled the development of new and innovative data-driven applications and services.

The evolution of Big Data systems continues, with more middleware components being added to the base architecture to bring new capabilities that help organizations better manage and gain insights from their data.

Data Mesh

After many years of iterative improvements in Big Data management, one major problem has emerged: the centralized approach to managing data, which becomes a bottleneck for large organizations. Data Mesh is a relatively new approach to data management in which data is treated as a product, and data teams are organized as self-contained, cross-functional teams responsible for managing specific data products.

The core principles of data mesh include:

- Domain-oriented decentralization: Data is owned and managed by individual domains, which are responsible for creating and maintaining data products tailored to their users’ specific needs.

- Data as a product: Data is treated as a product, with data teams acting as product teams that are responsible for managing the full lifecycle of their data products.

- Self-serve data infrastructure: Data infrastructure is managed as a shared service, with data teams responsible for creating and maintaining data platforms that are easy to use and enable self-service for their users.

- Federated governance: Data governance is distributed across domains, with each domain responsible for defining its own data quality standards and ensuring that its data products adhere to those standards.
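In practice, treating data as a product under federated governance often means the owning domain publishes a contract it is accountable for. The sketch below is hypothetical throughout: `DataProduct`, its fields, and the `non_negative_amount` check are illustrative names, not part of any data mesh standard or tool. It shows a domain declaring its schema and its own quality rules, which consumers or a governance process can then verify.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class DataProduct:
    """Hypothetical descriptor for a domain-owned data product."""
    name: str
    owner_domain: str                  # domain team accountable for this product
    schema: dict[str, type]            # published contract for consumers
    quality_checks: list[Callable[[dict], bool]] = field(default_factory=list)

    def validate(self, record: dict) -> list[str]:
        """Return a list of problems; an empty list means the record meets the contract."""
        problems = [f"missing or mistyped field: {col}"
                    for col, typ in self.schema.items()
                    if not isinstance(record.get(col), typ)]
        problems += [f"failed check: {check.__name__}"
                     for check in self.quality_checks if not check(record)]
        return problems


def non_negative_amount(record: dict) -> bool:
    """Domain-defined quality rule (hypothetical): amounts must be non-negative numbers."""
    amount = record.get("amount")
    return isinstance(amount, (int, float)) and amount >= 0


orders = DataProduct(
    name="orders_daily",
    owner_domain="sales",
    schema={"order_id": int, "amount": float},
    quality_checks=[non_negative_amount],
)
print(orders.validate({"order_id": 7, "amount": 19.5}))   # []
print(orders.validate({"order_id": 8, "amount": -3.0}))  # ['failed check: non_negative_amount']
```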

The goal of a data mesh approach is to enable organizations to scale their data capabilities in a way that is more flexible, responsive, and efficient than traditional centralized approaches to data management. By empowering individual data teams to manage their own data products, and providing them with the tools and infrastructure they need to do so, data mesh aims to enable organizations to more effectively leverage their data assets to drive business value.

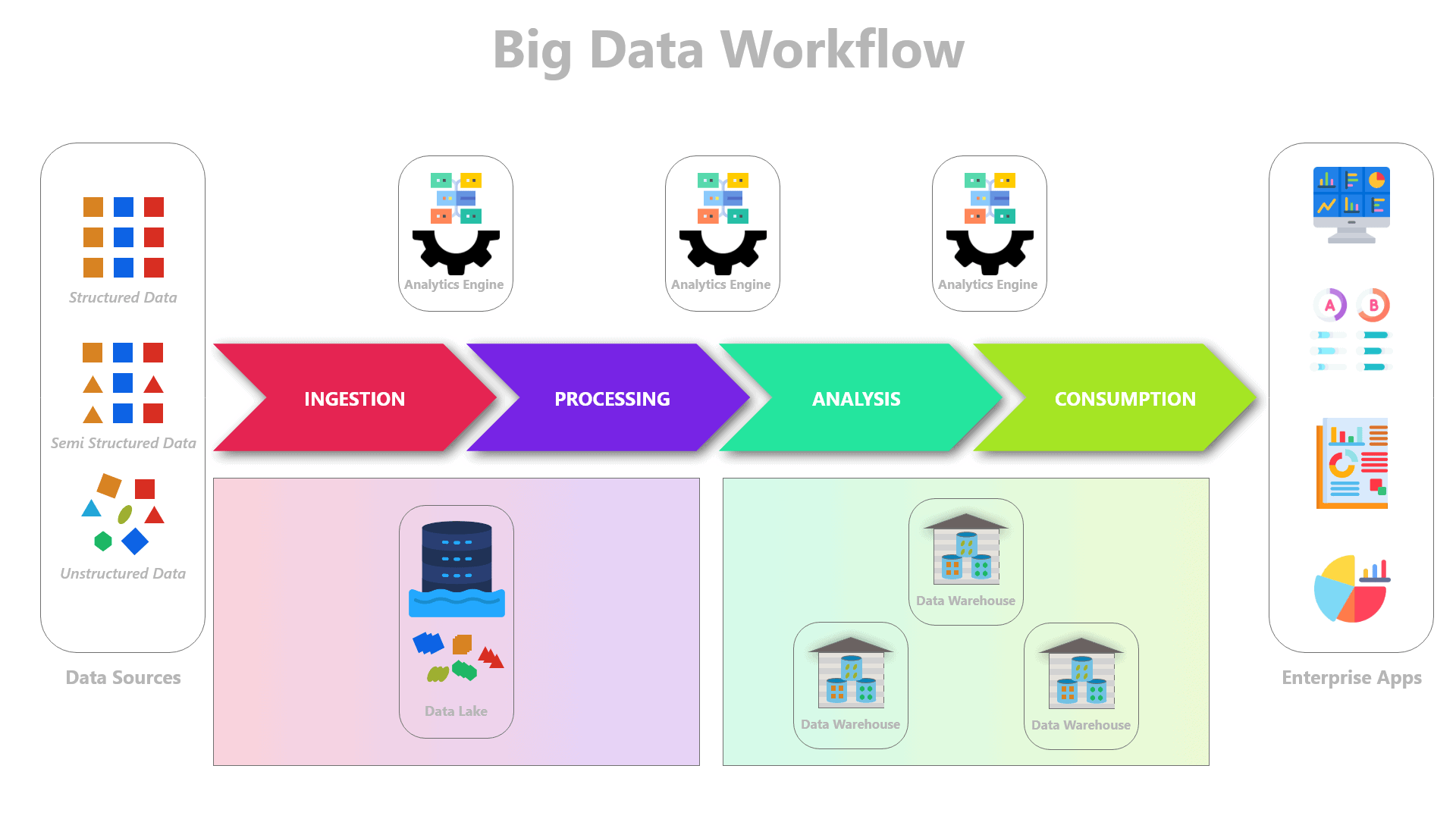

Reference Workflow for Big Data Management on the Cloud

Based on the reference architecture and essential components, a reference workflow for a Big Data management system can be described in four phases.

Data Ingestion Phase: The Data Ingestion phase is responsible for importing and storing data from various data sources, such as databases and other data stores. The end result of ingestion is a common repository of data, in the form of a Data Lake or another Big Data storage system.

Different Ways of Ingesting Data in Big Data Systems

There are several ways to ingest data into big data systems, and the choice depends on the requirements of specific use cases, and business needs. Here are some common methods that are broadly employed for ingesting data in big data systems:

- Batch processing: Data is collected over a period of time and then ingested into the big data storage in bulk. This method is ideal for non-time-sensitive data processing and analytics tasks. Hadoop is one of the earliest tools used for batch processing of big data.

- Real-time/streaming processing: Data is ingested and processed as soon as it's generated, allowing for real-time analysis and decision-making. Real-time events emitted from applications and third-party sources are best handled using this ingestion method. It is suitable for time-sensitive applications, such as fraud detection and real-time analytics.

- Change data capture (CDC): This method captures changes in data sources (such as databases) and sends the changed data to the big data system. CDC allows for efficient and incremental data ingestion without requiring a full data load.

- APIs and SDKs: Many big data systems and cloud providers offer APIs (Application Programming Interfaces) and SDKs (Software Development Kits) to programmatically ingest data into their systems. This allows developers to build custom applications without writing an additional layer of code or configuration for data ingestion.

- Data integration middleware: These platforms provide a unified way to ingest data from various sources, transform it, and load it into big data management systems. They can handle multiple data formats and sources, making them suitable for complex data ingestion tasks.

- Web scraping/crawling: In this approach, data is collected from websites and web services by crawling and scraping content directly from web pages. This method is useful for ingesting data from external sources such as social media, news websites, or other public data sources. It works in conjunction with one of the approaches above, but it is not always advisable, since it may violate the terms of use of many websites.
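The idea behind change data capture can be sketched by comparing two snapshots of a table and emitting only the differences. This snapshot-diff approach is a simplification chosen for illustration: production CDC tools usually read the database's transaction or replication log instead, which avoids rescanning the whole source table. The function name and sample rows are hypothetical.

```python
def capture_changes(previous: dict, current: dict) -> list[tuple]:
    """Compare two snapshots keyed by primary key and emit change events.

    Returns (operation, key, row) tuples, where operation is
    "insert", "update", or "delete".
    """
    changes = []
    for key, row in current.items():
        if key not in previous:
            changes.append(("insert", key, row))
        elif previous[key] != row:
            changes.append(("update", key, row))
    for key, row in previous.items():
        if key not in current:
            changes.append(("delete", key, row))
    return changes


yesterday = {1: {"name": "Ana", "tier": "silver"}, 2: {"name": "Raj", "tier": "gold"}}
today = {1: {"name": "Ana", "tier": "gold"}, 3: {"name": "Li", "tier": "silver"}}
for event in capture_changes(yesterday, today):
    print(event)
```

Only the changed rows are sent downstream, which is the incremental behaviour that distinguishes CDC from a full reload.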

Data Processing Phase: This phase pre-processes the data to convert it into a form suitable for further analytics. Several data pipelines act on the raw data in the Data Lake and transform it step by step into a processed form through one or more Extract, Transform, Load (ETL) processes. Most of the data pre-processing happens within the Data Lake itself; however, some of it may be shifted to a Data Warehouse.
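A single ETL step can be sketched with Python's standard library. Here an in-memory SQLite database stands in for the processed store, and the raw records, table name, and cleaning rules are hypothetical; a real pipeline would run on a distributed engine and chain several such steps.

```python
import sqlite3

raw_events = [  # hypothetical raw records as they might sit in a data lake
    {"order_id": "1001", "amount": "19.99", "country": " us "},
    {"order_id": "1002", "amount": "n/a", "country": "DE"},
    {"order_id": "1003", "amount": "5.00", "country": "de"},
]


def extract():
    """Extract: read raw records from the source."""
    return iter(raw_events)


def transform(records):
    """Transform: cast types, normalise country codes, drop unparseable rows."""
    for rec in records:
        try:
            amount = float(rec["amount"])
        except ValueError:
            continue  # skip malformed amounts; a real pipeline might quarantine them
        yield int(rec["order_id"]), amount, rec["country"].strip().upper()


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, amount REAL, country TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for the processed data store
load(transform(extract()), conn)
totals = conn.execute(
    "SELECT country, SUM(amount) FROM orders GROUP BY country ORDER BY country"
).fetchall()
print(totals)  # [('DE', 5.0), ('US', 19.99)]
```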

Data Analytics Phase: In the Data Analytics phase, advanced analytics computations are performed on the processed datasets to gain insights that deliver business value. These computations range from simple regression models to complex machine learning models. For complex machine learning computations, the data partitions containing the training and testing datasets are typically hosted in the Data Warehouse.
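At the simple end of that range, a regression model can be fitted with nothing more than the standard library. The sketch below fits a one-feature ordinary least squares line on a training split and scores it on a held-out test split; the dataset and function names are hypothetical, and real workloads would use a statistics or machine learning library over far larger data.

```python
def fit_linear(xs, ys):
    """Ordinary least squares for y = a + b*x with a single feature."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return mean_y - slope * mean_x, slope


def mean_absolute_error(model, xs, ys):
    """Average absolute gap between predictions and actual values."""
    intercept, slope = model
    return sum(abs(intercept + slope * x - y) for x, y in zip(xs, ys)) / len(xs)


# Hypothetical processed dataset: ad spend vs. weekly sales (both in thousands).
spend = [1, 2, 3, 4, 5, 6, 7, 8]
sales = [3.1, 4.9, 7.2, 8.8, 11.1, 13.0, 14.9, 17.2]

train_x, test_x = spend[:6], spend[6:]  # hold out the last two rows for testing
train_y, test_y = sales[:6], sales[6:]

model = fit_linear(train_x, train_y)
print("intercept, slope:", model)
print("test MAE:", mean_absolute_error(model, test_x, test_y))
```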

Data Consumption Phase: In this phase, an additional layer of data is derived from the Data Warehouse to deliver insights. It serves as the input to end-user applications such as BI dashboards, data visualization tools, recommendation/prediction systems, alerting, and reporting engines.

Together, these four phases are stitched into a seamless flow of data through the essential components of the Big Data management system.

Reference Workflow for Big Data Management

Emerging Trends around Big Data Architecture

Data Observability

The whole purpose of managing Big Data is to ensure that the process of data analytics is smooth and progressively matures in delivering insights from data. But analytics is of no use if the quality of data going in is poor and unreliable.

Data observability refers to a set of tools and technologies that help an organization understand the health of its data. It tracks the lineage of data from source to consumption and often uses machine learning to automatically monitor data and detect anomalies. In doing so, data observability supports agile data management, helping the organization improve its business and build and maintain efficient products.
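One of the simplest checks an observability tool can run is a volume test on each table. The sketch below uses a plain statistical z-score rather than machine learning, and the three-standard-deviation threshold and the row counts are illustrative assumptions; it flags a daily load that deviates sharply from recent history.

```python
from statistics import mean, stdev


def volume_anomaly(history, today, threshold=3.0):
    """Flag today's row count if it lies more than `threshold` standard
    deviations from the historical mean (a simple z-score test)."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu  # flat history: any change is unusual
    return abs(today - mu) / sigma > threshold


daily_rows = [10_120, 9_980, 10_050, 10_210, 9_890, 10_070, 10_000]  # hypothetical
print(volume_anomaly(daily_rows, 10_090))  # False: a typical load
print(volume_anomaly(daily_rows, 2_300))   # True: likely a broken upstream job
```

Checks like this run after each load, so a problem is caught before the bad data reaches dashboards or models downstream.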

Data as a Service / Data as a Product

Data as a Service (DaaS) is a model of providing data on demand to users, applications, and systems over a network. In the DaaS model, data is treated as a product or service that is provided to customers on a subscription basis or on a pay-per-use basis.

The data provided through DaaS can come from a variety of sources, including public data sets, proprietary data sources, and data generated by sensors or IoT devices. In this model, the Big Data management system is architected specifically to ingest and process all this data into a suitable form so that it can be served through APIs or other web-based interfaces.

Data Mart

A data mart is a subset of a larger data warehouse that is designed to serve the needs of a specific department or business unit within an organization. It is a repository of data that is optimized for specific business functions or groups, such as sales, marketing, or finance.

Unlike a data warehouse, which contains a comprehensive set of data from across the organization, a data mart typically contains only the data that is relevant to a particular department or function. The data in a data mart is organized in a way that makes it easy for business users to access and analyze the data for their specific needs.
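A data mart can be as simple as a filtered, pre-aggregated view over warehouse tables. The sketch below uses an in-memory SQLite database with hypothetical table and column names; in practice a data mart may also be a separate physical store that is refreshed from the warehouse.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical enterprise-wide warehouse table covering several departments.
conn.execute("CREATE TABLE fact_revenue (dept TEXT, region TEXT, quarter TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO fact_revenue VALUES (?, ?, ?, ?)",
    [
        ("sales", "EMEA", "Q1", 120.0),
        ("sales", "APAC", "Q1", 80.0),
        ("marketing", "EMEA", "Q1", 30.0),
        ("sales", "EMEA", "Q2", 150.0),
    ],
)

# The data mart: only the sales department's rows, aggregated the way that team reports.
conn.execute("""
    CREATE VIEW sales_mart AS
    SELECT region, quarter, SUM(amount) AS revenue
    FROM fact_revenue
    WHERE dept = 'sales'
    GROUP BY region, quarter
""")

mart_rows = conn.execute("SELECT * FROM sales_mart ORDER BY quarter, region").fetchall()
print(mart_rows)  # [('APAC', 'Q1', 80.0), ('EMEA', 'Q1', 120.0), ('EMEA', 'Q2', 150.0)]
```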

Data Fabric

Data fabric is a concept in the field of data management that describes an architecture or framework for managing and integrating large amounts of data from multiple sources.

Data fabric is designed to provide a unified and consistent view of data across different types of data sources, such as databases, data warehouses, data lakes, and other data repositories. It enables seamless access to data regardless of its location or format and allows users to work with data in a distributed and decentralized manner.

Data fabric aims to create a single, unified layer of data, to be consumed by different applications and users across the organization.
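The idea of a single access layer can be sketched as a registry that hides where and how each dataset is stored. In the sketch below, `DataFabric`, its methods, and the three sources are hypothetical; a production data fabric would also handle metadata, security, and performance concerns that this sketch ignores.

```python
import csv
import io
import json
import sqlite3
from typing import Callable, Iterator

Reader = Callable[[], Iterator[dict]]


class DataFabric:
    """Hypothetical unified access layer: callers ask for a dataset by name and
    always get a list of dictionaries back, whatever the underlying store."""

    def __init__(self):
        self._sources: dict[str, Reader] = {}

    def register(self, name: str, reader: Reader) -> None:
        self._sources[name] = reader

    def read(self, name: str) -> list[dict]:
        return list(self._sources[name]())


# Three differently shaped sources (sample data is illustrative).
csv_text = "id,city\n1,Pune\n2,Oslo\n"
json_text = '[{"id": 3, "city": "Lima"}]'
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE sites (id INTEGER, city TEXT)")
db.execute("INSERT INTO sites VALUES (4, 'Accra')")


def read_db() -> Iterator[dict]:
    """Turn SQL rows into dictionaries using the cursor's column names."""
    cursor = db.execute("SELECT id, city FROM sites")
    columns = [col[0] for col in cursor.description]
    for row in cursor:
        yield dict(zip(columns, row))


fabric = DataFabric()
# CSV values arrive as strings, so the reader casts id to int for a consistent view.
fabric.register(
    "crm_sites",
    lambda: ({"id": int(r["id"]), "city": r["city"]} for r in csv.DictReader(io.StringIO(csv_text))),
)
fabric.register("partner_sites", lambda: iter(json.loads(json_text)))
fabric.register("erp_sites", read_db)

for name in ("crm_sites", "partner_sites", "erp_sites"):
    print(name, fabric.read(name))
```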