In the rapidly evolving business landscape, the advent of cloud technology has been nothing short of revolutionary. The cloud has not only changed the way we access information and data but has also transformed the very essence of how we communicate. In the not-so-distant past, most forms of communication technologies were tethered to legacy telecom infrastructure, limiting the scale, speed, and flexibility of user interactions. The cloud ushered in a new era of communication by offering scalable and on-demand virtual cloud services, acting as a giant telephone exchange over the Internet. This paradigm shift laid the foundation for a myriad of communication solutions.

What is Cloud Communication?

Cloud communication refers to the use of cloud computing to provide various forms of real-time collaboration and information sharing over the Internet. It encompasses a broad range of services and applications that facilitate seamless, scalable, and flexible communication experiences through voice, video, and messaging.

The evolution of cloud communication traces its roots to the early 2000s, when the cloud was primarily associated with offline collaboration tools such as data storage, email, and file sharing. These services were primitive in that they did not offer any real-time voice or video communication. At that time, they were offered alongside legacy communication technologies. The result was an integrated communication suite with call and conference features over the PSTN (Public Switched Telephone Network), with add-on collaboration tools for email and file sharing over the Internet. This arrangement did not result in a seamless user experience, and it paved the way for a series of innovations to build real-time voice and video capabilities over the Internet, unified with all forms of collaboration tools.

Major Innovation Milestones for Cloud Communication

The unified cloud communication services we see today, with integrated voice, video, messaging, file sharing, and screen sharing, are the result of a series of technological innovations that pushed the boundaries of the Internet. It all started with the standardization of RTP (Real-time Transport Protocol), which paved the way for transmitting voice and video payloads over the Internet. Here is a chronological sequence of the major milestones:

Standardization of RTP: RTP was standardized by the IETF (Internet Engineering Task Force) during the mid-1990s. The chief goal was to enable the real-time transfer of streaming media payloads over the standard TCP/IP stack, which formed the foundation for the Internet. This effort superseded an earlier protocol named Network Voice Protocol (NVP), conceived in the 1970s, when the underlying packet communication technology wasn’t mature enough to carry media payloads with sustained quality of service. RTP was later complemented by related streaming protocols such as RTSP (Real Time Streaming Protocol), while RTMP (Real-Time Messaging Protocol) emerged separately as a vendor-developed streaming protocol.
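To make the idea of an RTP media payload more concrete, here is a minimal sketch of the 12-byte fixed RTP header that precedes each chunk of audio or video. The class name `RtpHeader` and its methods are illustrative assumptions; the sketch ignores padding, header extensions, and contributing-source (CSRC) lists.

```python
import struct
from dataclasses import dataclass

RTP_VERSION = 2  # version number carried in every standardized RTP packet


@dataclass
class RtpHeader:
    """Illustrative model of the fixed RTP header (no CSRC list, no extension)."""
    payload_type: int      # 7-bit identifier of the media encoding
    sequence: int          # 16-bit counter used to detect loss and reordering
    timestamp: int         # 32-bit sampling instant of the payload
    ssrc: int              # 32-bit identifier of the media source
    marker: bool = False   # profile-specific flag, e.g. a frame boundary

    def pack(self) -> bytes:
        # Byte 0: version in the top two bits; padding, extension, and
        # CSRC count are left at zero in this sketch.
        first = RTP_VERSION << 6
        # Byte 1: marker bit followed by the 7-bit payload type.
        second = (int(self.marker) << 7) | (self.payload_type & 0x7F)
        return struct.pack(
            "!BBHII",
            first,
            second,
            self.sequence & 0xFFFF,
            self.timestamp & 0xFFFFFFFF,
            self.ssrc & 0xFFFFFFFF,
        )

    @classmethod
    def unpack(cls, data: bytes) -> "RtpHeader":
        first, second, seq, ts, ssrc = struct.unpack("!BBHII", data[:12])
        if first >> 6 != RTP_VERSION:
            raise ValueError("not an RTP version 2 packet")
        return cls(second & 0x7F, seq, ts, ssrc, bool(second >> 7))
```

A receiver would typically use the sequence number to reorder packets and the timestamp to schedule playback, which is what lets a packet network carry continuous media.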

Standardization of Signaling Protocols and Emergence of Voice over Internet Protocol (VoIP): The RTP standardization was followed by a series of signaling protocols designed for managing media sessions. A session represents a unique stream of media payloads carried over the IP network from one end to another, or broadcast across many endpoints. To enable voice/video calls and conferencing use cases, a few session-centric protocols were introduced, namely SIP (Session Initiation Protocol), SAP (Session Announcement Protocol), and SDP (Session Description Protocol). Apart from the IETF, the ITU (International Telecommunication Union) also introduced H.323, a suite of protocols for call and session signaling. The advent of these signaling protocols led to the emergence of VoIP technology, which provided the initial catalyst for building Internet telephony systems for business.
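As an illustration of what a session description looks like, the sketch below parses the media lines of a small SDP body of the kind a SIP endpoint might send when offering a call. The sample values (user name, addresses, port) are made up for the example, and `parse_media_lines` is a hypothetical helper that handles only the simplest form of the `m=` line.

```python
# A hand-written SDP body; every value here is illustrative.
SAMPLE_SDP = """v=0
o=alice 2890844526 2890844526 IN IP4 198.51.100.1
s=Example call
c=IN IP4 198.51.100.1
t=0 0
m=audio 49170 RTP/AVP 0
a=rtpmap:0 PCMU/8000
"""


def parse_media_lines(sdp: str) -> list[dict]:
    """Extract the media descriptions (m= lines) from an SDP body."""
    media = []
    for line in sdp.splitlines():
        if not line.startswith("m="):
            continue
        # Layout: m=<media> <port> <transport> <format> [<format> ...]
        kind, port, transport, *formats = line[2:].split()
        media.append(
            {
                "kind": kind,
                "port": int(port),
                "transport": transport,
                "formats": formats,
            }
        )
    return media


print(parse_media_lines(SAMPLE_SDP))
```

The single media line here tells the remote party that audio will be carried as RTP on the stated port, which is how signaling and media transport fit together.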

Development of WebSocket: WebSocket is a communication protocol that provides full-duplex communication channels between a web browser and a web server. It overcomes the request/response limitations of HTTP to enable bidirectional, real-time communication, allowing data to be exchanged simultaneously from both ends without the need for multiple HTTP requests/responses. It was standardized by the IETF in 2011 and soon saw widespread adoption for real-time data streaming applications such as online gaming, financial trading, chat, and collaboration apps. Although WebSocket was not meant for carrying real-time voice and video payloads, it was a good candidate for building an alternative signaling protocol to SIP for establishing real-time communication over the Web.
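A WebSocket connection starts as an ordinary HTTP request that is then upgraded to a persistent, full-duplex channel. As a small sketch of that opening handshake, the function below computes the `Sec-WebSocket-Accept` value a server returns for the client's `Sec-WebSocket-Key`. The function name is an assumption; the fixed GUID is the one defined by the WebSocket specification.

```python
import base64
import hashlib

# Fixed GUID defined by the WebSocket protocol specification.
WEBSOCKET_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def websocket_accept(client_key: str) -> str:
    """Derive the Sec-WebSocket-Accept header value from the client's key."""
    digest = hashlib.sha1((client_key + WEBSOCKET_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


# Sample key taken from the specification's own handshake example.
print(websocket_accept("dGhlIHNhbXBsZSBub25jZQ=="))
```

Once the server replies with this value, both sides stop speaking HTTP on that connection and exchange WebSocket frames in either direction.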

Evolution of Web Real-Time Communication (WebRTC): During the Web 2.0 era, it was increasingly felt that all software applications could be delivered via the web browser. This was the result of a new application deployment strategy popularly known as SaaS (Software as a Service). However, delivering real-time communication applications over web browsers required additional plugins, third-party softphone applications, or even phone devices to make calls. This limited unified, web-based audio/video collaboration and created the need for WebRTC, an open-source project and W3C standard that brought real-time communication capabilities directly to web browsers, enabling video and audio communication within web applications without the need to install third-party plugins.

Applications of Cloud Communication

All these technological milestones played a crucial role in enabling a few practical use cases of cloud communication, which can be broadly categorized into the following applications:

Media Streaming: Media streaming refers to the process of delivering multimedia content, such as audio and video, over a network in a continuous stream to be consumed in real time by multiple end users. It leverages RTP or similar protocols to stream and play multimedia content without waiting for the entire media content to be downloaded. More specialized use cases of media streaming include live streaming, on-demand streaming, and peer-to-peer streaming.

Data Streaming: Data streaming refers to the continuous and real-time transmission of data from a source to a destination over a network. Unlike traditional batch processing, where data is transmitted in large batches or files, data streaming allows for the continuous transmission of chunks of data belonging to a large dataset. This approach also enables continuous ingestion, processing, and analysis of data. Data streaming has found immense adoption in online collaboration, real-time analytics, and mission-critical applications that require continuous real-time data exchange.

Unified Communication as a Service (UCaaS): As internet speeds accelerated over the last few decades, and the demand for seamless collaboration grew, Unified Communication as a Service emerged as a game-changer. A UCaaS platform wraps the traditional peer-to-peer media communication and VoIP services into a unified platform to offer various communication tools based on voice, video, and messaging. All of this is packaged as a single cohesive software offering for businesses and end users with extensions to incorporate additional components such as IP PBXs, media gateways, and IP softphones.

Communication Platform as a Service (CPaaS): CPaaS emerged as a natural successor in the evolution of cloud communication by embracing a more modular approach. It leverages the foundations laid by UCaaS and WebRTC and goes a step further to let businesses customize their workflows to incorporate communication features within any software or web application, through a programmable interface. This opens up new possibilities for innovation and use cases. With CPaaS, businesses can design their product to embed communication facilities offered by a CPaaS provider and offer voice, video, or message-based communications as part of their product’s core user experience.

Other Innovations Enabling Cloud Communication

While the technological milestones described above played a crucial role in shaping today’s cloud communication ecosystem, two more innovations helped bring cloud communication to where it is today: the invention of codecs and the concept of the API.

The invention of various audio and video codecs made it possible to compress media payloads so that they could be transmitted across a packet-switched network like the Internet. This was a major achievement, since packet switching was not inherently designed to carry continuous media payloads, unlike the traditional telephone network, which was built on the earlier circuit-switching technology.
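A quick back-of-the-envelope calculation shows why compression matters. The sketch below computes the bitrate of uncompressed 720p video and compares it with an assumed target bitrate for the encoded stream; the 3 Mbit/s target is an illustrative assumption, not a figure from any particular codec or service.

```python
def raw_video_bitrate(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Bits per second needed to send uncompressed video frames."""
    return width * height * bits_per_pixel * fps


# 1280x720 frames, 24 bits per pixel, 30 frames per second.
raw_bps = raw_video_bitrate(1280, 720, 24, 30)

# Assumed bitrate budget for the compressed stream (illustrative only).
target_bps = 3_000_000

compression_ratio = raw_bps / target_bps

print(f"raw: {raw_bps / 1e6:.1f} Mbit/s, ratio needed: {compression_ratio:.0f}:1")
```

Under these assumptions the raw stream needs roughly 664 Mbit/s, so the codec has to shrink it by a factor of about 221 to fit the assumed budget.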

The concept of API and API management was an important development in making the web more accessible and customizable for the users. An API offers a programmable interface to allow application developers to control the functionality of a software application through a set of data exchanges using HTTP methods. In the context of cloud communication, these functionalities are offered as programmable features that enable audio/video calls, collaboration, message exchanges, screen sharing, and a host of other custom and value-added services, controlled via APIs.
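As a rough sketch of what such a programmable interface can look like from the developer's side, the snippet below builds (but does not send) an HTTP request that asks a communication provider to deliver a text message. The base URL, the `/messages` path, the JSON field names, and the bearer-token scheme are all hypothetical; real providers define their own endpoints and authentication.

```python
import json
import urllib.request

# Hypothetical API base URL, used purely for illustration.
API_BASE = "https://api.example.com/v1"


def build_send_message_request(api_key: str, to: str, text: str) -> urllib.request.Request:
    """Prepare a POST request that would ask the provider to send a message."""
    body = json.dumps({"to": to, "text": text}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/messages",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )


request = build_send_message_request("demo-key", "+15550100", "Your code is 1234")
print(request.get_method(), request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` would actually send it; the point of the sketch is that a communication feature reduces to an HTTP method, a resource URL, and a structured payload.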

The As-a-Service Models of Cloud Communication

UCaaS and CPaaS have emerged as the most dominant forms of cloud communication service offerings today. In many cases, they are integrated to offer a certain subset of cloud communication features as per their targeted use cases. Integrating these two services provides a comprehensive solution that combines programmable communication capabilities with standardized communication tools. Some of the benefits of this integration include:

A uniform cloud communication platform that offers an end-user communication and collaboration suite along with programmable capabilities and API integration to embed any form of communication within any software.

Integration with traditional mobile and telephone networks to access SMS or voice capabilities and provide additional options for reaching users.

Customization of communication workflows to build tailored communication solutions that align with specific business processes and requirements.

Use Cases of UCaaS

Some of the typical use cases of UCaaS include:

- Basic Communication: Standard online communication tools, including voice calling, video conferencing, instant messaging, and presence.

- Collaboration: Features like file sharing, screen sharing, and collaborative document editing for enhancing teamwork and productivity.

- Integration with Productivity Apps: Integrations that support popular productivity applications, such as email clients and calendars, to streamline communication within existing workflows.

- Unified Messaging: Integration of voicemail, email, and other messaging formats into a single, unified interface.

- Mobile and Remote Accessibility: Support for mobile and remote accessibility, allowing users to stay connected from anywhere.

UCaaS focuses on providing a comprehensive suite of communication and collaboration software to enhance internal communication and productivity within organizations. The target audience for UCaaS is the end user who uses these software suites.

Use Cases of CPaaS

Basic CPaaS use cases are:

- In-app SMS and Voice Calling: APIs for sending and receiving SMS messages and making voice calls programmatically within an app.

- Built-in Video Calling: APIs for integrating real-time video communication into applications, enabling features like video conferencing and collaboration.

- Embedded Chat: APIs for implementing chat functionality, allowing developers to integrate real-time messaging features within their apps.

- Portal Integration: Support for Web Real-Time Communication (WebRTC), allowing browser-to-browser audio and video communication and messaging.

CPaaS focuses on providing developers with tools and APIs (Application Programming Interfaces) to build custom communication applications and integrate real-time communication features into their existing software applications. CPaaS targets developers, software companies, and businesses that want to enhance their application’s user experience with real-time communication features, such as in-app voice calling, messaging, or video conferencing, without building these features from scratch.

Reference Architecture for Cloud Communication Offerings

Media Streaming

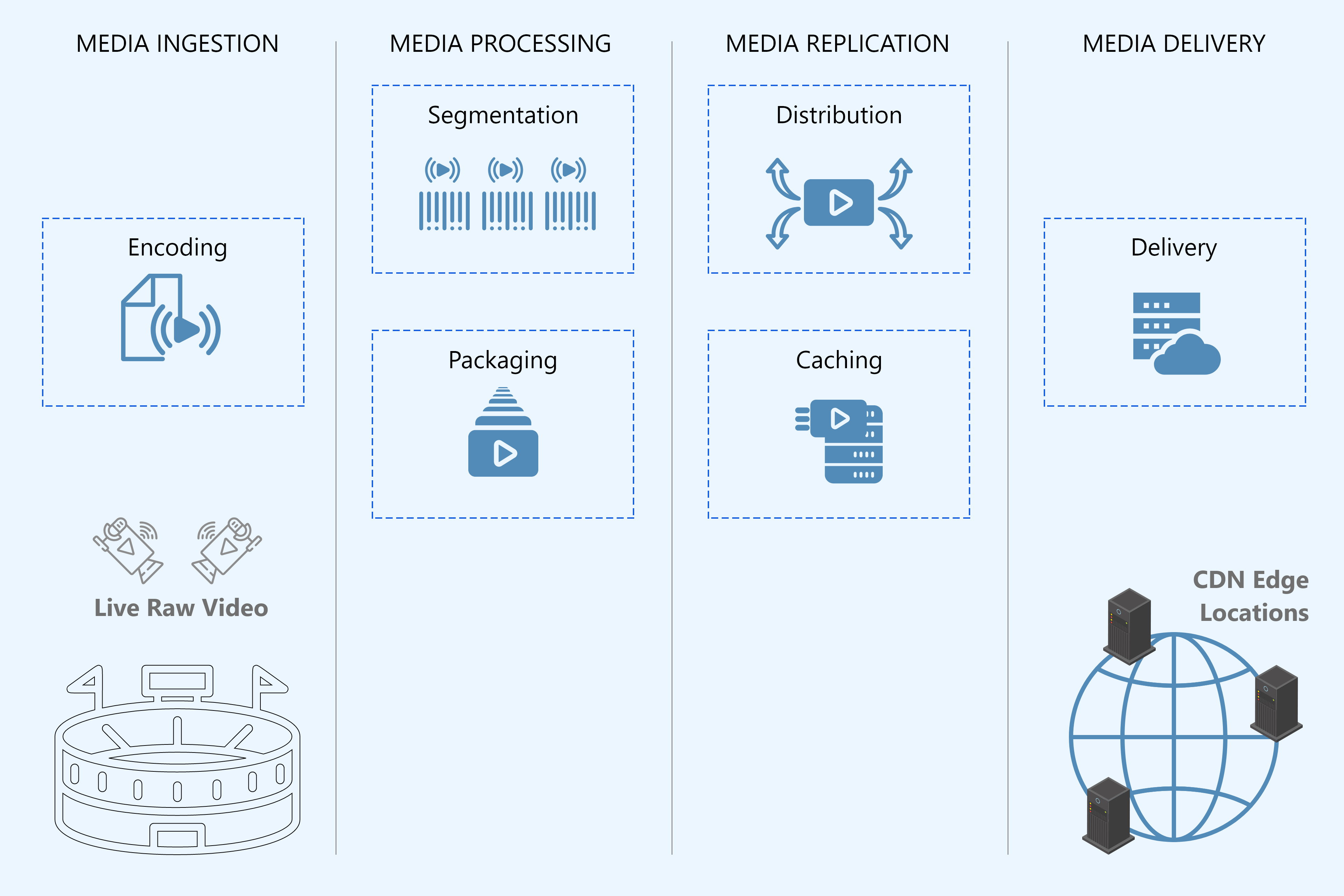

Let’s look at the main logical phases involved in implementing a live media streaming service.

Logical Phases in Media Streaming

Media Ingest Phase: This phase is responsible for managing the media pipeline that ingests the media content, performs encoding and metadata generation, and manages the media storage.

This phase converts live media to a digital format. It involves capturing the raw media stream, compressing and converting it into a format optimized for streaming over the internet, such as H.264 for video and AAC for audio. The metadata is created in a searchable format to query information related to the media such as titles, descriptions, genres, and ratings. The storage acts as a master repository for the raw media files for on-demand streaming in the future.

Media Processing Phase: This phase is responsible for processing the digital format of the live media stream and making it ready to be streamed. Broadly, there are two steps in this processing, segmentation and packaging.

Segmentation divides the stream into smaller chunks. Each chunk is an individual segment containing a very short duration of the media content, usually a few seconds in length. After segmentation, the individual media segments are packaged into a format suitable for delivery over the Internet. In the case of HLS (HTTP Live Streaming), one of the most prevalent protocols used for live streaming, the segmented media is contained within the ‘.ts’ file format, also known as MPEG-2 Transport Stream. This packaging process involves hosting the segmented media and creating media playlists in the ‘.m3u8’ format that contain URLs for the individual segments.
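The packaging step can be sketched as follows: given a list of segment files and their durations, produce the text of an HLS media playlist. The function name and the segment file names are assumptions for illustration, and the sketch covers only the basic tags of a live playlist.

```python
import math


def build_live_playlist(segment_uris, durations, first_sequence=0):
    """Build the text of a minimal HLS media playlist for a live stream."""
    if not segment_uris or len(segment_uris) != len(durations):
        raise ValueError("need one duration per segment, and at least one segment")
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        # Upper bound on segment length, rounded up to whole seconds.
        f"#EXT-X-TARGETDURATION:{math.ceil(max(durations))}",
        # Sequence number of the first segment listed in this playlist.
        f"#EXT-X-MEDIA-SEQUENCE:{first_sequence}",
    ]
    for uri, duration in zip(segment_uris, durations):
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(uri)
    # No end-of-list tag is written: for a live stream the player keeps
    # re-fetching the playlist to discover newly added segments.
    return "\n".join(lines) + "\n"


print(build_live_playlist(["seg10.ts", "seg11.ts"], [6.0, 5.5], first_sequence=10))
```

As new segments are produced, the packager would drop the oldest entries, append the newest, and advance the media sequence number, so the playlist behaves like a sliding window over the live stream.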

Replication (or Distribution) Phase: This phase involves an orchestrated process of distributing the media streams to a network of servers responsible for delivering the media streaming service to various geographical locations closer to the end users. Collectively this is known as CDN, or the content delivery network. This replication ensures that the content is always cached closer to end-users, reducing latency and improving delivery performance.

Media Delivery Phase: This phase also involves the CDN and is optimized for media delivery to the end user from various geographical locations closer to them. There are multiple kinds of servers involved in media delivery. The core servers are part of the replication and caching phase and are responsible for CDN ingestion to host local copies of the media segments. The edge servers serve the end users. When an end-user requests to watch the live stream, their DNS resolver resolves the domain name associated with the CDN to an IP address of the nearest edge server.

The orchestration between the core and edge servers of the CDN involves syncing the playlists, maintaining cached copies of the media, and performing additional transcoding to serve content with specific bandwidth or quality constraints. CDNs employ intelligent caching algorithms to determine which content to cache at each edge server based on factors like popularity and geographical demand. There are also additional servers, such as load balancers and proxy servers, to manage the scale and load on the CDN caused by uneven end-user requests arriving from specific locations.
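The caching behavior of an edge server can be sketched with a simple least-recently-used (LRU) policy. Real CDNs use more elaborate, popularity-aware algorithms; LRU is swapped in here only because it is easy to follow. The `EdgeCache` class and the `fetch_from_core` callback are hypothetical names.

```python
from collections import OrderedDict


class EdgeCache:
    """Toy edge-server cache that evicts the least recently requested segment."""

    def __init__(self, capacity, fetch_from_core):
        self.capacity = capacity
        self.fetch_from_core = fetch_from_core  # called on a cache miss
        self._store = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, segment_url):
        if segment_url in self._store:
            # Cache hit: mark the segment as most recently used.
            self._store.move_to_end(segment_url)
            self.hits += 1
            return self._store[segment_url]
        # Cache miss: pull the segment from a core server and keep a copy.
        self.misses += 1
        data = self.fetch_from_core(segment_url)
        self._store[segment_url] = data
        if len(self._store) > self.capacity:
            # Evict the entry that has gone unused for the longest time.
            self._store.popitem(last=False)
        return data


cache = EdgeCache(capacity=2, fetch_from_core=lambda url: url.encode())
for url in ["a.ts", "b.ts", "a.ts", "c.ts", "b.ts"]:
    cache.get(url)
print(cache.hits, cache.misses)
```

In this run only the repeated request for `a.ts` is served locally; frequently requested segments tend to stay at the edge, while rarely requested ones fall back to the core servers.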

Supplementary Components

To ensure that the logical phases are well managed and run smoothly, there are a few additional components that play an important role in a media streaming service.

- Media Servers: Media servers are employed in several places across the live streaming phases to ensure the optimal deliverable quality of the stream output. The deliverable quality varies across devices and platforms, and also depends on technical parameters such as resolution and bandwidth. Media servers perform transcoding to repackage live streaming content into different delivery formats to support playback on various devices and platforms based on changes in the resolution, bitrate, and bandwidth constraints. In addition, media servers also provide media-related functions such as media manipulation (e.g., voice stream mixing) and playing of tones and announcements.

- Content Discovery Portal: This component is responsible for maintaining a searchable catalog of available content for streaming. It also contains algorithms and systems for recommending relevant media content to users based on their viewing history, preferences, and behavior. All this is encompassed within an event lifecycle management layer that manages the lifecycle of live events, including event creation, scheduling, promotion, monitoring, and archival.

- User Management Subsystem: This subsystem is responsible for overall user management, including authentication of users' identities and authorization of their access rights to the streaming service. This includes user registration, login/logout functionality, and management of user profiles. It also manages user subscriptions and payment plans, including subscription upgrades/downgrades, billing cycles, and payment processing.

- Digital Rights Management Subsystem: One important aspect of live media streaming is digital rights management. A separate, dedicated subsystem secures content across various devices and platforms by issuing licenses to end-user streaming platforms. This subsystem may also employ additional measures to safeguard the content from unauthorized access, including content encryption, concurrent stream management, and geo-enforcement.

- Monitoring and Analytics Subsystem: This is a passive subsystem that captures the streaming performance metrics, including video quality, buffering, playback errors, and latency. It also monitors user engagement analytics such as watch time, session duration, content consumption patterns, and user interactions with the service.

- Client Application Subsystem: This subsystem consists of the various client applications or devices used by the end-users to access the streaming service. Examples of client applications include web portals, mobile apps, smart TVs, and streaming media players.
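The transcoding role of media servers described above typically results in several renditions of the same stream at different resolutions and bitrates. The sketch below shows one plausible way to pick a rendition for a given viewer. The rendition ladder, the 0.8 headroom factor, and the function name are illustrative assumptions, not values from any particular service.

```python
# Hypothetical rendition ladder: (name, frame height, bitrate in bits/s),
# ordered from highest to lowest quality.
RENDITIONS = [
    ("1080p", 1080, 5_000_000),
    ("720p", 720, 3_000_000),
    ("480p", 480, 1_500_000),
    ("240p", 240, 400_000),
]


def pick_rendition(available_bps, max_height, headroom=0.8):
    """Choose the best rendition that fits the device and the bandwidth."""
    # Use only a fraction of the measured bandwidth to absorb fluctuations.
    budget = available_bps * headroom
    for name, height, bitrate in RENDITIONS:
        if height <= max_height and bitrate <= budget:
            return name
    # Nothing fits: fall back to the lowest rendition rather than failing.
    return RENDITIONS[-1][0]


print(pick_rendition(available_bps=4_000_000, max_height=1080))
```

With 4 Mbit/s of measured bandwidth, the budget after headroom is 3.2 Mbit/s, so this sketch selects the 720p rendition even though the device could display 1080p.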

Data Streaming

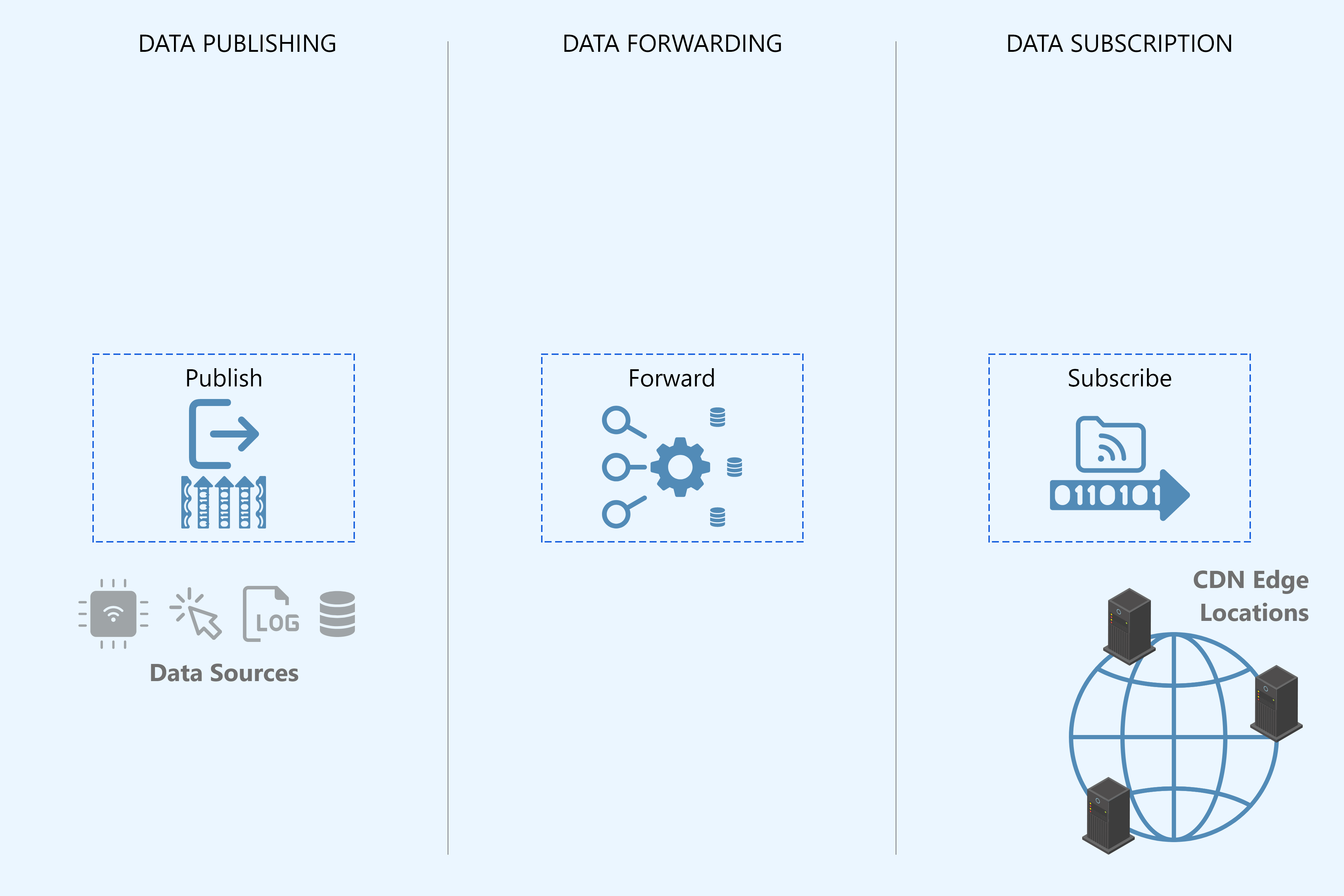

Here is the phase-wise reference view of a data streaming service network.

Logical Phases in Data Streaming

- Data Publishing Phase: This phase marks the ingestion of streaming data from various sources and publishing it over the Internet. The various sources comprise systems such as IoT devices, application logs, databases, social media feeds, and clickstreams.

- Data Forwarding Phase: This phase implements routing logic to forward the published data to its destination. The routing logic is implemented by a globally orchestrated network of message brokers that intelligently forwards the data streams to the locations where they will be consumed during the data subscription phase. Internally, this phase also relies on a CDN to replicate and cache the data on edge servers across the globe, close to where the streamed data is expected to be consumed.

- Data Subscription Phase: This phase in data streaming architecture typically refers to the process of subscribing to a data stream or topic to receive real-time data updates. As part of this phase, the end-user application receives the streamed data from its nearest edge location where the data was earlier forwarded.

The reception of streamed data and subsequent real-time updates can only happen if the end-user application has earlier initiated a subscription request to receive a specific data stream, identified by a unique identifier or a topic name. Only upon confirmation of the subscription does the application start receiving the streamed data published on that identifier. This is orchestrated by the message broker and the CDN components of the forwarding phase.

This phase-wise reference architecture follows the publish-subscribe design pattern, which is the most dominant architectural pattern used to implement global-scale data streaming networks. In a publish-subscribe (pub-sub) messaging model, data producers (publishers) publish messages to topics or channels, and data consumers (subscribers) receive messages from these topics. Publishers and subscribers are decoupled from each other by the forwarding phase, allowing for scalable and flexible communication between different components of a distributed data streaming service.
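The decoupling at the heart of the pub-sub model can be sketched with a tiny in-memory broker. The `Broker` class and the topic name are hypothetical; a real data streaming network distributes this role across many broker nodes and adds persistence, ordering, and delivery guarantees.

```python
from collections import defaultdict


class Broker:
    """Minimal in-memory message broker illustrating publish-subscribe."""

    def __init__(self):
        # Maps each topic name to the callbacks subscribed to it.
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        """Register interest in a topic; the callback receives each message."""
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        """Deliver a message to every subscriber of the topic.

        Returns the number of subscribers that received it.
        """
        callbacks = list(self._subscribers.get(topic, []))
        for callback in callbacks:
            callback(message)
        return len(callbacks)


broker = Broker()
received = []
broker.subscribe("sensors/temperature", received.append)
broker.publish("sensors/temperature", 21.5)
print(received)
```

The publisher only names a topic and never learns who, if anyone, is listening, which is what allows producers and consumers to scale independently.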

Since data streaming deals with high-velocity data, which is one of the characteristics of big data, many data streaming services also serve as an intermediary data processing engine for big data management. In that case, the data streaming service has a few additional subsystems.

Data Processing Subsystem: This subsystem incorporates something known as a CEP (Complex Event Processing) engine. It is a specialized software system designed to analyze and process data streams in real-time to identify patterns, correlations, events, and relationships across multiple sources. It provides additional capabilities to detect and respond to significant events or conditions as they occur, facilitating timely decision-making and automated actions.
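As a very small illustration of the kind of rule a CEP engine evaluates, the sketch below raises an alert when several readings exceed a threshold within a sliding time window. The class name, the threshold, and the window size are assumptions; production CEP engines offer far richer pattern languages.

```python
from collections import deque


class ThresholdBurstDetector:
    """Flags a burst: at least `min_count` high readings within a time window."""

    def __init__(self, threshold, min_count, window_seconds):
        self.threshold = threshold
        self.min_count = min_count
        self.window_seconds = window_seconds
        self._hits = deque()  # timestamps of readings above the threshold

    def observe(self, timestamp, value):
        """Process one event; return True if the burst pattern is present."""
        if value > self.threshold:
            self._hits.append(timestamp)
        # Drop high readings that have slid out of the time window.
        while self._hits and timestamp - self._hits[0] > self.window_seconds:
            self._hits.popleft()
        return len(self._hits) >= self.min_count


detector = ThresholdBurstDetector(threshold=80, min_count=3, window_seconds=10)
events = [(0, 85), (3, 90), (5, 70), (8, 95), (20, 99)]
print([detector.observe(t, v) for t, v in events])
```

Only the fourth event completes the pattern, because three readings above 80 fall within the same 10-second window; by the fifth event the earlier readings have expired.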

Integration Subsystem: This subsystem enables seamless integration with other data systems, databases, analytics platforms, and visualization tools. This subsystem holds critical importance since most data streaming services do not provide advanced or custom data processing capabilities, and therefore have to offload the data streams to a third-party processing engine to extract insights.

These subsystems mostly operate within the forwarding and subscription phases. Apart from these, there are also important subsystems that take care of administrative aspects such as user management, analytics, reporting, and billing, similar to the case of media streaming.

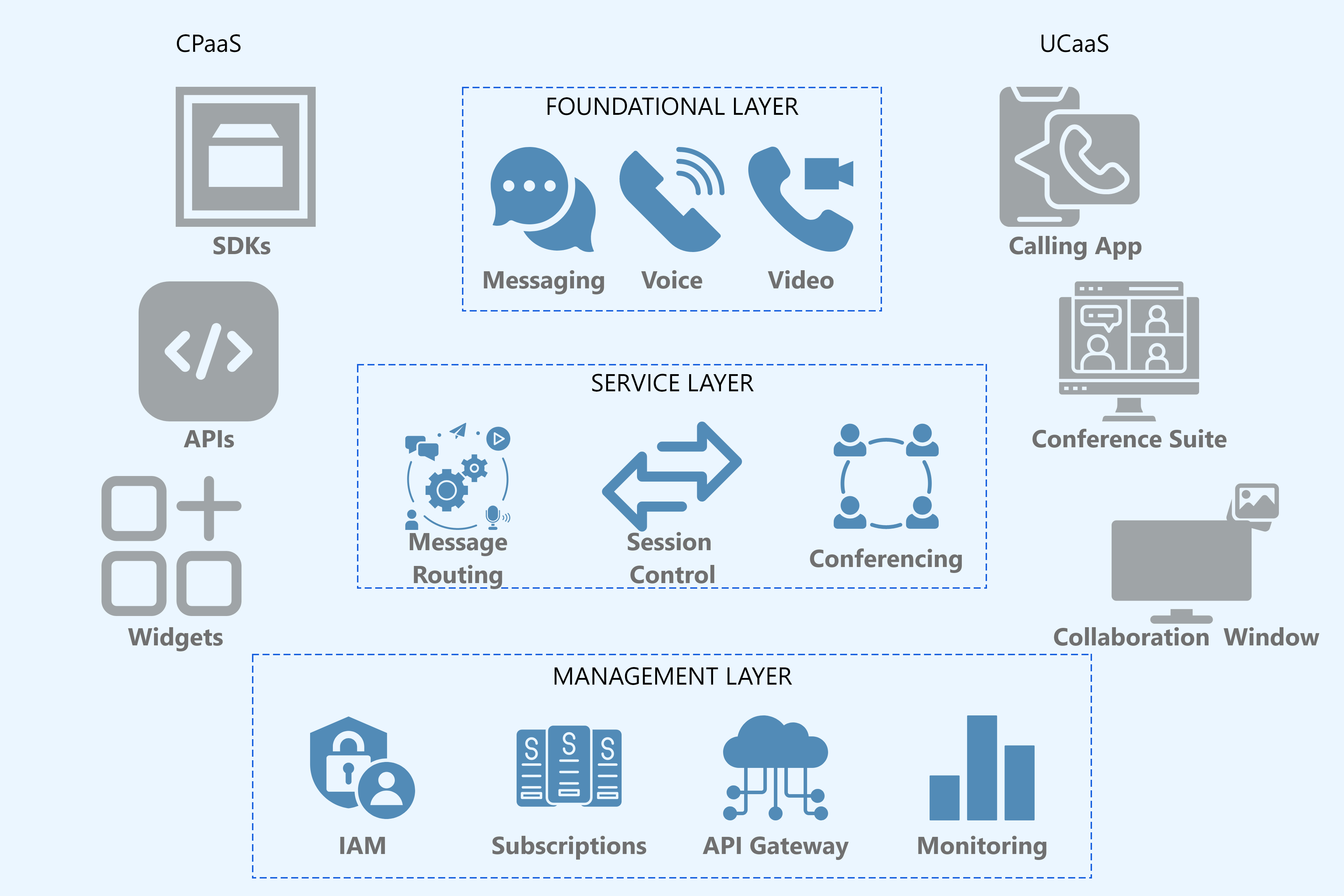

UCaaS & CPaaS Architecture

UCaaS and CPaaS have a similar architecture which mostly differs in the endpoint interfaces for accessing the communication services. In the case of UCaaS, these endpoints are software applications, whereas in CPaaS, these are APIs.

Here are the main layers of any UCaaS/CPaaS architecture:

Foundational Layer

The foundational layer consists of the signaling subsystem for initiating and managing voice/video call sessions and delivering messages. In most cases, the signaling protocol is based on WebRTC or SIP.

WebRTC is the preferred option for peer-to-peer, web-based voice or video calling services. It is fast and efficient, and is backed by standards-based implementations across web browsers. SIP follows a client-server model, wherein SIP endpoints (e.g., softphones, IP phones) communicate with SIP servers (e.g., proxy servers and registrars) to establish and control call sessions.

The main subsystems of the foundational layer are

- Signaling Subsystem: This subsystem is responsible for orchestrating the call session established between two or more parties using one of the signaling protocols. Depending upon the protocol, the composition of this subsystem varies. In the case of WebRTC, the hosted domain of the WebRTC server acts as the orchestrator to initiate and manage the call sessions. Additionally, STUN and TURN servers are used for traversing NAT. In the case of SIP, there is a standardized signaling architecture that consists of a set of SIP proxy servers, SIP registrars, and session border controllers to orchestrate the call sessions. Unlike WebRTC, where the web application’s domain server acts as the orchestrator to route the calls over the Internet, SIP follows the traditional telephone exchange based hierarchical system for call routing across local and remote locations. Therefore, SIP is the preferred option for calls extending beyond the Internet to the traditional landline or mobile network, which is enabled by an additional component within this subsystem, known as the SIP trunking gateway.

- Media Handling Subsystem: In a peer-to-peer call session, the media payloads are directly exchanged between the endpoints, once the signaling is established and media parameters are agreed upon. In such cases, there is no intermediary media server involved. However, in the case of complex call sessions like conference calls with multiple participants, or transcoding between different audio/video codecs, a media server may be used to mix, process, or bridge media streams. Media servers can perform functions like mixing audio streams from multiple participants, converting between different audio/video codecs, or adding features like echo cancellation, noise reduction, or volume normalization.
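To give a feel for the NAT traversal step mentioned for the signaling subsystem, the sketch below builds the 20-byte message an endpoint sends to a STUN server to discover its public address. Only the request is constructed; sending it over UDP and parsing the response are left out, and the function name is an assumption.

```python
import os
import struct

STUN_BINDING_REQUEST = 0x0001   # message type for a Binding request
STUN_MAGIC_COOKIE = 0x2112A442  # fixed value carried in every STUN header


def build_binding_request(transaction_id=None):
    """Build a STUN Binding request with no attributes."""
    if transaction_id is None:
        transaction_id = os.urandom(12)  # random 96-bit transaction ID
    if len(transaction_id) != 12:
        raise ValueError("transaction ID must be exactly 12 bytes")
    # Header layout: type (16 bits), attribute length (16 bits, zero here),
    # magic cookie (32 bits), then the transaction ID.
    header = struct.pack("!HHI", STUN_BINDING_REQUEST, 0, STUN_MAGIC_COOKIE)
    return header + transaction_id


message = build_binding_request()
print(len(message), message[:8].hex())
```

The server's reply echoes the transaction ID and reports the address and port it saw the request arrive from, which the endpoint can then advertise to its peer during signaling.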

Service Layer

The service layer in UCaaS or CPaaS refers to the software layer that extends the foundational communication services and capabilities to applications, users, and devices. It serves as the middleware that sits between the underlying infrastructure (network, servers) of the foundational layer and the communication endpoints or application layer, facilitating communication and collaboration across various channels and devices.

The main subsystems of this layer are

- Session Control Subsystem: This subsystem manages the sessions. In a real-time voice/video call, such control functions include initiation and termination procedures for calls, call routing, signaling, and media negotiation.

- Message Routing Subsystem: This subsystem manages message routing for data, allowing users to exchange chat, text messages, multimedia messages, and files. It brings together messaging features such as instant messaging, group chat, presence indication, message delivery status, and message synchronization across multiple devices.

- Conferencing Subsystem: The conferencing subsystem enables multi-party audio and video conferencing, allowing users to participate in virtual meetings, webinars, and collaboration sessions. It manages these multi-party sessions to enable features such as conference scheduling, invitation management, participant management, media mixing, and conference recording.

This layer also manages additional collaboration functions such as presence, file transfer, screen sharing, and session recording.
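The call-control functions of the session control subsystem described above can be pictured as a small state machine. The sketch below is a simplified illustration, not the signaling of any particular protocol: the state names, event names, and the `negotiate_codec` helper are all assumptions made for this example.

```python
from enum import Enum
from typing import List, Optional, Set


class CallState(Enum):
    IDLE = "idle"
    RINGING = "ringing"
    ACTIVE = "active"
    TERMINATED = "terminated"


# Allowed (state, event) pairs and the state each one leads to.
TRANSITIONS = {
    (CallState.IDLE, "invite"): CallState.RINGING,
    (CallState.RINGING, "answer"): CallState.ACTIVE,
    (CallState.RINGING, "reject"): CallState.TERMINATED,
    (CallState.RINGING, "hangup"): CallState.TERMINATED,
    (CallState.ACTIVE, "hangup"): CallState.TERMINATED,
}


class CallSession:
    """Tracks the lifecycle of one call between two endpoints."""

    def __init__(self, caller: str, callee: str) -> None:
        self.caller = caller
        self.callee = callee
        self.state = CallState.IDLE

    def handle(self, event: str) -> CallState:
        """Apply a signaling event, rejecting any that is invalid right now."""
        key = (self.state, event)
        if key not in TRANSITIONS:
            raise ValueError(f"event {event!r} not allowed in state {self.state.value}")
        self.state = TRANSITIONS[key]
        return self.state


def negotiate_codec(offered: List[str], supported: Set[str]) -> Optional[str]:
    """Pick the first codec in the caller's preference order that the callee supports."""
    for codec in offered:
        if codec in supported:
            return codec
    return None
```

For example, a session that receives `invite`, `answer`, and `hangup` in that order moves from idle to ringing, active, and terminated, while an `answer` on an idle session is rejected.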

Management Layer

The management layer consists of the following subsystems:

- Identity and Access Management (IAM) Subsystem: The IAM subsystem manages user identities, authentication, authorization, and access control for communication services. It ensures secure and controlled access to communication resources, applications, and data, protecting against unauthorized access and data breaches.

- API Gateway Subsystem: The API gateway subsystem provides a unified interface through which endpoint devices access the communication services (in the case of UCaaS) and developers access them through APIs (in the case of CPaaS).

- Subscription Management Subsystem: The Subscription Management Subsystem is responsible for managing customer subscriptions, including plan selection, activation, upgrades, downgrades, and cancellations. It provides self-service portals or APIs for customers to view and manage their subscription details, modify service plans, and update billing information. This subsystem also has an extensive set of modules for handling billing, invoicing, payment processing, revenue management, and integration with ERP and other subsystems.

- Monitoring Subsystem: This subsystem collects, analyzes, and reports on communication usage, performance, and quality of service metrics. It provides insights into communication patterns, user behavior, system health, and service availability, enabling administrators to optimize system performance and troubleshoot issues.
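To make the monitoring subsystem's role more concrete, here is a minimal sketch of how per-call quality samples might be aggregated and degraded calls flagged. The `CallSample` fields and the threshold values are illustrative assumptions, not figures from any specific platform.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class CallSample:
    call_id: str
    packet_loss_pct: float  # percentage of media packets lost
    jitter_ms: float        # variation in packet arrival time


def summarize(
    samples: List[CallSample],
    max_loss_pct: float = 5.0,    # assumed threshold for this sketch
    max_jitter_ms: float = 30.0,  # assumed threshold for this sketch
) -> Dict[str, object]:
    """Aggregate quality metrics and list calls that breach either threshold."""
    if not samples:
        return {
            "calls": 0,
            "avg_packet_loss_pct": None,
            "avg_jitter_ms": None,
            "degraded_calls": [],
        }
    degraded = [
        s.call_id
        for s in samples
        if s.packet_loss_pct > max_loss_pct or s.jitter_ms > max_jitter_ms
    ]
    return {
        "calls": len(samples),
        "avg_packet_loss_pct": mean(s.packet_loss_pct for s in samples),
        "avg_jitter_ms": mean(s.jitter_ms for s in samples),
        "degraded_calls": degraded,
    }
```

A report like this gives administrators both the overall averages and the specific calls worth investigating.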

Prepackaged Application Services

Contact Center Platforms

A contact center platform, also known as CCaaS (Contact Center as a Service), is a cloud-based software platform that enables organizations to manage inbound and outbound communications with customers across multiple channels, such as voice, SMS, email, chat, and social media. These solutions are built on top of CPaaS providers' infrastructure, which offers programmable APIs and communication building blocks to enable real-time communication capabilities.

The user interface of these platforms is designed to onboard agents and let them manage customer interactions across all supported channels. The interface typically includes features such as call controls, message threading, customer context, knowledge base access, and collaboration tools. In this age of AI, many contact center platforms also incorporate automation and self-service capabilities to streamline customer interactions and reduce agent workload. This may include interactive voice response (IVR) systems, chatbots, virtual assistants, and automated workflows for common inquiries. These platforms also route incoming communications to the appropriate agents or teams based on predefined routing rules. Intelligent routing algorithms consider factors such as agent availability, skill sets, customer history, and channel preferences to ensure efficient handling of inquiries.
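The routing factors listed above can be illustrated with a small skill-based routing sketch. It assumes a hypothetical `Agent` record and a simple priority order: only available agents with the required skill are considered, an agent who handled the customer before is preferred, and ties go to the agent with the fewest open interactions. Real routing engines weigh many more signals.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Agent:
    name: str
    skills: Set[str]
    available: bool = True
    open_interactions: int = 0


def route(
    required_skill: str,
    agents: List[Agent],
    preferred_agent: Optional[str] = None,
) -> Optional[Agent]:
    """Pick an agent for an incoming interaction, or None if nobody qualifies."""
    candidates = [a for a in agents if a.available and required_skill in a.skills]
    if not candidates:
        return None
    # False sorts before True, so the preferred agent (if eligible) wins;
    # otherwise the least-loaded candidate is chosen.
    return min(
        candidates,
        key=lambda a: (a.name != preferred_agent, a.open_interactions),
    )
```

Returning `None` when no agent qualifies leaves it to the caller to decide whether to queue the interaction or fall back to a self-service flow.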

Omnichannel Digital Marketing Platforms

Omnichannel digital marketing platforms offer businesses the ability to engage with customers across multiple channels while leveraging communication APIs and infrastructure provided by CPaaS or UCaaS providers. These platforms are designed to offer custom communication modes to engage with customers, such as:

- Multi-Channel Messaging: Support for sending personalized messages across various channels, including SMS, MMS, email, voice, push notifications, social media, and in-app messaging, with additional support for two-way message exchange.

- Bulk Messaging: Support for sending large volumes of messages simultaneously to a customer base across multiple channels, including SMS, email, voice, and push notifications.

- Voice Broadcast: Bulk voice messaging enables businesses to deliver pre-recorded voice messages to customers' phone numbers. This can be used for announcements, reminders, surveys, and interactive voice response (IVR) systems.

In addition to the communication features, such platforms also have supplementary features for marketing campaign management, including tools for designing, scheduling, and managing campaigns across multiple channels. This includes A/B testing, campaign tracking, and performance analytics. Another important aspect of campaign management is the contact list of customers, leads, or prospects. Therefore, tools to manage customer contact information, segment audiences, and maintain a centralized database of customer profiles and preferences are also part of the platform.

For better interoperability and programmability, these platforms also extend these capabilities to third-party applications via APIs and SDKs.
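The audience segmentation, bulk sending, and A/B testing features described above could be combined along the following lines. This is a minimal sketch: the contact dictionary layout and the three helper names are assumptions made for this example, and actual delivery through a provider's API is deliberately left out.

```python
import hashlib
from typing import Dict, Iterator, List, Sequence


def segment(contacts: List[Dict], channel: str, **criteria) -> List[Dict]:
    """Keep contacts that opted in to `channel` and match every criterion."""
    return [
        c
        for c in contacts
        if channel in c.get("channels", [])
        and all(c.get(key) == value for key, value in criteria.items())
    ]


def batches(items: Sequence, size: int) -> Iterator[Sequence]:
    """Split a recipient list into fixed-size batches for bulk sending."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def assign_variant(contact_id: str, variants: Sequence[str] = ("A", "B")) -> str:
    """Deterministically assign a contact to an A/B test variant.

    Hashing the id means the same contact always sees the same variant.
    """
    digest = hashlib.sha256(contact_id.encode("utf-8")).digest()
    return variants[digest[0] % len(variants)]
```

A campaign would typically segment first, assign each selected contact a variant, and then send the resulting list batch by batch to stay within whatever rate limits the underlying messaging provider imposes.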

Legacy Integrations

Integration with telecommunication infrastructure for provisioning local, toll-free, or virtual phone numbers in multiple countries or regions is a common requirement for businesses setting up offices in various geographies. Full-stack UCaaS/CPaaS platforms enable such integrations to establish a global presence and facilitate international communication across the Internet and traditional mobile/PSTN networks.

Automation

Thanks to advances in AI, cloud communication services are adopting many of its capabilities to offer standout features, such as:

- AI-Powered Video Analytics: Automatic video analysis that performs facial analysis to estimate a person's demographic attributes and emotional state.

- AI-Powered Speech Recognition: Automatic speech recognition (ASR) technology that converts spoken language into text, enabling transcription, voice commands, and voice-to-text functionality in collaboration applications.

- Interactive Voice Response (IVR): Interactive voice response systems that enable automated phone-based interactions with callers, guiding them through menus, collecting input, and routing calls to the appropriate destination.