When Marc Andreessen described the phenomenon of “software eating the world,” he was referring to the countless industries being disrupted by software-based applications. Cloud computing is one of the crucial technological breakthroughs that made such a leap possible. It shattered the physical, geographical, and economic constraints of launching a software product.

To understand cloud computing, it is important first to look at how it evolved and how it came to fulfill the needs of enterprises, big and small. This requires an understanding of the fundamental cloud infrastructure, which is the central theme of this article. The article also examines how cloud infrastructure serves the needs of users and applications, along with the service, deployment, and pricing models that make cloud computing appear like magic.

What is meant by Cloud or Cloud Computing?

The term “Cloud” was presumably coined to describe the assorted set of computers connected to a network as part of a distributed computing system. In the technical literature on computer networking, a cloud outline has historically been used to depict a complex network whose internal details are not shown. As a result, the terms “Cloud” and “Cloud Computing” gained currency in various internal and external industry forums of computer, communication, and Internet companies between 1995 and 2005. Most notably, former Google CEO Eric Schmidt used the term at a conference in 2006, which is believed to be the reason for its subsequent popularity.

The basic idea of Cloud Computing is an array of servers deployed in a distributed way over the Internet. These servers work as a cohesive system that provides the computing power to run software, which serves end users through applications and services.

Evolution of Cloud Computing

Before getting into the intricacies of cloud infrastructure, let us briefly review the journey of computing infrastructure that led to the present-day concept of cloud computing. The earliest precursor of a cloud computing system took the form of mainframe computers in the 1960s. They were big, bulky, and expensive machines that only large corporations could afford. These were standalone computers for performing specialized computing jobs, and users logged in through remote terminals to access applications under a timesharing arrangement.

Subsequent advancements in computer hardware led to several scaled-down versions of mainframe computers. Because they were less powerful than mainframes, they were first regarded as mid-sized machines and eventually became known as minicomputers. The term mini does not imply the miniaturization of computers into desktops, laptops, or palmtops seen in the current era, which dawned with the advent of personal computers.

The early evolution of cloud computing took the form of distributed computing systems, in which multiple minicomputers were connected over a network to share resources and data more effectively. With the advent of standardized LAN hardware interfaces in the 1980s, personal computers were also added to distributed computing infrastructure, and they eventually replaced the minicomputers. That is because personal computers offered far more computing power for their size and cost. This was made possible by advances in microprocessor technology and by cheap, commoditized hardware components that could be assembled into a system small enough to sit on a desk.

Today's cloud infrastructure is the improved and perfected form of the distributed systems of yesteryear. It has been refined through several iterations over decades, and its hardware has been systematically replaced by compact servers managed by a software layer. Additionally, the timesharing technique of the mainframe era has been reinvented as the Software-as-a-Service (SaaS) model, wherein the software layer hosts a service that is offered through cloud computing.

Fundamental Units of Cloud Infrastructure

Any cloud infrastructure typically offers different types of resources to users. These resources fall mainly under Compute, Storage, and Networking.

Compute

Compute is the most prevalent resource within a cloud computing environment. It represents the raw computing power that can execute instructions for a program to perform some processing.

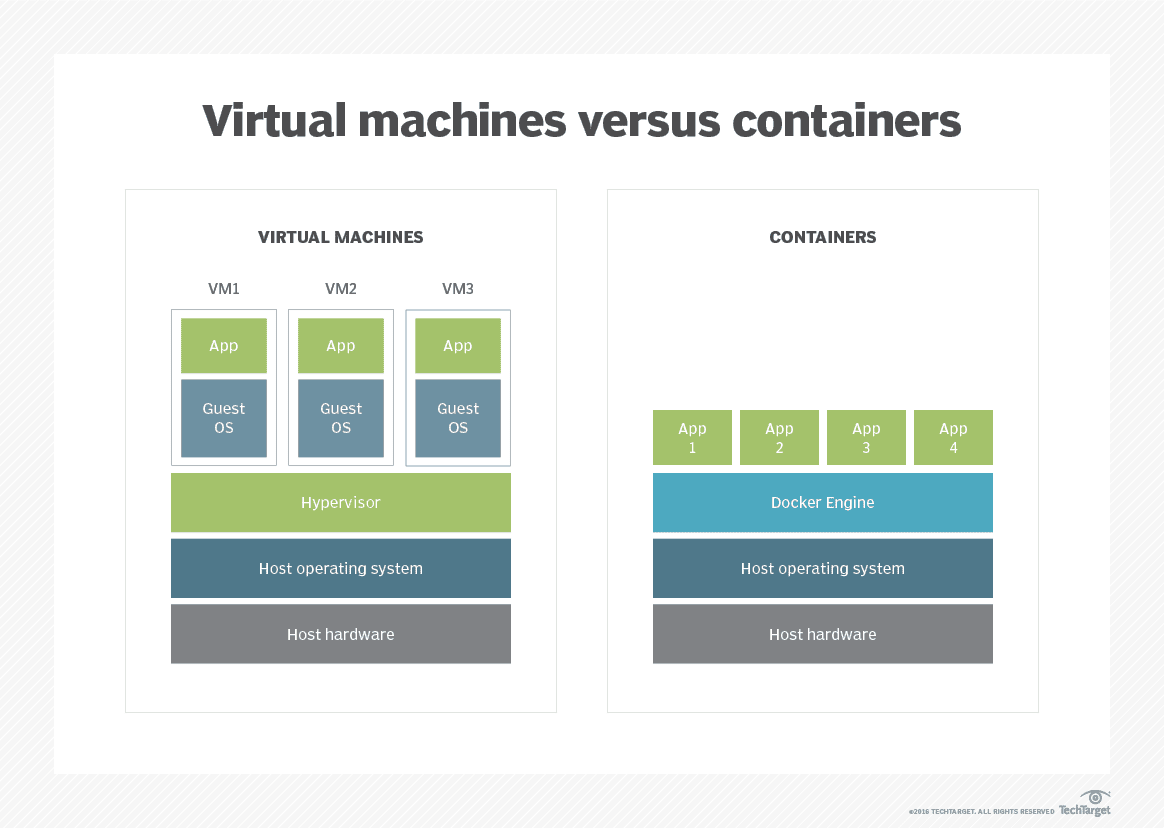

However, in the context of cloud computing, this computing power is not deployed simply as a physical computer. Provisioning compute resources by physically installing hardware does not scale and cannot be fully automated. The technology that allows compute resources to scale rests on two concepts: Virtualization and Containerization.

Virtualization is a process that creates a virtual version of a compute resource or device, such as a server. It uses a combination of software and firmware layers over the physical hardware to spawn multiple virtual computing environments. Each of these environments behaves like a separate physical computer with its own operating system. In hardware virtualization, a hypervisor or Virtual Machine Monitor (VMM) abstracts the host computer's hardware and presents it to guest virtual machines. Each guest runs an operating system and applications just like a physical computer.
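To make the idea of carving one host into several guests concrete, here is a minimal Python sketch. It models a hypervisor purely as an allocator that hands out slices of a host's CPUs and memory to guest VMs, each with its own OS. The `Host` and `VirtualMachine` classes are hypothetical illustrations, not any real hypervisor's API. Real hypervisors such as KVM or Xen also virtualize devices, schedule vCPUs, isolate memory, and may overcommit resources, and none of that is modeled here.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualMachine:
    name: str
    vcpus: int
    memory_gb: int
    guest_os: str  # every VM carries its own operating system


@dataclass
class Host:
    """A physical server whose CPUs and memory a toy hypervisor carves up."""
    cpus: int
    memory_gb: int
    vms: list = field(default_factory=list)

    def free_cpus(self) -> int:
        return self.cpus - sum(vm.vcpus for vm in self.vms)

    def free_memory_gb(self) -> int:
        return self.memory_gb - sum(vm.memory_gb for vm in self.vms)

    def create_vm(self, name: str, vcpus: int, memory_gb: int, guest_os: str) -> VirtualMachine:
        # Refuse the request if the host cannot back it with physical resources.
        # (Real hypervisors can overcommit CPU; this sketch deliberately does not.)
        if vcpus > self.free_cpus() or memory_gb > self.free_memory_gb():
            raise RuntimeError(f"insufficient capacity for {name}")
        vm = VirtualMachine(name, vcpus, memory_gb, guest_os)
        self.vms.append(vm)
        return vm


host = Host(cpus=32, memory_gb=128)
host.create_vm("web", vcpus=8, memory_gb=32, guest_os="Ubuntu")
host.create_vm("db", vcpus=16, memory_gb=64, guest_os="Windows Server")
print(host.free_cpus(), host.free_memory_gb())  # 8 32
```

Automating the whole process behind a call like `create_vm` is what lets a cloud provider provision compute on demand without touching hardware.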

Containers allow applications to run in an isolated environment with resource limits prescribed at the time of their creation. A container runtime engine, such as Docker, provides the resources needed to run a containerized app as specified in its container image. The runtime starts the app in a standard, secure, and isolated environment, so that it runs according to the same predefined rules no matter where it is deployed. This isolation also protects the app from unauthorized entities, so that other running processes do not affect it.

Source: https://www.techtarget.com/searchitoperations/tip/Explore-the-benefits-of-containers-on-bare-metal-vs-on-VMs

Virtualization and Containerization are different approaches to deploying multiple computing environments within a single physical system, and they operate at different levels. Virtualization creates virtual machines (VMs): complete, standalone environments that each include a full copy of an operating system (OS) along with the application software. In contrast, containerization is a lighter-weight approach in which containers share the host system's operating system kernel and encapsulate only the application and its dependencies into a self-contained unit.
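The "lighter-weight" difference can be illustrated with simple arithmetic. In the Python sketch below, each VM pays for its own guest OS, while containers pay only for the application on top of a shared host OS. All memory figures are invented for illustration only. Real overheads depend heavily on the OS image, the hypervisor, and the container runtime, and the small per-container runtime overhead is ignored.

```python
# Hypothetical memory figures for illustration only; real values vary widely.
HOST_OS_MEMORY_GB = 1.0   # assumed overhead of the single host OS / hypervisor layer
GUEST_OS_MEMORY_GB = 1.0  # assumed overhead of one full guest OS inside each VM
APP_MEMORY_GB = 0.5       # assumed memory used by each application


def vm_footprint(num_apps: int) -> float:
    # Each app runs in its own VM, so each one also carries a full guest OS.
    return HOST_OS_MEMORY_GB + num_apps * (GUEST_OS_MEMORY_GB + APP_MEMORY_GB)


def container_footprint(num_apps: int) -> float:
    # Containers share the host kernel, so only the app and its dependencies are added.
    return HOST_OS_MEMORY_GB + num_apps * APP_MEMORY_GB


for n in (1, 10):
    print(n, vm_footprint(n), container_footprint(n))
# 1 2.5 1.5
# 10 16.0 6.0
```

Under these assumptions, the gap grows linearly with the number of workloads, because the per-workload guest OS cost is exactly what containers avoid.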

Storage

All applications need their data to be persisted so that it does not disappear when the application is restarted or undergoes upgrades or migrations. In cloud computing terminology, this is generally referred to as storage, and it is typically deployed as persistent storage that hosts a filesystem or a database.

This storage is provisioned on hard disk or solid-state storage hardware, and magnetic tape is also used for large-scale data archival. Many such clusters of storage hardware are combined into a cloud storage infrastructure, on which cloud data storage, data warehousing, and data lake systems are built. A wrapper over these storage systems, implemented using various modern tools, offers a standard cloud storage service interface. Such interfaces provide additional features, such as auto-provisioning to align with auto-scaling, data security, and backup/restore options.

Storage can also be offered through virtualization: physical storage from multiple network storage devices is pooled into what appears to be a single storage device.
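The pooling idea can be sketched in a few lines of Python. The hypothetical `StoragePool` class below presents several physical devices as one logical volume by concatenating their address spaces. This scheme is sometimes called spanning or JBOD, and it was chosen here only because it is the simplest to follow. Production storage virtualization typically also stripes, replicates, and rebalances data across networked devices, and that is not modeled here.

```python
from typing import Dict, List, Optional, Tuple


class StoragePool:
    """Toy storage virtualization: several physical devices appear as one volume."""

    def __init__(self, device_sizes: List[int]):
        # Number of blocks on each physical device, in pool order.
        self.device_sizes = list(device_sizes)
        # One block map per device: physical block number -> stored bytes.
        self.devices: List[Dict[int, bytes]] = [{} for _ in self.device_sizes]

    @property
    def capacity(self) -> int:
        # The logical volume is as large as all member devices combined.
        return sum(self.device_sizes)

    def locate(self, logical_block: int) -> Tuple[int, int]:
        # Map a logical block to (device index, physical block) by walking the
        # devices in order (simple concatenation, no striping or replication).
        if not 0 <= logical_block < self.capacity:
            raise IndexError("logical block out of range")
        offset = logical_block
        for index, size in enumerate(self.device_sizes):
            if offset < size:
                return index, offset
            offset -= size
        raise AssertionError("unreachable")

    def write(self, logical_block: int, data: bytes) -> None:
        device, physical_block = self.locate(logical_block)
        self.devices[device][physical_block] = data

    def read(self, logical_block: int) -> Optional[bytes]:
        # Returns None for blocks that have never been written.
        device, physical_block = self.locate(logical_block)
        return self.devices[device].get(physical_block)


pool = StoragePool([100, 50, 200])  # three devices, sizes in blocks
print(pool.capacity)                # 350
print(pool.locate(120))             # (1, 20)
pool.write(120, b"hello")
print(pool.read(120))               # b'hello'
```

The caller only ever sees logical block numbers from 0 to 349. The fact that block 120 actually lives on the second device is hidden behind the pool.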

Networking

A network resource is a combination of hardware and software that facilitates communication and messaging between multiple cloud resources. These are typically specialized compute resources that perform networking functions such as message routing, forwarding, and firewall operations.

Network resources play an important role in integrating an application.

Source: https://blog.knoldus.com/know-about-cloud-computing-architecture/

Altogether, cloud networking resources bind the various components of an application running as separate computing entities across servers, mobile devices, databases, and external cloud services.

These resources are deployed as load balancers, application gateways, VPNs, and CDNs. Some of them also act as a layer over application traffic, such as routing and DDoS protection layers.
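As an example of one such resource, the following Python sketch shows the core of a round-robin load balancer. It hands each incoming request to the next healthy backend in turn and skips backends that have been marked down. The class name and the backend addresses are hypothetical. Real cloud load balancers add health probes, weighting, session affinity, and TLS termination, none of which is shown here.

```python
import itertools


class RoundRobinBalancer:
    """Toy load balancer that spreads requests evenly across healthy backends."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._cycle = itertools.cycle(self.backends)

    def mark_down(self, backend):
        # e.g. after a failed health check
        self.healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self.backends:
            self.healthy.add(backend)

    def next_backend(self):
        # Walk the rotation, skipping unhealthy backends; give up after one full pass.
        for _ in range(len(self.backends)):
            candidate = next(self._cycle)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends")


lb = RoundRobinBalancer(["10.0.0.1", "10.0.0.2", "10.0.0.3"])
print([lb.next_backend() for _ in range(4)])
# ['10.0.0.1', '10.0.0.2', '10.0.0.3', '10.0.0.1']
lb.mark_down("10.0.0.2")
print([lb.next_backend() for _ in range(3)])
# ['10.0.0.3', '10.0.0.1', '10.0.0.3']
```

Round-robin is used here only because it is the simplest balancing policy. Least-connections or latency-based policies follow the same structure with a different selection rule.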

Different Forms of Cloud Infrastructure

A cloud infrastructure comprises an amalgamation of one or more types of cloud resources bundled as a service. These services are provisioned in different ways to make them accessible over the Internet and meet customers' demands. They offer easy and affordable access to cloud resources without the overheads of on-premises infrastructure or provisioning of hardware or software systems.

Infrastructure-as-a-Service (IaaS)

IaaS is a cloud service in which a particular type of cloud resource is rented out as a bare-bones system to customers to deploy and run their applications. The essence of IaaS is to offer customers the hardware and the essential software stack, such as the OS and networking layers. This way, customers avoid most of the cost of purchasing, building, and upgrading their own hardware and OS licenses.

Beyond basic IaaS, providers can offer additional features over the infrastructure to manage scale, availability, and redundancy. Managed cloud infrastructure hosting takes care of these overheads through cloud middleware, packaging an IaaS offering in customized ways.

One of the newer breeds of IaaS is built around the concept of cloud-native. Cloud-native applications are designed to take full advantage of the cloud's elasticity, which allows them to be deployed more rapidly and efficiently without being constrained by hardware and OS dependencies. Elasticity means that the cloud resources required to host an application can be scaled up or down rapidly and efficiently. Containerization technology provides this flexibility. Therefore, cloud-native applications are typically deployed as containers in an orchestrated setup that is consistent across different cloud providers.
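Elastic scaling of containers is usually driven by a simple control rule. The Python sketch below uses the ratio rule documented for the Kubernetes Horizontal Pod Autoscaler: `ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The result is clamped to configured bounds. The function name, the choice of CPU utilization as the metric, and the default bounds are assumptions for illustration. Real autoscalers also apply tolerances and stabilization windows, which are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_cpu: float, target_cpu: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Scale the number of container replicas toward a target CPU utilization.

    Ratio rule: ceil(current_replicas * current_cpu / target_cpu), clamped to
    [min_replicas, max_replicas]. Tolerances and cooldowns are not modeled.
    """
    raw = math.ceil(current_replicas * current_cpu / target_cpu)
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(4, current_cpu=90, target_cpu=60))  # 6  (scale out)
print(desired_replicas(4, current_cpu=20, target_cpu=60))  # 2  (scale in)
print(desired_replicas(8, current_cpu=95, target_cpu=50))  # 10 (capped at max)
```

Because containers start quickly and are interchangeable, an orchestrator can apply a rule like this every few seconds. Doing the same with full VMs would be much slower.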

Platform-as-a-Service (PaaS)

PaaS is a form of service that sits one level of abstraction above IaaS. While using IaaS, developers still have to perform many activities to deploy and host the underlying dependencies for an application. PaaS provides a ready-made layer of services that speeds up most of the workflows associated with deploying cloud resources. For example, when using IaaS to host a database, developers have to install the database software on a compute resource and provision the database on a storage resource. With PaaS, they get a managed database service that can be deployed at the click of a button, without worrying about the underlying cloud resources. More broadly, PaaS can be viewed as a complete engine for developers to design, build, deploy, monitor, and maintain applications on the cloud.

On the one hand, the common PaaS services provide a customized, vertically integrated software stack over IaaS. On the other hand, there are also some specialized PaaS offerings, such as:

- iPaaS: Integration Platform as a Service is a cloud-based service that enables businesses to develop, execute, and govern the integration flows connecting various applications and data sources across cloud-based and on-premises environments. Most iPaaS use cases center on data and process integration, real-time communication, and security. In many ways, iPaaS is a more concrete implementation of a networking resource.

- aPaaS: Application Platform as a Service is a cloud service that provides a platform for users to develop, run, and manage applications without the complexity of building and maintaining the infrastructure typically associated with developing and deploying an application. aPaaS can support the complete web application lifecycle: building, testing, deploying, and managing applications. These services are primarily designed to allow programmatic control over development and deployment while abstracting away the complexities of managing cloud resources. This way, developers can focus on their workflow rather than dealing with the servers, storage, networks, and databases needed to run the application.

- xPaaS: "xPaaS" is a collective term for the whole variety of "Platform as a Service" (PaaS) offerings available. The "x" stands for anything and can be replaced by a particular cloud resource combination or service offering. Well-known examples include dbPaaS (Database Platform-as-a-Service), mPaaS (Mobile Platform-as-a-Service), and bpPaaS (Business Process Platform-as-a-Service).

Beyond IaaS and PaaS, there is SaaS, which sits one level of abstraction above PaaS. SaaS offers a fully functioning software product, delivered via a web-based or API-driven interface, without programmable options to customize the underlying cloud resources. Therefore, SaaS is meant to be used by end users rather than by developers who would otherwise tweak the underlying cloud resources.

In the context of cloud infrastructure, SaaS acts as a provisioning and monitoring interface for managing the underlying cloud resources. This is distinct from the plethora of general-purpose SaaS applications available across hundreds of categories, such as finance, travel, productivity, office, creative, and more.

Cloud Infrastructure Deployment Models

The deployment model governs how the physical infrastructure for the cloud is managed. Several deployment strategies exist to meet the specific needs of enterprises and indie developers alike. Each model also offers a different level of control and flexibility for configuring the various aspects of cloud computing resources and services.

Public Cloud

A public cloud deployment leverages a third-party cloud service provider. Amazon Web Services, Microsoft Azure, and Google Cloud are the notable players in this space. Deployment on a public cloud entails subscribing to shared cloud resources and platforms hosted by one of these providers.

The public cloud offers on-demand scalability and requires no on-site maintenance. At the same time, it can lock customers in to the provider, and it can lead to higher costs over time if the cloud resources are not managed well.

Private Cloud

In this deployment model, the physical infrastructure hosting the cloud resources is owned or exclusively controlled by the organization deploying its applications, rather than shared through a public cloud service provider. Maintaining this infrastructure is therefore the organization's responsibility. It is typically hosted on-premises or on dedicated infrastructure leased from a cloud provider.

The private cloud deployment model becomes necessary when regulations, such as data security requirements, demand it. In terms of capabilities, this model is almost the same as the public cloud. However, it is typically costlier than the public cloud, even though it offers more flexibility and customization.

Hybrid Cloud

A hybrid cloud deployment model blends public and private cloud deployment models. In other words, this model allows organizations to host some applications or data in the public cloud while keeping other applications or data in a private cloud or on-premises environment. Both deployments are unified using an orchestration layer to create a scalable environment that takes advantage of all a public cloud infrastructure can provide while maintaining control over mission-critical data.

With a hybrid model, companies can utilize the cost-efficiency of public clouds for less-sensitive operations and reserve their private cloud for important operations, optimizing their IT budget. But at the same time, implementing a hybrid cloud requires considerable technical expertise. It requires managing and integrating multiple platforms, which can add complexity to the underlying IT infrastructure.
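The split described above amounts to a placement policy, applied by the orchestration layer, that decides where each workload runs. The Python sketch below encodes one hypothetical policy: anything handling regulated data or deemed mission-critical stays in the private cloud, and everything else goes to the public cloud. The `Workload` fields, the rule, and the workload names are all assumptions for illustration. Real hybrid orchestrators weigh many more factors, such as latency, cost, data gravity, and compliance scope.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    handles_regulated_data: bool
    mission_critical: bool


def choose_environment(workload: Workload) -> str:
    """Hypothetical hybrid-cloud placement rule."""
    # Sensitive or critical workloads stay under the organization's direct control.
    if workload.handles_regulated_data or workload.mission_critical:
        return "private"
    # Everything else uses the public cloud for elasticity and lower cost.
    return "public"


workloads = [
    Workload("patient-records-db", handles_regulated_data=True, mission_critical=True),
    Workload("marketing-site", handles_regulated_data=False, mission_critical=False),
    Workload("batch-analytics", handles_regulated_data=False, mission_critical=False),
]
for w in workloads:
    print(w.name, "->", choose_environment(w))
```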

Serverless Deployment

Although it is not a model for deploying an entire cloud infrastructure, serverless is still important when deploying compute resources. Serverless, also known as Function as a Service (FaaS), is a cloud computing deployment model in which the cloud provider dynamically manages the allocation of compute resources without associating them with a specific server.

The term "serverless" is somewhat misleading because servers are still involved in hosting applications. However, the responsibility for provisioning, managing, and maintaining servers is entirely up to the cloud provider. The user is abstracted from the underlying infrastructure. This allows applications to be scaled automatically and lets developers concentrate on the business logic of their software rather than infrastructure management.
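In practice, the developer's entire deliverable is often just a function. The Python sketch below follows the handler convention used by AWS Lambda's Python runtime, where the platform calls a function with an `event` and a `context` argument. The event shape (a JSON `body` containing order items) and the business logic are hypothetical. The local call at the bottom stands in for the invocation that the cloud provider would normally perform.

```python
import json


def handler(event, context):
    """Business logic only: no server setup, ports, or process management.

    The platform invokes this function once per event and decides by itself
    how many instances to run concurrently. The event shape is hypothetical.
    """
    order = json.loads(event["body"])
    total = sum(item["price"] * item["qty"] for item in order["items"])
    return {"statusCode": 200, "body": json.dumps({"total": total})}


# Local invocation for testing; in production the cloud provider calls handler().
sample_event = {"body": json.dumps({"items": [{"price": 2.5, "qty": 4},
                                              {"price": 10, "qty": 1}]})}
print(handler(sample_event, context=None))
# {'statusCode': 200, 'body': '{"total": 20.0}'}
```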

Serverless offers a much more granular pricing structure, wherein users are billed for actual consumption, based on the execution time of their code (typically weighted by the memory allocated to it) and the number of invocations.
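As a worked example of this granular billing, the Python sketch below computes a monthly serverless bill the way many FaaS platforms express it. Execution time is multiplied by allocated memory to give GB-seconds, and a small per-request fee is added. Both unit prices are assumptions for illustration rather than any provider's current list price. Free tiers and per-millisecond rounding rules are ignored.

```python
# Assumed unit prices for illustration; check your provider's current price list.
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_MILLION_REQUESTS = 0.20


def serverless_cost(invocations: int, avg_duration_ms: float, memory_mb: int) -> float:
    # Duration is billed as allocated memory (GB) x execution time (seconds).
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute = gb_seconds * PRICE_PER_GB_SECOND
    requests = invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return round(compute + requests, 2)


# 3 million invocations a month, 120 ms each, with 512 MB of memory allocated.
print(serverless_cost(3_000_000, avg_duration_ms=120, memory_mb=512))  # 3.6
```

Under these assumptions, the bill is zero when the function is never invoked. That is the main contrast with paying for an always-on server.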

Pricing Models for Cloud Infrastructure

Since most decisions surrounding cloud infrastructure boil down to cost, pricing models play an important role in arriving at the optimum approach for infrastructure deployment.

Additional cost elements are also associated with individual cloud resources, such as data backup costs for storage, data transfer costs for networking, etc. Depending upon the overlaid PaaS and the variety of services offered by the cloud providers, the pricing structure can get more complicated.

Pay As You Go

This is the most prevalent pricing model, in which cloud resources like compute, storage, and networking are billed based on actual usage, measured differently for each resource type. For storage, billing is based on the average amount stored over a period of time. For compute, it is based on hours of instance uptime, or on the number of seconds code executes on a serverless platform.
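The Python sketch below shows how such a usage-based bill adds up. Storage is charged on the average amount held during the month, and compute is charged per instance-hour. The unit prices and usage figures are hypothetical, chosen only to keep the arithmetic easy to follow.

```python
def average_storage_gb(daily_usage_gb):
    # Storage is billed on the average amount held over the billing period.
    return sum(daily_usage_gb) / len(daily_usage_gb)


def monthly_bill(daily_usage_gb, instance_hours,
                 storage_price_per_gb_month, compute_price_per_hour):
    storage_cost = average_storage_gb(daily_usage_gb) * storage_price_per_gb_month
    compute_cost = instance_hours * compute_price_per_hour
    return round(storage_cost + compute_cost, 2)


# 30-day month: 100 GB stored for the first 15 days, 300 GB for the last 15.
usage = [100] * 15 + [300] * 15
print(average_storage_gb(usage))  # 200.0
# One instance running all month (720 h) at assumed prices.
print(monthly_bill(usage, instance_hours=720,
                   storage_price_per_gb_month=0.02,
                   compute_price_per_hour=0.05))  # 40.0
```

Nothing is paid up front. If the instance is stopped or the data deleted, the next bill shrinks accordingly.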

This model has the advantage that users pay only for actual usage, without investing beforehand. This is attractive for small to medium-sized companies, as they can quick-start their businesses on the cloud without upfront capital investment. The downside is that costs scale directly with usage and come with no commitment discounts. For sustained, long-running workloads, the bill can therefore end up considerably higher than under fixed or reserved pricing.

Fixed Pricing

This is a fixed-cost subscription model, mostly applicable to platforms and services. This cost model is popular among PaaS cloud services, which combine multiple software and hardware elements. It is usually combined with the Pay As You Go model for bundled offerings. For example, a managed database platform may charge a fixed cost for management plus a Pay As You Go cost for data storage. Companies that commit to a certain subscription length usually opt for this model to get discounts on overall pricing.
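The bundled case of a fixed management fee plus metered storage can be sketched as follows. The fee, the per-GB price, and the way the commitment discount applies (here, to the fixed portion only) are all hypothetical assumptions, since providers structure such discounts in different ways.

```python
def bundled_monthly_cost(fixed_management_fee, storage_gb, price_per_gb,
                         commitment_discount=0.0):
    """Fixed platform fee plus metered storage.

    Assumes (hypothetically) that a subscription commitment discounts only
    the fixed portion of the bill.
    """
    fixed = fixed_management_fee * (1 - commitment_discount)
    metered = storage_gb * price_per_gb
    return round(fixed + metered, 2)


print(bundled_monthly_cost(200, storage_gb=500, price_per_gb=0.10))  # 250.0
print(bundled_monthly_cost(200, storage_gb=500, price_per_gb=0.10,
                           commitment_discount=0.15))                # 220.0
```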

Reserved Pricing

In this model, users reserve a certain amount of cloud resources for a long period, say one to three years. In exchange for the commitment, the unit cost is lower, and companies can draw on a pool of reserved resources for building their applications. This model suits projects with long-term horizons and can be combined with the Pay As You Go model to access extra resources during peak loads.
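Two questions usually drive the choice of reserved pricing: how much of the term a resource must be used for the reservation to pay off, and what a mixed setup costs. The Python sketch below answers both. In the mixed setup, a reserved baseline runs every hour and on-demand capacity covers the peaks. All rates and demand figures are hypothetical. The sketch also assumes that the reservation is paid for every hour of the term whether it is used or not.

```python
def break_even_utilization(on_demand_rate, reserved_rate):
    # A reservation is paid for every hour of the term; on-demand only when used.
    # Reserving is cheaper once utilization exceeds reserved_rate / on_demand_rate.
    return reserved_rate / on_demand_rate


def blended_cost(reserved_instances, hourly_demand, on_demand_rate, reserved_rate):
    # Reserved capacity is billed every hour; demand above it is billed on demand.
    reserved = reserved_instances * len(hourly_demand) * reserved_rate
    burst_hours = sum(max(0, d - reserved_instances) for d in hourly_demand)
    return round(reserved + burst_hours * on_demand_rate, 2)


# Hypothetical rates: $0.10/h on demand vs $0.06/h effective when reserved.
print(round(break_even_utilization(0.10, 0.06), 2))  # 0.6

# Instances needed in each of four hours, with 2 instances reserved.
demand = [2, 2, 5, 3]
print(blended_cost(2, demand, on_demand_rate=0.10, reserved_rate=0.06))  # 0.88
print(round(sum(demand) * 0.10, 2))  # 1.2 if everything ran on demand
```

Under these assumed rates, a reservation pays off only for resources running more than about 60% of the time. That is why it fits steady baseline load, while Pay As You Go absorbs the peaks.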

Authorship / Research Credits