Cloud middleware refers to any software or hardware that enhances the capabilities of cloud computing infrastructure for hosting an application. These applications can range from hosted web apps to any software platform deployed using the "as-a-service" cloud hosting model.

What is Cloud Middleware?

Cloud middleware is a wrapper around the bare-bones cloud infrastructure. A basic cloud infrastructure only provides compute, storage, and networking facilities. However, the infrastructure undergoes frequent realignment and expansion due to traffic demands, security, and other deployment-related complexities. Cloud middleware is responsible for managing these dynamic characteristics of the infrastructure to maintain reliability and avoid downtime.

Cloud middleware is an invisible layer between the user and the cloud infrastructure. It performs multiple roles depending on the use case. The typical roles vary from being an orchestrator for fine-tuning the infrastructure components for optimum quality of service to ensuring interoperability with third-party systems to augment the infrastructure capabilities in specific ways. Cloud middleware is also important in managing geographical complexity, security, and operational workflows.

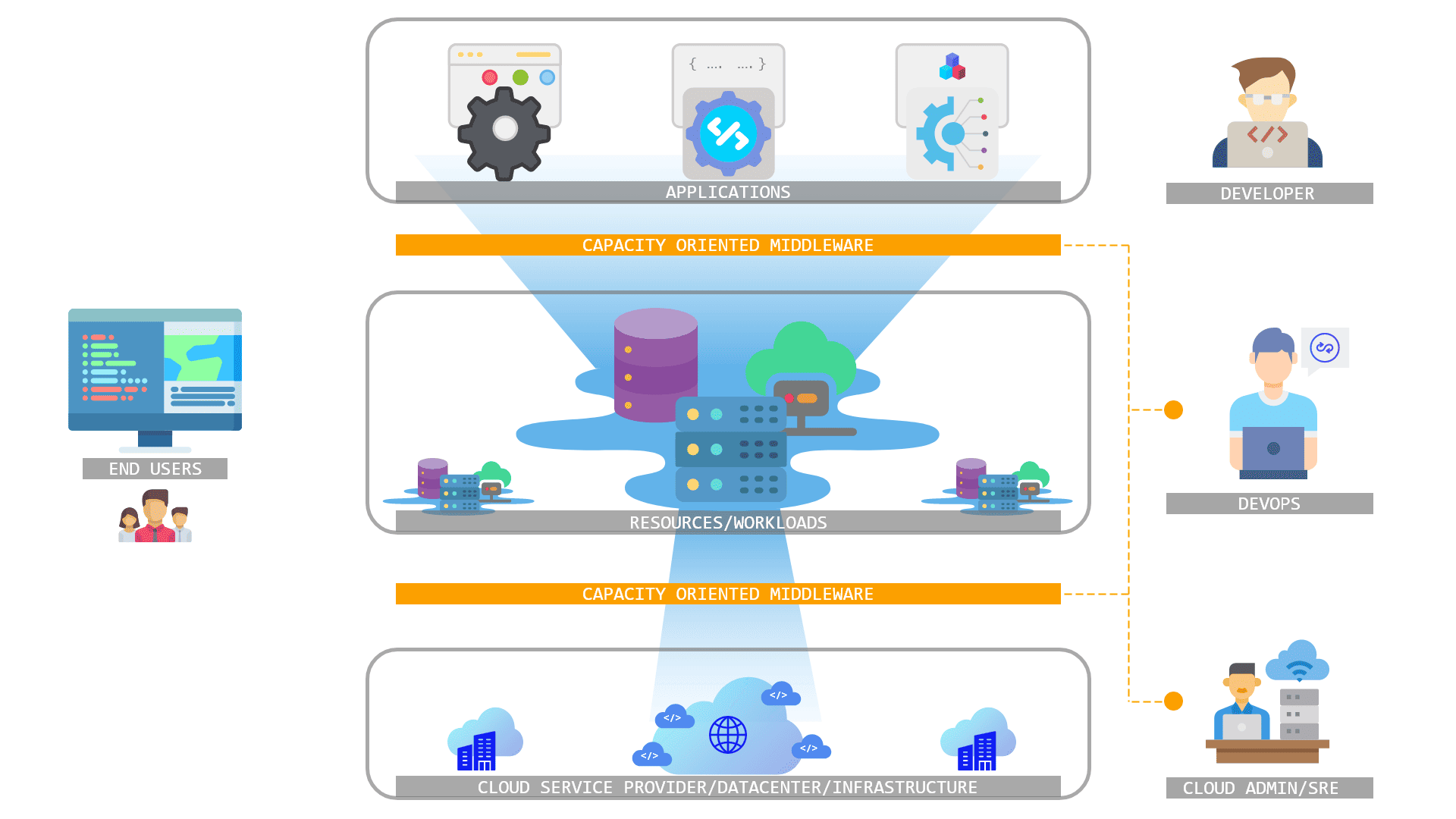

Capacity Oriented Cloud Middleware

Capacity oriented middleware refers to a cloud middleware responsible for managing the capacity utilization of cloud computing resources. It works alongside the cloud workloads to help ensure the right resources are allocated to the right tasks at the right times. Its primary responsibility is to align the capacity of the cloud infrastructure with the demands of the applications running on it.

The specific functions of a capacity oriented middleware can be categorized into:

1. Deployment Automation: Allocation of cloud computing resources during the various stages of the application deployment and scheduling maintenance operations based on specific triggers.

2. Scale Management: Automatic scaling of resources, up or down, based on traffic or other specific requirements.

3. Reliability Management: Distributing workloads across multiple computing resources to optimize resource usage and ensure the application is always available.

4. Performance Monitoring: Keeping track of vital parameters of the cloud computing resources and providing feedback to trigger adjustments.

Deployment Automation

For any application running on the cloud, the deployment of cloud resources is part of the initial provisioning. The entire deployment workflow can be codified by leveraging middleware to build a configuration for deploying cloud infrastructure comprising virtual machines, databases, and other platform services. In addition, security policies, data archival, disaster recovery, and other ancillary configurations are also included to ensure the application is guarded against any eventuality.

There are specific middleware tools that simplify the configuration and deployment of cloud resources. These tools interact with the provisioning layer of the cloud service provider or platform to streamline the bring-up of compute resources, network settings, load balancers, and security policies, and to ensure reproducibility and consistency without human intervention.
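
One way to picture codified deployment is as a comparison between a declared desired state and the state that currently exists. The sketch below is a minimal illustration of that idea, not the behavior of any particular tool; the `Resource` type, its fields, and the `plan` function are hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """A hypothetical cloud resource (illustrative fields only)."""
    name: str
    kind: str  # e.g. "vm", "database", "load_balancer"
    size: str  # e.g. "small", "large"


def plan(desired: list[Resource], actual: list[Resource]) -> dict[str, list[str]]:
    """Return the names of resources to create, update, or delete."""
    desired_by_name = {r.name: r for r in desired}
    actual_by_name = {r.name: r for r in actual}
    shared = desired_by_name.keys() & actual_by_name.keys()
    return {
        "create": sorted(desired_by_name.keys() - actual_by_name.keys()),
        "update": sorted(n for n in shared if desired_by_name[n] != actual_by_name[n]),
        "delete": sorted(actual_by_name.keys() - desired_by_name.keys()),
    }


# Assumed example data: the declared configuration versus what is running.
desired = [Resource("web-1", "vm", "large"), Resource("db-1", "database", "small")]
actual = [Resource("web-1", "vm", "small"), Resource("cache-1", "vm", "small")]
actions = plan(desired, actual)
print(actions)
```

Running the plan a second time against an infrastructure that already matches the declaration yields no actions, which is the property that makes this style of deployment reproducible.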

Scale Management

Cloud infrastructure requires an on-demand scaling model for the provisioning of resources. Scale management ensures that the resources can be dynamically provisioned based on the volume of traffic processed by the cloud workloads hosted on the infrastructure. Capacity oriented middleware handles this operation seamlessly so that the organization does not have to maintain a large 24/7 staff to create, configure, and manage cloud resources.

There are two broad approaches to scaling. Vertical scaling increases the capacity of an individual compute resource, for example by adding CPU cores or memory, and relies on the application using multi-core processors, threads, and non-blocking I/O to handle requests in parallel. In contrast, horizontal scaling involves adding or removing resource instances from the infrastructure to rebalance the load.

Most major cloud service providers offer auto-scaling, which is the ability of the cloud service to automatically adjust the amount of computational resources based on actual usage. Public cloud services such as AWS and GCP have built-in platform services that act directly upon the infrastructure resources to handle scaling based on an application's needs. In such cases, resources are pooled based on specific policies to achieve high concurrency.
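
As an illustration of a horizontal scaling decision, the sketch below applies a simple proportional rule: the instance count is scaled by the ratio of the observed metric to its target and then clamped to configured bounds. The function name, parameters, and default bounds are assumptions made for this example and do not describe any specific provider's policy.

```python
import math


def desired_instances(current: int, metric: float, target: float,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Proportional scaling rule (illustrative, not a provider API).

    `metric` is the observed average (e.g. CPU percent per instance) and
    `target` is the value the policy tries to maintain.
    """
    if target <= 0:
        raise ValueError("target must be positive")
    raw = math.ceil(current * metric / target)
    # Clamp to the configured bounds so a metric spike cannot scale without limit.
    return max(min_instances, min(max_instances, raw))


print(desired_instances(current=4, metric=90.0, target=60.0))   # scale out
print(desired_instances(current=4, metric=30.0, target=60.0))   # scale in
print(desired_instances(current=8, metric=120.0, target=60.0))  # capped at max
```

Real policies usually add cooldown periods and tolerance bands so that small fluctuations do not cause repeated scaling; those are left out here for brevity.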

Reliability Management

Reliability management concerns managing failures in the infrastructure. If something goes wrong, the capacity oriented middleware can help ensure the cloud service continues functioning correctly. Strategies for achieving reliability involve redundancy, where multiple resource instances are run so the others can take over if one fails.

Load balancing is a crucial function of reliability management. It ensures that workloads across multiple computing resources are optimized for maximum throughput and minimum response time while avoiding overload on any single resource.
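
The combination of redundancy and load balancing can be sketched with a least-connections strategy that skips instances marked unhealthy. This is one of several possible strategies, shown under simplifying assumptions; the class and method names are hypothetical, and health status is set manually rather than by real health checks.

```python
class LeastConnectionsBalancer:
    """Pick the healthy backend with the fewest in-flight requests (a sketch)."""

    def __init__(self, backends: list[str]):
        self.active = {b: 0 for b in backends}  # in-flight requests per backend
        self.healthy = set(backends)

    def mark_down(self, backend: str) -> None:
        self.healthy.discard(backend)

    def mark_up(self, backend: str) -> None:
        if backend in self.active:
            self.healthy.add(backend)

    def acquire(self) -> str:
        """Choose a backend for a new request and count it as in flight."""
        candidates = [b for b in self.active if b in self.healthy]
        if not candidates:
            raise RuntimeError("no healthy backends available")
        chosen = min(candidates, key=lambda b: self.active[b])
        self.active[chosen] += 1
        return chosen

    def release(self, backend: str) -> None:
        """Call when a request finishes."""
        self.active[backend] = max(0, self.active[backend] - 1)


lb = LeastConnectionsBalancer(["vm-a", "vm-b", "vm-c"])
first_three = [lb.acquire() for _ in range(3)]
lb.mark_down("vm-b")         # simulate a failed instance
lb.release("vm-b")
after_failure = lb.acquire()  # traffic goes to a remaining healthy instance
```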

Performance Monitoring

All the above functions of capacity oriented middleware can only be optimally executed if there is a way to track how well the overall workload performs and make adjustments as needed. This could involve collecting metrics like CPU usage, memory usage, network bandwidth, etc.

Performance monitoring is a crucial aspect of managing cloud environments. It acts as a feedback loop for triggering other scale and reliability management tasks. It is a continuous process to ensure that the infrastructure and workloads are always optimized for the best possible quality of service.
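
The feedback loop can be illustrated with a small monitor that averages recent CPU samples and emits a scaling signal once the average crosses a threshold. The window size, thresholds, and signal names below are assumptions chosen for the example.

```python
from collections import deque


class CpuMonitor:
    """Sliding-window monitor that turns metrics into scaling signals (a sketch)."""

    def __init__(self, window: int = 5, high: float = 80.0, low: float = 20.0):
        self.samples = deque(maxlen=window)  # keeps only the most recent samples
        self.high = high
        self.low = low

    def record(self, cpu_percent: float) -> str:
        """Store a sample and return 'scale_out', 'scale_in', or 'hold'."""
        self.samples.append(cpu_percent)
        if len(self.samples) < self.samples.maxlen:
            return "hold"  # not enough data yet to make a decision
        average = sum(self.samples) / len(self.samples)
        if average > self.high:
            return "scale_out"
        if average < self.low:
            return "scale_in"
        return "hold"


monitor = CpuMonitor(window=3)
signals = [monitor.record(value) for value in (90, 95, 85, 10, 5, 5)]
print(signals)
```

Averaging over a window rather than reacting to single samples keeps a brief spike from triggering an unnecessary scaling action.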

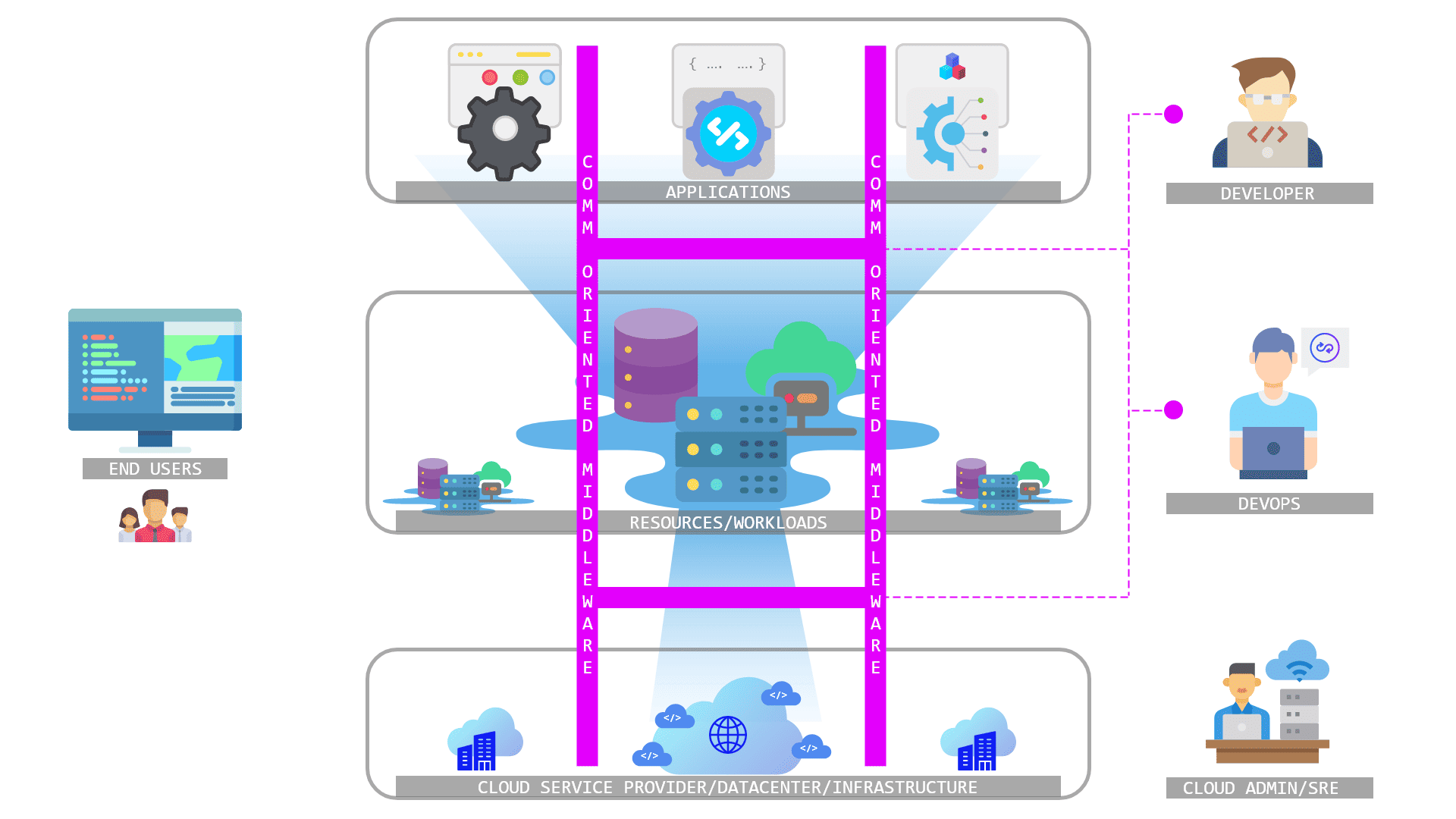

Communication Oriented Cloud Middleware

Communication oriented cloud middleware refers to a specific type of middleware designed to facilitate communication and interaction between different components, platforms, or application services in a cloud computing environment. It focuses on providing a scalable, secure, and resilient messaging substrate to connect and integrate distributed cloud applications.

Communication oriented cloud middleware can be categorized into the following types:

1. Message Broker: Responsible for managing the messaging infrastructure and exchanging messages between cloud components.

2. Protocol Bridge: Responsible for facilitating interoperability between multiple protocols to ensure compatibility in communication.

3. Service Proxy: Responsible for acting as an interface or gateway between a client (such as a user, application, or device) and a backend service or server to mediate the communication between the client and the actual backend infrastructure.

Message Broker

A message broker is an intermediary software component that facilitates communication and data exchange between different applications, services, or components in a distributed cloud computing environment. The message broker decouples the producers and consumers of messages to ensure reliable and scalable message processing.

The key functions of a message broker are:

- Routing of messages: Message brokers receive messages from producers (senders) and route them to one or more consumers (receivers) based on predefined rules and policies. This routing can be one-to-one, one-to-many, or many-to-many.

- Ensuring quality of service: Internally, the message broker maintains a message queue to accumulate and dispatch messages based on specific priorities. It intelligently manages several queues based on specific messaging priorities, such as real-time or asynchronous notifications.

- Ensuring scalability: Message brokers must be designed to handle increased message traffic and support high-demand scenarios. Vertical scaling is adopted to increase the hardware capacity of the computing resources hosting the message broker. They can also be chained together to distribute the message load across multiple brokers for horizontal scalability.

- Ensuring reliability and security: Message brokers typically offer features like message acknowledgment and retry mechanisms to ensure the reliable delivery of messages, even in the case of network failures or application crashes. Additionally, they must implement security measures, such as authentication and authorization, to control access to the messaging system and protect sensitive data.

Given the scale of distributed cloud applications deployed using a cloud-native approach and microservices patterns, message brokers generally need a scalable approach for handling messages.

For real-time messaging, the publish-subscribe pattern is well suited to managing many senders and receivers. Webhooks are a widely used approach for asynchronous events and notifications since they build on plain HTTP requests, in the same way as the well-adopted REST API style.

Message brokers adopt one of these patterns, along with open or proprietary protocols, to build their internal message queuing and delivery mechanism. Apart from delivering messages over IP networks, some message brokers also support legacy channels such as SMS and email.
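
To make the routing, acknowledgment, and retry functions more concrete, here is a minimal in-memory publish-subscribe sketch. It is a teaching aid under strong assumptions (single process, no persistence, no security), and every name in it, including `InMemoryBroker` and the boolean acknowledgment convention, is hypothetical.

```python
from collections import defaultdict, deque
from typing import Callable

Handler = Callable[[str], bool]  # a handler returns True to acknowledge a message


class InMemoryBroker:
    """Toy pub-sub broker with per-subscriber retry and a dead-letter list."""

    def __init__(self, max_attempts: int = 3):
        self.subscribers: dict[str, list[Handler]] = defaultdict(list)
        self.queue: deque = deque()
        self.dead_letters: list[tuple[str, str]] = []
        self.max_attempts = max_attempts

    def subscribe(self, topic: str, handler: Handler) -> None:
        self.subscribers[topic].append(handler)

    def publish(self, topic: str, message: str) -> None:
        # One-to-many routing: enqueue one delivery per subscriber of the topic.
        for handler in self.subscribers.get(topic, []):
            self.queue.append((topic, message, handler, 1))

    def dispatch(self) -> None:
        while self.queue:
            topic, message, handler, attempt = self.queue.popleft()
            if handler(message):
                continue  # acknowledged
            if attempt < self.max_attempts:
                self.queue.append((topic, message, handler, attempt + 1))
            else:
                self.dead_letters.append((topic, message))


received = []
calls = {"flaky": 0}


def reliable(message: str) -> bool:
    received.append(message)
    return True


def flaky(message: str) -> bool:
    calls["flaky"] += 1
    return calls["flaky"] >= 2  # fails once, then acknowledges


broker = InMemoryBroker()
broker.subscribe("orders", reliable)
broker.subscribe("orders", flaky)
broker.publish("orders", "order-42 created")
broker.dispatch()
```

The producer never calls the consumers directly, which is the decoupling described above; a delivery that keeps failing ends up in the dead-letter list instead of blocking the queue.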

Protocol Bridge

A protocol bridge is a middleware component that enables interaction and data exchange between incompatible endpoints (services or applications on a distributed cloud deployment) that use different communication protocols. The primary purpose of a protocol bridge is to facilitate communication and data exchange between these endpoints by translating data between disparate protocols.

The key functions of a protocol bridge are:

- Protocol Translation: The primary role of a protocol bridge is to translate data between different communication protocols. This enables an application using one protocol to communicate seamlessly with an application using another protocol.

- Data Format Conversion: Protocol bridges may optionally perform data format conversions. For example, they can convert data between different data serialization formats, such as JSON, XML, or binary formats.

- Communication Transparency: A protocol bridge has to keep communication transparent, so that the users or applications interacting with it need not be aware of the underlying protocol differences. As part of this handling, the protocol bridge should also minimize delays and avoid data corruption during protocol translation.

Protocol bridges are mostly used for interoperating with legacy systems or translating between incompatible data formats. Typical examples involving legacy systems are IoT and industrial automation, where modern software needs to interface with legacy equipment. Incompatible data formats also arise in similar situations, where data must be converted from a binary to a text-based format or vice versa.
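
The binary-to-text conversion mentioned above can be sketched as follows. The frame layout (a big-endian 16-bit device ID, a signed 16-bit temperature in tenths of a degree, and a one-byte status flag) is invented for this example and does not correspond to any real device protocol.

```python
import json
import struct

# Assumed legacy frame layout: >H = uint16 device id, h = int16 temperature
# in tenths of a degree Celsius, B = uint8 status (1 means online).
FRAME = struct.Struct(">HhB")


def binary_to_json(frame: bytes) -> str:
    """Translate a legacy binary frame into a JSON document."""
    device_id, temp_tenths, status = FRAME.unpack(frame)
    return json.dumps(
        {"device_id": device_id, "temperature_c": temp_tenths / 10, "online": status == 1},
        sort_keys=True,
    )


def json_to_binary(payload: str) -> bytes:
    """Translate the JSON document back into the legacy binary frame."""
    data = json.loads(payload)
    return FRAME.pack(
        data["device_id"],
        round(data["temperature_c"] * 10),
        1 if data["online"] else 0,
    )


legacy_frame = FRAME.pack(7, 215, 1)
as_json = binary_to_json(legacy_frame)
print(as_json)
round_trip = json_to_binary(as_json)
```

A bridge built this way is transparent to both sides: the legacy device keeps sending five-byte frames while the modern service only ever sees JSON.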

Some special forms of protocol bridges also operate at the network layer. They are used in network security and firewall configurations to allow specific protocols to pass through while still enforcing security policies. They may inspect and manipulate the protocol traffic for security purposes.

Service Proxy

The service proxy is a middleware component that acts as an intermediary between the clients and backend services and controls the interactions between them. It essentially mirrors the backend interface to enhance the security, scalability, performance, and manageability.

The key functions and characteristics of a service proxy are:

- Load Balancing: Service proxies distribute incoming client requests across multiple instances of a backend to achieve load balancing. This ensures efficient resource utilization and improved system performance. Optionally, service proxies may also transform requests and responses as needed, including data format conversion, request routing, or header manipulation. This is especially useful for clients that require specific formats or whose requests have to be forwarded to specific backend applications.

- Rate Limiting: To prevent abuse or overuse of an application service, service proxies can enforce rate-limiting policies, restricting the number of requests a client can make within a certain time frame.

- Security: Service proxies enforce security measures to protect the backend from unauthorized access and potential security threats. They often handle tasks like authentication, authorization, and encryption to ensure that only authorized clients can access the application service.

- Service Discovery: In cloud and microservices environments, proxies can assist in dynamically discovering and locating backend services. This is especially important in a dynamic, containerized environment.

- Caching: Service proxies can cache responses from the backend service, reducing the load on it by serving cached data to clients when applicable. Caching can improve response times and reduce the need for repeated requests to the backend.
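
Of the functions listed above, rate limiting lends itself to a compact illustration. The sketch below uses a token bucket, which is one common technique among several; the class name and parameters are assumptions, and the clock is passed in explicitly so the behavior is deterministic (a real proxy would read a monotonic clock instead).

```python
class TokenBucket:
    """Token-bucket rate limiter for a single client (a sketch)."""

    def __init__(self, capacity: int, refill_per_second: float, now: float = 0.0):
        self.capacity = capacity                  # maximum burst size
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)             # start with a full bucket
        self.last = now

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` may proceed."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


bucket = TokenBucket(capacity=2, refill_per_second=1.0)
decisions = [
    bucket.allow(0.0),  # first request in the burst
    bucket.allow(0.0),  # second request in the burst
    bucket.allow(0.0),  # bucket is empty, request rejected
    bucket.allow(1.0),  # one token refilled after a second
    bucket.allow(1.0),  # rejected again
]
print(decisions)
```

A proxy would typically keep one bucket per client key, such as an API key or source address, and respond to rejected requests with an HTTP 429 status.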

Service proxies play a vital role in modern software architectures designed around APIs. They help define a clear API interface that hides the complexities of interacting with multiple backend services behind the scenes. In this way, they provide a unified interface for clients to access backend services.

Modern web servers such as Apache and NGINX can be configured as service proxies. An API gateway is a specialized form of service proxy for serving APIs. Apigee, AWS API Gateway, and Azure API Management are some of the popular API gateways.

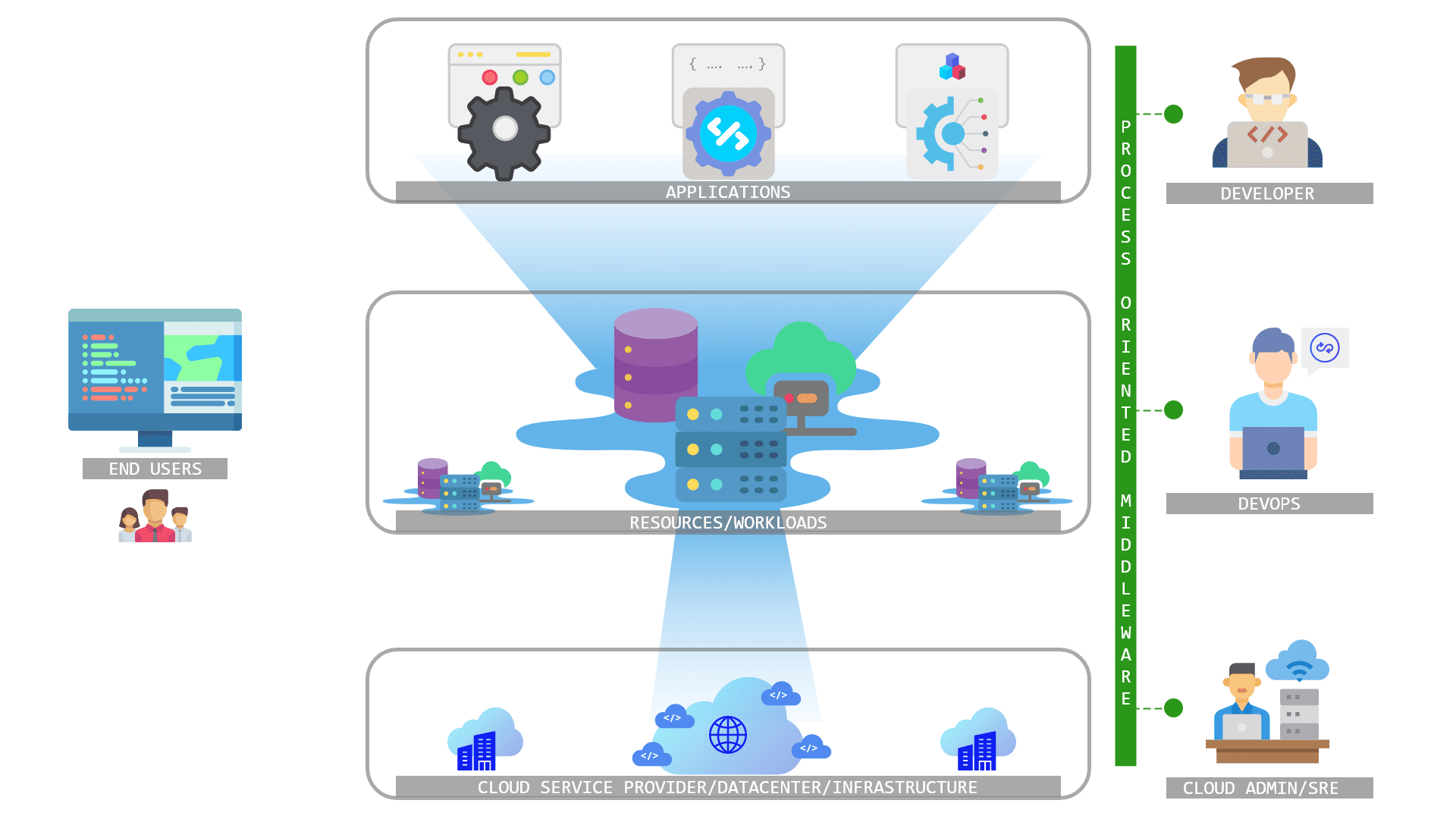

Process Oriented Cloud Middleware

Process oriented cloud middleware is a hardware or software system that facilitates the interaction between the cloud infrastructure and the operations teams, streamlining the processes involved in managing the infrastructure on a day-to-day basis. It plays a crucial role in automating, orchestrating, and managing various aspects of the deployment process as part of DevOps and helps ensure consistent and streamlined deployment pipelines for production applications.

Here are some key aspects of process-oriented middleware:

- Workflow Automation: Process oriented middleware enables the design, modeling, and execution of workflows and processes involved in continuous integration and continuous deployment of cloud applications. These workflows often involve multiple tasks and checklists that must be sequenced and coordinated to achieve streamlined deployment and maintenance.

- Environment Management: This middleware also manages the various environments used for hosting production applications, as well as those used for staging, testing, and development. There are also ancillary environments for disaster recovery and high availability, which have to be spawned from time to time to handle specific situations.

- Monitoring and Analytics: One of the important maintenance chores of this middleware is to monitor application usage, efficiency, performance, and costs. It interfaces with the cloud infrastructure to continuously extract logging and analytics reports to track the health of the infrastructure. Keeping the costs in check while ensuring maximum utilization and throughput of the cloud applications is a core requirement for this middleware.

Overall, process oriented middleware in the cloud plays a crucial role in streamlining and automating DevOps processes and application release cycles, making them more agile and adaptable to changing business needs. It leverages the capabilities and resources of the cloud to enhance efficiency and scalability while ensuring reliability and security.

In some ways, there is an overlap between process oriented middleware and capacity oriented middleware. There are certain tasks related to automation, environment management, and performance monitoring which are actually the responsibility of capacity oriented middleware. However, they may be triggered by process oriented middleware.

Other Forms of Cloud Middleware

Deploying and maintaining cloud-based applications at a global scale brings additional challenges, and some purpose-built middleware platforms exist specifically to ease them. Here are some of the important complexities in global cloud deployment where cloud middleware plays a role.

Managing Hybrid or Multi Cloud Deployment

To ensure 24/7 availability, reliability, and speedier access from anywhere in the world, many applications are hosted based on a hybrid or multi cloud deployment model. A hybrid cloud infrastructure blends two or more different types of clouds, such as public and private clouds. Multi-cloud refers to the use of two or more public cloud providers, such as AWS, Azure, and Google Cloud.

Both approaches have pros and cons regarding critical factors such as cost and security. However, one thing that adds to the burden for both forms of deployment is the complexity of management. These complexities span across:

- Integration: Different cloud deployments may have different architectures, configurations, and security requirements. Integrating such cloud environments requires additional middleware processing to ensure seamless communication and data exchange between them.

- Migration: As enterprises move their workloads and data to and from different cloud environments, migration adds overhead that can lead to delays or errors in deployment. With specific middleware interventions, migration activities can be streamlined to ease the day-to-day maintenance of workloads.

- Compliance: Hybrid or multi cloud deployments can raise regulatory and compliance concerns, as they involve managing sensitive data across different cloud environments hosted in different parts of the world. Ensuring regulatory compliance across these environments requires middleware tools that can audit, provision, and recommend changes in the deployment configuration to ensure strict adherence.

Managing Geographical Distribution

A geographically distributed deployment provides diversified infrastructure and granular control over geographic traffic routing. For example, applications need to efficiently route the delivery of digital content to visitors in a country from the physically nearest possible location to ensure fast and reliable access.
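
A simplified way to picture nearest-location routing is to compute the great-circle distance from the visitor to each serving location and pick the smallest. The location names and coordinates below are approximate and purely illustrative, and production systems usually rely on DNS-based or network-level routing and measured latency rather than raw distance.

```python
import math

# Hypothetical serving locations with approximate (latitude, longitude).
EDGE_LOCATIONS = {
    "frankfurt": (50.11, 8.68),
    "singapore": (1.35, 103.82),
    "virginia": (38.95, -77.45),
}


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def nearest_location(visitor: tuple[float, float]) -> str:
    """Return the name of the serving location closest to the visitor."""
    return min(EDGE_LOCATIONS, key=lambda name: haversine_km(visitor, EDGE_LOCATIONS[name]))


print(nearest_location((48.86, 2.35)))    # a visitor near Paris
print(nearest_location((-6.20, 106.80)))  # a visitor near Jakarta
```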

Middleware platforms and tools are used to manage the complexities related to geographically diverse deployments, such as:

- Multi-location Management: Managing a cloud deployment spanning multiple locations is itself an overhead for operations and maintenance (O&M), since several workflows span two or more regions. Middleware tools can simplify these workflows to ensure smooth and efficient O&M.

- Disaster Management: Middleware platforms also play a key role in decision support for disaster management by integrating data and services from multiple geographies.

- Edge Infrastructure: Middleware platforms are used to orchestrate the data exchange and connectivity between a central cloud infrastructure and location specific edge infrastructure.

Managing Reliability and Quality of Service

A typical cloud infrastructure can provide minimal guarantees for application uptime and service availability based on the configuration of capacity oriented middleware. However, there are certain critical systems, such as safety or mission-critical systems, which require additional guarantees in the form of reliability and quality of service.

In the context of cloud computing, reliability is measured in terms of the deployment uptime. Similarly, quality of service is measured in terms of the response time. Middleware platforms can be leveraged to implement distributed redundancy, which is the key to achieving better reliability and quality of service.
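
The effect of redundancy on uptime can be estimated with a short calculation. The sketch assumes that replicas fail independently and that the service stays up as long as at least one replica is up; real deployments often share failure modes, so the result is an optimistic upper bound. The function names are introduced here for illustration.

```python
def combined_availability(single: float, replicas: int) -> float:
    """Availability of `replicas` independent instances where one is enough.

    The service is down only when every replica is down at the same time,
    which happens with probability (1 - single) ** replicas.
    """
    if not 0.0 <= single <= 1.0 or replicas < 1:
        raise ValueError("single must be in [0, 1] and replicas >= 1")
    return 1.0 - (1.0 - single) ** replicas


def downtime_hours_per_year(availability: float) -> float:
    """Expected downtime in hours over a 365-day year."""
    return (1.0 - availability) * 365 * 24


for n in (1, 2, 3):
    a = combined_availability(0.99, n)
    print(n, round(a, 6), round(downtime_hours_per_year(a), 3))
```

Under these assumptions, two replicas that are each available 99% of the time reduce the chance of a total outage from 1% to 0.01%, which is why distributed redundancy is the usual route to stronger uptime guarantees.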

Cloud Middleware as a Service

Similar to the usual ‘as a service’ model from cloud service providers, cloud middleware platforms are also available under the same model.

All the major forms of cloud middleware, such as capacity, communication, and process oriented middleware platforms, are available as managed services running on a cloud platform and offer scalability, connectivity, availability, and cost-efficiency. They can also integrate with various cloud services, such as storage, databases, analytics, and machine learning.

However, a few specific types of middleware-as-a-service platforms have taken off in recent times.

Integration Platform as a Service (iPaaS)

iPaaS, or Integration Platform as a Service, is a cloud-based platform that allows organizations to build integrations between diverse and disparate applications, processes, and data, whether in the cloud or on-premises. iPaaS makes communication between application workloads and process automation easier, thereby simplifying the data flows between collaborating applications.

Environment as a Service (EaaS)

EaaS, or Environment as a Service, is a cloud-based service that provides pre-configured environments for developers to build, test, and deploy applications. EaaS simplifies the process of environment provisioning by providing preconfigured environments and server configurations, saving time on manual tasks.

Function as a Service (FaaS)

FaaS, or Function as a Service, is a specialized form of middleware that provides an ephemeral computing environment for developers to write, deploy, and execute code as a self-contained function. Unlike typical application code that is deployed on pre-provisioned infrastructure, FaaS offers an on-the-fly alternative in which developers only have to worry about the business logic that connects the frontend application to the backend data storage. In this way, FaaS treats the backend as a middleware between the user and the database.
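
A self-contained function of this kind might look like the sketch below. The event shape, the response shape, and the `handle` name are assumptions made for illustration and are not tied to any provider's runtime; an in-memory dictionary stands in for the backend data storage.

```python
import json

# Stand-in for a backend data store (hypothetical data).
FAKE_DB = {"u1": {"name": "Ada", "plan": "pro"}}


def handle(event: dict) -> dict:
    """Look up a user by ID and return an HTTP-like response dictionary."""
    user_id = event.get("path_params", {}).get("user_id")
    record = FAKE_DB.get(user_id)
    if record is None:
        return {"status": 404, "body": json.dumps({"error": "user not found"})}
    return {"status": 200, "body": json.dumps(record)}


# The platform, not the developer, would invoke the function per request.
found = handle({"path_params": {"user_id": "u1"}})
missing = handle({"path_params": {"user_id": "nope"}})
print(found["status"], missing["status"])
```

The function holds no state between invocations, which is what allows a platform to create and discard execution environments on demand.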

Trends in Cloud Middleware

The identity of a middleware depends on the context. Essentially, it is glue software that connects two disparate systems. We encounter middleware every day when we use a computer. For example, humans and computers do not speak the same language, so the operating system acts as middleware between them. The same goes for programming languages, whose compilers and interpreters translate human-readable code into machine instructions.

During the days of desktop computing, middleware was limited to OS-level modules, shared libraries, and tools. But in today’s cloud and distributed computing era, middleware has a more complex functionality to perform, as we have seen above.

Some of these functionalities have become so common that they are now built directly into the programming language or infrastructure, so that developers and software architects do not have to deal with additional components in the form of middleware. For example, API calls are so ubiquitous in a modern cloud-hosted application that some programming languages, such as Ballerina, have incorporated API calling and error handling as built-in features, which were otherwise handled through a middleware library or a separate platform.

Today, the scope of cloud middleware exists in the form of:

- Application Integration: iPaaS and similar platforms that stitch together user and system workflows across multiple applications.

- Infrastructure Automation: Infrastructure as Code platforms that handle all complexities related to deployment, hosting, and orchestration between workloads.

- Internal Developer Platform: A developer friendly middleware platform that streamlines a lot of the DevOps workflows in managing multi cloud and hybrid cloud deployments.

- Security: A whole host of security middleware platforms and tools that perform checks, audits, and automated remediation related to multifarious security threats.