As the internet grows more complex and distributed, and as the number of internet-connected devices increases, it has become even harder to ensure fast, reliable, and secure application delivery. Because applications now form the backbone of how we work, play, learn, and stay connected in our digital world, application traffic management is a key problem to solve.

This post was originally published in NS1.

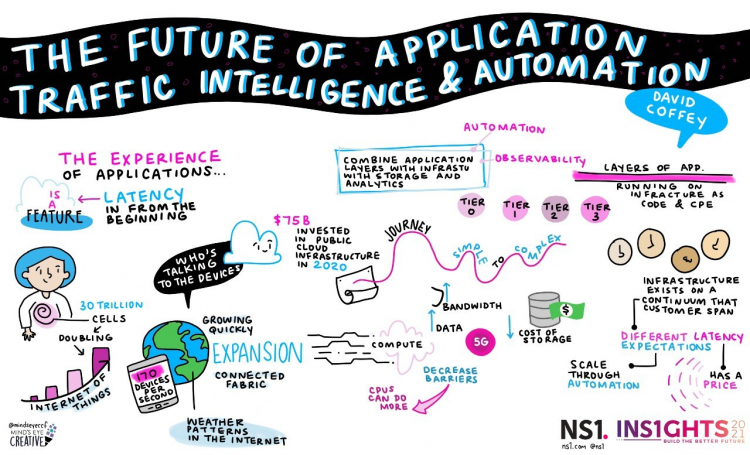

In particular, controlling for low latency, a critical part of an exceptional application experience, has become not only more complicated but also more important. In today’s environment, latency can no longer be treated as an issue to solve after development or something to deal with as you roll out into production. Instead, it needs to be treated as a tier-one feature, fundamental to the success of your application and the user experience.

At INS1GHTS 2021: Build the Better Future, our CPO David Coffey provided an in-depth look at what has changed in application delivery environments, and why it requires a new approach to latency. Keep reading for key takeaways from his session, or watch the full session below for a deeper dive.

Change #1: Application Audiences Are More Distributed

First, your audiences are more geographically distributed than ever before. Application delivery environments need to cover more territory, which makes it more difficult to keep latency low.

For example, your employees are working from remote locations rather than corporate headquarters. Your customers are accessing applications and websites from smartphones on cellular networks, home WiFi, and so on. Some of this is driven by the decreasing cost and increasing performance of bandwidth. 5G, for example, costs an order of magnitude less today than 4G and LTE did, so it now makes economic sense to transport large quantities of data wirelessly.

The number and variety of devices in your network environment have exploded as well. By some counts, about 170 new devices connect to the internet every second. This makes it even more challenging to ensure performant delivery.

Change #2: Application Delivery Environments Have Gotten More Complex

In response to these and other trends, networking infrastructure has grown more complex. Gone are the days of a centrally managed data center and application stack. Today, companies rely on private and public clouds, on-prem infrastructure, serverless platforms, containers, and so on.

Pushing your infrastructure closer to your end users helps control latency in some respects. However, increased complexity without automation also increases the risk of outages and other issues, because human errors and bugs are magnified.

There are also more factors outside your control that can increase latency. Your cloud or CDN provider can experience an outage, whether due to a DDoS attack or human error, and can in turn take down your site or application (unless you have invested in redundancy within your infrastructure). The device or network your user relies on to reach your application may have issues of its own (e.g., a slow home WiFi network or a poor cellular connection).
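To make the redundancy point concrete, here is a minimal Python sketch of latency-aware endpoint selection with failover. It is an illustration only, not NS1’s implementation: the endpoint names and the `pick_endpoint` helper are hypothetical, and it assumes you already have a recent latency measurement for each endpoint, with `None` marking an endpoint whose health check failed.

```python
from typing import Dict, Optional


def pick_endpoint(latency_ms: Dict[str, Optional[float]]) -> Optional[str]:
    """Return the healthy endpoint with the lowest measured latency.

    A value of None marks an endpoint whose health check failed
    (an assumption of this sketch, not a real provider API).
    """
    healthy = {name: ms for name, ms in latency_ms.items() if ms is not None}
    if not healthy:
        return None  # every endpoint is down; the caller must decide what to do
    return min(healthy, key=healthy.get)


# Hypothetical measurements: cdn-b is currently failing its health check.
measurements = {"cdn-a": 48.0, "cdn-b": None, "cdn-c": 31.5}
print(pick_endpoint(measurements))  # prints cdn-c
```

Because the failed provider is simply skipped, an outage at one provider shifts traffic to the next-fastest option instead of taking the application down.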

Change #3: Operating Models Have Evolved

Given the changes above, operating models have had to change as well. DevOps and infrastructure as code (IaC) make it possible to deploy apps across distributed environments; however, they require infrastructure that is API-first and enables automation. Automation is now key because, without it, it’s nearly impossible to scale up your human resources to manage such a complex, dynamic system.

Visibility of your entire environment is critical to ensuring reliable and fast application delivery. Yet collecting, measuring, and analyzing data on the performance of your entire application delivery environment has grown incredibly complicated. Without API-first infrastructure, it’s nearly impossible to sift through all the data from different pieces of your infrastructure and draw conclusions from it within an actionable time frame.
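As a rough illustration of what analyzing that data can look like once it is available through an API, the Python sketch below groups latency samples by region and reports the median and 95th percentile for each. The region names, the sample values, and the `summarize` helper are all hypothetical, and the nearest-rank percentile is just one simple choice among several.

```python
import math
from collections import defaultdict


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(records):
    """Group (region, latency_ms) records and report p50 and p95 per region."""
    by_region = defaultdict(list)
    for region, latency_ms in records:
        by_region[region].append(latency_ms)
    return {
        region: {"p50": percentile(values, 50), "p95": percentile(values, 95)}
        for region, values in by_region.items()
    }


# Hypothetical samples, as if pulled from several monitoring sources.
records = [
    ("us-east", 20), ("us-east", 30), ("us-east", 40), ("us-east", 200),
    ("eu-west", 50),
]
print(summarize(records))
```

Looking at a high percentile alongside the median matters here because a small share of very slow requests, like the 200 ms sample above, can be invisible in an average yet still define the experience for some users.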

A New Way to Approach Low Latency in Applications

Low latency is not an attribute; it’s a feature. It is a tier-one priority, because latency is a critical part of the end-user application experience. Today’s application audiences have no tolerance for lag, which means performant application delivery is now paramount to business success.

However, achieving it is incredibly complicated, given all of the frameworks, tools, DevOps capabilities, and components required to support and deploy modern applications.

NS1 enables companies to deliver applications with low latency and to treat it as a feature of the system from the beginning, not an issue to resolve after deployment. We enable developers to easily embed low latency into their systems and to deliver an exceptional application experience to their customers and employees.