Have you ever wondered how a retail store keeps track of its shelves? A retail store spread across a large area requires a lot of operational staff to manage the shelves. Replenishing stock is one of the essential chores that keep the business running without interruption. In this blog post, we talk about how technology can help predict when a store shelf needs to be replenished. We showcase automated stock replenishment as a case study for applying machine learning in the retail industry.

Introduction

In today’s highly competitive retail landscape, being able to control and optimize retail execution at the point of sale has never been more critical.

In-store audit solutions today are manual and time-consuming. Auditing takes approximately 15 minutes per product category, involving physical measurements that are prone to human errors and inaccuracies. It is an expensive solution and only a handful of the traditionally conservative FMCG players have embraced progressive technology to achieve this.

While purchase patterns can be obtained from the cash registers, measuring the shelving execution standards is a much more complicated task. The industry’s ability to grow and compete has been challenged by infrastructure, increased competition, and most importantly, the absence of effective tracking and analysis tools for retail execution in the stores.

When we ask questions about size, flavor, geographies, demographics, temperature, and so on, we understand how much intelligence resides in the supply chain of retail goods. What makes a brand more or less successful comes from its ability to turn all that information into actionable knowledge. So how do we do this?

Machine Learning in Retail

Measuring the shelving execution standards is a much more complicated task than following larger sources of market information to gather data.

With image recognition technology, manufacturers and retailers can now understand the marketplace and react in real time. Image recognition can shorten the audit process while improving its accuracy. This means you will have accurate and reliable data on your distribution, know which items are out of stock and what share of the category your products hold, and have a wealth of other actionable insights at your fingertips.

Automated Stock Replenishment

Shelf out-of-stock is one of the leading motivations for technology innovation towards the smart shelf of the future. Traditionally, store staff check the shelves visually to decide when to replenish stock, a task known as planogram compliance monitoring. With image recognition capabilities, the in-store CCTV cameras can be leveraged to detect and count front-facing products at category, range, brand or SKU level to assist the store staff in this tedious process. This reduces human error and removes the scalability limits of manual checking.

This technique can also be applied to real-time, automated replenishment of warehouse shelves used for fulfilling online orders. This can be a big game changer because when you are selling billions of products around the world, even a small percentage of variance has a significant impact.
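To make the idea concrete, here is a minimal sketch of the replenishment decision itself. It assumes the image recognition step has already produced a count of front-facing products per SKU. The `ShelfSlot` structure, the SKU names, and the 50% fill threshold are all hypothetical and are not taken from any product mentioned in this post.

```python
from dataclasses import dataclass


@dataclass
class ShelfSlot:
    sku: str
    expected_facings: int  # facings the planogram calls for (hypothetical values)
    detected_facings: int  # facings counted by the image recognition step


def needs_replenishment(slot, min_fill_ratio=0.5):
    """Return True when the detected facings fall below the chosen fill ratio."""
    if slot.expected_facings <= 0:
        return False  # nothing is planned for this slot, so nothing to refill
    return slot.detected_facings / slot.expected_facings < min_fill_ratio


def replenishment_list(slots, min_fill_ratio=0.5):
    """Return the SKUs that should be refilled, in shelf order."""
    return [s.sku for s in slots if needs_replenishment(s, min_fill_ratio)]


shelf = [
    ShelfSlot("nutella-350g", expected_facings=8, detected_facings=3),
    ShelfSlot("nutella-750g", expected_facings=4, detected_facings=4),
    ShelfSlot("peanut-butter", expected_facings=6, detected_facings=0),
]
print(replenishment_list(shelf))  # ['nutella-350g', 'peanut-butter']
```

In a real store the threshold would probably differ per SKU, depending on how fast each product sells.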

Current Offerings

Amazon Go

Amazon Go uses cameras distributed all around the store, with real-time video analysis by artificial intelligence. Learning from Amazon Go, retailers can eliminate the checkout queue by progressively increasing their use of AI in image/video analytics.

RELEX Solutions

Their technology helps to optimize stock levels based on the priority or role of each SKU to maximize sales and shelf availability while minimizing inventory and waste. It can also integrate with planograms to ensure well-stocked shelves and send alerts when space/demand mismatches occur.

CribMaster

CribMaster offers a solution for automated replenishment and purchasing that triggers as soon as the stock reaches its defined minimum level. It also helps in preventing stock-outs, overstock and dead stock, and in increasing inventory turns.

The Typical Setup for Retail Shelf Monitoring

Images of the shelf can be captured via surveillance cameras, and real-time reports can be pushed to the POS (Point of Sale) system within a few seconds of the data capture. These reports can cover critical areas like shelf share, market operating price of own and competing products, store scores, and out-of-stock incidence of core SKUs as well as POSMs (point-of-sale materials).

Proof Of Concept for Automated Stock Replenishment

We have built a small PoC to demonstrate how the system might work in a real-world scenario.

For the PoC, we took example images from Google of Nutella jars on a shelf and trained our algorithm with ten such images. In particular, we followed the steps to train the kind of sliding window object detector that was first published by Dalal and Triggs in 2005 in the paper titled “Histograms of Oriented Gradients for Human Detection”.

The scope of the PoC is limited to counting the Nutella jars on the shelf. Hence the algorithm is trained to detect the Nutella jars in a shelf image and print their count.
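For readers new to the term, a sliding window detector scores a fixed-size box at many positions in the image and keeps the boxes that score high. The sketch below only illustrates that scanning loop. The window size, stride, and toy scoring function are assumptions, and a real detector such as the one in Dlib also scans several image scales and merges overlapping hits, which is left out here.

```python
def sliding_windows(image_width, image_height, window_width, window_height, stride):
    """Yield (left, top, right, bottom) boxes that scan the image at a fixed stride."""
    for top in range(0, image_height - window_height + 1, stride):
        for left in range(0, image_width - window_width + 1, stride):
            yield (left, top, left + window_width, top + window_height)


def count_detections(windows, score_window, threshold=0.0):
    """Count the windows whose classifier score exceeds the threshold."""
    return sum(1 for box in windows if score_window(box) > threshold)


# An 8x4 "image" scanned with a 4x4 window and a stride of 4 gives two boxes.
boxes = list(sliding_windows(8, 4, 4, 4, stride=4))
print(boxes)  # [(0, 0, 4, 4), (4, 0, 8, 4)]


# Toy scorer standing in for a trained classifier: only the right-hand box "contains a jar".
def toy_score(box):
    return 1.0 if box[0] == 4 else -1.0


print(count_detections(boxes, toy_score))  # 1
```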

Let’s look at some of the machine learning techniques and tools that we are going to use for building this automated stock counting PoC.

Image Recognition using SVM and HOG

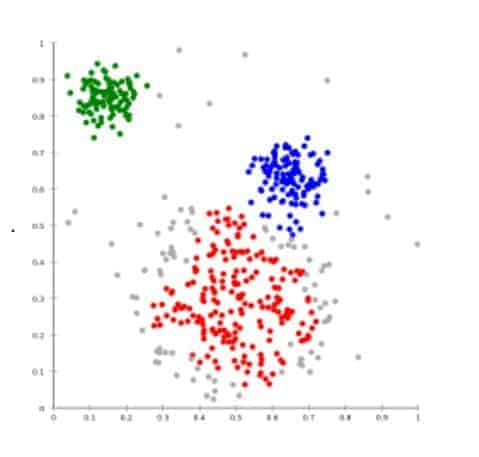

Support Vector Machine (SVM) is a supervised learning algorithm used for classification and regression. Each object or image must first be converted into an n-dimensional vector in which every coordinate holds a numerical value. In short, the object is represented in numerical form.

A key question for such an algorithm is which feature set gives robust visual object recognition. Dalal and Triggs studied this question using linear SVM-based detection as a test case.
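Once an image window has been turned into a feature vector, a linear SVM classifies it with nothing more than a weighted sum. The sketch below shows that decision step. The weights and bias are made-up numbers for illustration; in practice they are learned during training.

```python
def linear_svm_score(weights, bias, features):
    """Return w . x + b; the sign of the score decides the class."""
    if len(weights) != len(features):
        raise ValueError("weights and features must have the same length")
    return sum(w * x for w, x in zip(weights, features)) + bias


def classify(weights, bias, features):
    """Return +1 (object) when the score is positive, otherwise -1 (background)."""
    return 1 if linear_svm_score(weights, bias, features) > 0 else -1


weights = [0.5, -1.0, 2.0]  # hypothetical learned weights
bias = -0.25                # hypothetical learned bias

print(linear_svm_score(weights, bias, [1.0, 0.0, 0.5]))  # 1.25
print(classify(weights, bias, [1.0, 0.0, 0.5]))          # 1
print(classify(weights, bias, [0.0, 1.0, 0.0]))          # -1
```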

Histogram of Oriented Gradients (HOG) is a feature descriptor used in computer vision and image processing for the purpose of object detection. The technique counts occurrences of gradient orientation in localized portions of an image. This method is similar to that of edge orientation histograms, scale-invariant feature transform descriptors, and shape contexts, but differs in that it is computed on a dense grid of uniformly spaced cells and uses overlapping local contrast normalization for improved accuracy.
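To give a feel for what "counting occurrences of gradient orientation" means, here is a simplified sketch that builds the orientation histogram for a single cell. It is not Dlib's implementation: the 9-bin layout and the central-difference gradient are common choices assumed here, and the bin interpolation and block normalization of the full method are left out.

```python
import math


def cell_orientation_histogram(cell, num_bins=9):
    """Histogram of unsigned gradient orientations (0 to 180 degrees) for one cell.

    `cell` is a list of rows of grayscale values. Each interior pixel votes for
    its orientation bin with a weight equal to its gradient magnitude.
    """
    height, width = len(cell), len(cell[0])
    histogram = [0.0] * num_bins
    bin_width = 180.0 / num_bins
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]  # horizontal central difference
            gy = cell[y + 1][x] - cell[y - 1][x]  # vertical central difference
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            histogram[int(angle // bin_width) % num_bins] += magnitude
    return histogram


# A vertical edge: dark on the left, bright on the right.
vertical_edge = [[0, 0, 10, 10]] * 4
# A horizontal edge: dark on top, bright at the bottom.
horizontal_edge = [[0, 0, 0, 0], [0, 0, 0, 0], [10, 10, 10, 10], [10, 10, 10, 10]]

print(cell_orientation_histogram(vertical_edge))    # all the weight lands in bin 0
print(cell_orientation_histogram(horizontal_edge))  # all the weight lands in bin 4
```

The two edges produce votes in different bins, which is exactly the information the SVM later uses to tell shapes apart.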

DLIB: The C++ Toolkit for Machine Learning

Dlib is a modern C++ toolkit containing machine learning algorithms and tools for complex algorithmic analysis. It is used in both industry and academia in a wide range of domains including robotics, embedded devices, mobile phones, and large high-performance computing environments.

Dlib is a collection of useful tools, most of them related to machine learning. In particular, the face detection, landmarking, and recognition example programs are now probably its most popular parts. They are available from Python as well and are a very good entry point for trying out object detection. Dlib has been ported to Android as well.

It has some good machine learning algorithms for image classification and detection. Some of its key computer vision features are:

- Routines for reading and writing common image formats.

- Automatic color space conversion between various pixel types

- Common image operations such as edge finding and morphological operations

- Implementations of the HOG, and FHOG (Felzenszwalb HOG) feature extraction algorithms.

- Tools for detecting objects in images including frontal face detection and object pose estimation.

- High-quality face recognition that also works with OpenCV

Let’s check on the Nutella jars

So you are the store manager and you realize that Nutella is selling like hot cakes. It’s now time to take stock of the inventory displayed on the shelves. Let us head over to this video demonstration.

As you can see in this video, for every image, the count of identified Nutella jars is printed in the console. As with every machine learning problem, the accuracy of the program boils down to the level of training.

In this demo, the counts are more accurate for images containing fewer jars. Accuracy also deteriorates when the jars are presented at an angle. But this can be improved with enough training, so that the system can detect a jar shown at any angle.

Object Counting Project Source

It’s time now to dig into some super technical stuff that works behind the scenes to make the object counting work.

We have hosted the entire demo source code of this PoC on GitHub, with a README file containing instructions for installing and running the program. You need a Python 3 environment to run this code.

Code Walkthrough

Check out the main demo Python script and follow along with this code walkthrough.

Everything starts with the import of necessary library modules.

In this demo, we have trained an object detection algorithm based on a small dataset of images in the training directory.

The train_simple_object_detector() function has a bunch of options, all of which come with reasonable default values. The next few lines go over some of these options.

Since objects are left/right symmetric, we can tell the trainer to train a symmetric detector. This helps it get the most value out of the training data.

The trainer is a kind of support vector machine and therefore has the usual SVM C parameter.

We also need to tell the trainer how many CPU cores your computer has so that training runs as fast as possible.
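The demo script itself lives in the GitHub repository. As a rough sketch, the three options described above could be set as shown below. The attribute names are the ones exposed by Dlib's `simple_object_detector_training_options`; the value of `C`, the use of `os.cpu_count()`, and the helper function names are assumptions made for illustration.

```python
import os


def configure_training(options, svm_c=5.0, num_threads=None):
    """Set the options discussed above on a Dlib training-options object."""
    options.add_left_right_image_flips = True  # train a left/right symmetric detector
    options.C = svm_c                          # usual SVM C parameter (assumed value)
    options.num_threads = num_threads or os.cpu_count() or 1  # one thread per CPU core
    options.be_verbose = True                  # print progress while training
    return options


def default_training_options():
    """Build a configured options object; requires Dlib to be installed."""
    import dlib  # imported here so configure_training() can be tried without Dlib

    return configure_training(dlib.simple_object_detector_training_options())
```

A larger `C` makes the trainer fit the training data more closely, which can help accuracy but may also lead to overfitting, so it is worth trying a few values.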

Now let’s get to the actual training. The function train_simple_object_detector() does the actual training. It will save the final detector to a file, detector.svm. The input is an XML file that lists the images in the training dataset and also contains the positions of the object boxes.

To create your own XML files, you can use the imglab tool which is part of Dlib. It is a simple graphical tool for labeling objects in images with boxes. For this demo, you can use the pre-created train.xml file in the train folder.
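A minimal sketch of the training call, assuming the `train/train.xml` location mentioned above and the `detector.svm` output name. The wrapper function `train_detector` is hypothetical and only adds a file check; `dlib.train_simple_object_detector()` is the Dlib function that does the work.

```python
import os


def train_detector(training_xml_path, detector_path="detector.svm", options=None):
    """Train a detector from an imglab-style XML file and save it to detector_path."""
    if not os.path.isfile(training_xml_path):
        raise FileNotFoundError(training_xml_path)

    import dlib  # imported late so a missing XML file is reported before anything else

    if options is None:
        # Fall back to Dlib's default option values.
        options = dlib.simple_object_detector_training_options()
    dlib.train_simple_object_detector(training_xml_path, detector_path, options)
    return detector_path


# Example call (needs Dlib and the demo's training data):
# train_detector(os.path.join("train", "train.xml"))
```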

Now that we have an object detector, we can test it. The first statement tests it on the training data. It will print the precision, recall, and then average precision.

However, to get an idea if it really worked without overfitting, we need to run it on images it wasn’t trained on.
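As a sketch of this evaluation step: `dlib.test_simple_object_detector()` takes a labelled XML file and a saved detector and returns the precision, recall, and average precision. The helper `precision_recall` below is not part of Dlib; it is only there to show what the first two numbers mean. The name of the held-out XML file is hypothetical.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Precision: share of detections that are real jars.
    Recall: share of real jars that were detected.
    Both are reported as 0.0 when their denominator is zero (a convention assumed here).
    """
    detected = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / detected if detected else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall


def evaluate(labelled_xml_path, detector_path="detector.svm"):
    """Run Dlib's built-in test on a labelled dataset; requires Dlib."""
    import dlib

    return dlib.test_simple_object_detector(labelled_xml_path, detector_path)


# 8 jars found correctly, 2 false alarms, 4 jars missed:
print(precision_recall(8, 2, 4))

# Example calls (need Dlib, a trained detector, and labelled data):
# print("Training accuracy:", evaluate("train/train.xml"))
# print("Testing accuracy:", evaluate("test/test.xml"))  # hypothetical held-out set
```

If the numbers on the training data are much better than on the held-out images, the detector has probably overfitted.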

Now let’s use the detector as you would in a normal application. First, we will load it from disk.

Now let’s run the detector over the images in the objects folder and display the results.
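These last two steps could look roughly like the sketch below, which loads `detector.svm` and prints the jar count for every image in the objects folder. The helper names and the list of file extensions are assumptions; the Dlib calls used are `dlib.simple_object_detector()` and `dlib.load_rgb_image()`.

```python
import glob
import os


def list_images(folder, extensions=(".jpg", ".jpeg", ".png")):
    """Return the image paths in a folder, sorted for a stable processing order."""
    paths = []
    for ext in extensions:
        paths.extend(glob.glob(os.path.join(folder, "*" + ext)))
    return sorted(paths)


def count_objects(detector_path="detector.svm", image_folder="objects"):
    """Load a trained detector and print the number of detections per image."""
    import dlib  # requires Dlib to be installed

    detector = dlib.simple_object_detector(detector_path)
    counts = {}
    for path in list_images(image_folder):
        image = dlib.load_rgb_image(path)
        detections = detector(image)  # one rectangle per detected jar
        counts[os.path.basename(path)] = len(detections)
        print("{}: {} jar(s) detected".format(path, len(detections)))
    return counts


# Example call (needs Dlib, detector.svm, and the demo images):
# count_objects()
```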

Conclusion

Unlike with other object detectors in OpenCV or other libraries, Dlib needed only a small number of training images and the results were still good. Nutella jars were detected in almost 88% of the images, and in almost 70% of the images where jars were detected, the number of jars detected was good. And we got these results despite the fact that the images used for training and testing were diverse and did not represent the same environment.

A good thing about the Dlib library is that it can run even on small devices like Android phones and Raspberry Pis, which makes Dlib-based object detection solutions handy for IoT applications.

Cover image courtesy of George Tsartsianidis
